I'm trying to recover which features a PCA done with scikit-learn selects as relevant.

A classic example with the Iris dataset:

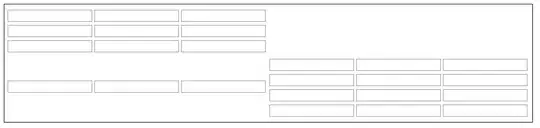

    import pandas as pd
    import pylab as pl
    from sklearn import datasets
    from sklearn.decomposition import PCA

    # load dataset
    iris = datasets.load_iris()
    df = pd.DataFrame(iris.data, columns=iris.feature_names)

    # normalize data
    df_norm = (df - df.mean()) / df.std()

    # PCA
    pca = PCA(n_components=2)
    pca.fit_transform(df_norm.values)
    print(pca.explained_variance_ratio_)

This returns:

    In [42]: pca.explained_variance_ratio_
    Out[42]: array([ 0.72770452, 0.23030523])

How can I recover which two features account for these two explained variance ratios in the dataset? Said differently, how can I get the indices of these features in `iris.feature_names`?

    In [47]: print(iris.feature_names)
    ['sepal length (cm)', 'sepal width (cm)', 'petal length (cm)', 'petal width (cm)']
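For context, each principal component is a linear combination of all four original features rather than a selection of two of them, so there may be no exact "index" to recover. A minimal sketch of one way to inspect this, assuming that "relevant" is taken to mean "largest absolute weight in a component" (my assumption, not something PCA itself defines): the fitted `pca.components_` array has one row per component and one column per feature. The names `loadings`, `top_idx` and `top_names` are only illustrative.

```python
import numpy as np
import pandas as pd
from sklearn import datasets
from sklearn.decomposition import PCA

# Same setup as in the question
iris = datasets.load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df_norm = (df - df.mean()) / df.std()

pca = PCA(n_components=2)
pca.fit(df_norm.values)

# components_ has shape (n_components, n_features):
# row i holds the weight of every original feature in component i
loadings = pd.DataFrame(
    pca.components_,
    columns=iris.feature_names,
    index=["PC1", "PC2"],
)
print(loadings)

# Assumption: treat the feature with the largest absolute weight
# as the "most relevant" one for each component
top_idx = np.abs(pca.components_).argmax(axis=1)
top_names = [iris.feature_names[i] for i in top_idx]
print(top_idx, top_names)
```

Looking at the full `loadings` table is probably more informative than the single top index, since several features can carry similar weight in the same component.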