I'd like to add a generic solution to this topic. After doing some careful research on existing solutions (including Python and R) and datasets (especially biological "omic" datasets). I figured out the following Python solution, which has the advantages of:

Scale the scores (samples) and loadings (features) properly to make them visually pleasing in one plot. It should be pointed out that the relative scales of samples and features do not have any mathematical meaning (but their relative directions have), however, making them similarly sized can facilitate exploration.

Can handle high-dimensional data where there are many features and one could only afford to visualize the top several features (arrows) that drive the most variance of data. This involves explicit selection and scaling of the top features.

An example of final output (using "Moving Pictures", a classical dataset in my research field):

Preparation:

import numpy as np

import matplotlib.pyplot as plt

from sklearn import datasets

from sklearn.preprocessing import StandardScaler

from sklearn.decomposition import PCA

Basic example: displaying all features (arrows)

We will use the iris dataset (150 samples by 4 features).

# load data

iris = datasets.load_iris()

X = iris.data

y = iris.target

targets = iris.target_names

features = iris.feature_names

# standardization

X_scaled = StandardScaler().fit_transform(X)

# PCA

pca = PCA(n_components=2).fit(X_scaled)

X_reduced = pca.transform(X_scaled)

# coordinates of samples (i.e., scores; let's take the first two axes)

scores = X_reduced[:, :2]

# coordinates of features (i.e., loadings; note the transpose)

loadings = pca.components_[:2].T

# proportions of variance explained by axes

pvars = pca.explained_variance_ratio_[:2] * 100

Here comes the critical part: Scale the features (arrows) properly to match the samples (points). The following code scales by the maximum absolute value of samples on each axis.

arrows = loadings * np.abs(scores).max(axis=0)

Another way, as discussed in seralouk's answer, is to scale by range (max - min). But it will make the arrows larger than points.

# arrows = loadings * np.ptp(scores, axis=0)

Then plot out the points and arrows:

plt.figure(figsize=(5, 5))

# samples as points

for i, name in enumerate(targets):

plt.scatter(*zip(*scores[y == i]), label=name)

plt.legend(title='Species')

# empirical formula to determine arrow width

width = -0.0075 * np.min([np.subtract(*plt.xlim()), np.subtract(*plt.ylim())])

# features as arrows

for i, arrow in enumerate(arrows):

plt.arrow(0, 0, *arrow, color='k', alpha=0.5, width=width, ec='none',

length_includes_head=True)

plt.text(*(arrow * 1.05), features[i],

ha='center', va='center')

# axis labels

for i, axis in enumerate('xy'):

getattr(plt, f'{axis}ticks')([])

getattr(plt, f'{axis}label')(f'PC{i + 1} ({pvars[i]:.2f}%)')

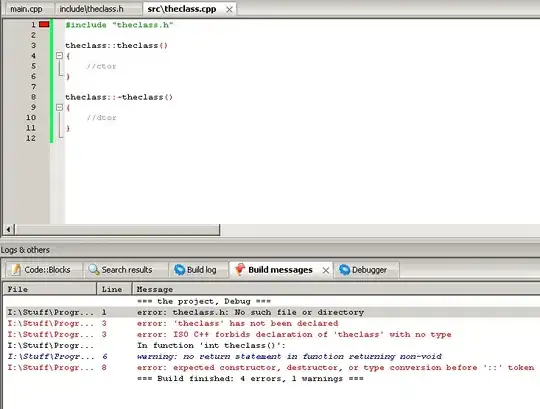

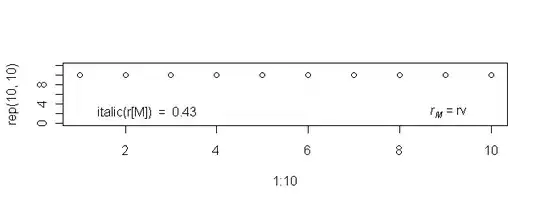

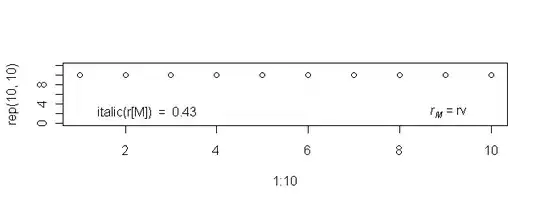

Compare the result with that of the R solution. You can see that they are quite consistent. (Note: it is known that PCAs of R and scikit-learn have opposite axes. You can flip one of them to make the directions consistent.)

iris.pca <- prcomp(iris[, 1:4], center = TRUE, scale. = TRUE)

biplot(iris.pca, scale = 0)

library(ggfortify)

autoplot(iris.pca, data = iris, colour = 'Species',

loadings = TRUE, loadings.colour = 'dimgrey',

loadings.label = TRUE, loadings.label.colour = 'black')

Advanced example: displaying only top k features

We will use the digits dataset (1797 samples by 64 features).

# load data

digits = datasets.load_digits()

X = digits.data

y = digits.target

targets = digits.target_names

features = digits.feature_names

# analysis

X_scaled = StandardScaler().fit_transform(X)

pca = PCA(n_components=2).fit(X_scaled)

X_reduced = pca.transform(X_scaled)

# results

scores = X_reduced[:, :2]

loadings = pca.components_[:2].T

pvars = pca.explained_variance_ratio_[:2] * 100

Now, we will find the top k features that best explain our data.

k = 8

Method 1: Find top k arrows that appear the longest (i.e., furthest from the origin) in the visible plot:

- Note that all features are equally long in the m by m space. But they are different in the 2 by m space (m is the total number of features), and the following code is to find the longest ones in the latter.

- This method is consistent with the microbiome program QIIME 2 / EMPeror (source code).

tops = (loadings ** 2).sum(axis=1).argsort()[-k:]

arrows = loadings[tops]

Method 2: Find top k features that drive most variance in the visible PCs:

# tops = (loadings * pvars).sum(axis=1).argsort()[-k:]

# arrows = loadings[tops]

Now there is a new problem: When the feature number is large, because the top k features are only a very small portion of all features, their contribution to data variance is tiny, thus they will look tiny in the plot.

To solve this, I came up with the following code. The rationale is: For all features, the sum of square loadings is always 1 per PC. With a small portion of features, we should bring them up such that the sum of square loadings of them is also 1. This method is tested and working, and generates nice plots.

arrows /= np.sqrt((arrows ** 2).sum(axis=0))

Then we will scale the arrows to match the samples (as discussed above):

arrows *= np.abs(scores).max(axis=0)

Now we can render the biplot:

plt.figure(figsize=(5, 5))

for i, name in enumerate(targets):

plt.scatter(*zip(*scores[y == i]), label=name, s=8, alpha=0.5)

plt.legend(title='Class')

width = -0.005 * np.min([np.subtract(*plt.xlim()), np.subtract(*plt.ylim())])

for i, arrow in zip(tops, arrows):

plt.arrow(0, 0, *arrow, color='k', alpha=0.75, width=width, ec='none',

length_includes_head=True)

plt.text(*(arrow * 1.15), features[i], ha='center', va='center')

for i, axis in enumerate('xy'):

getattr(plt, f'{axis}ticks')([])

getattr(plt, f'{axis}label')(f'PC{i + 1} ({pvars[i]:.2f}%)')

I hope my answer is useful to the community.

https://cran.r-project.org/web/packages/ggfortify/vignettes/plot_pca.html

https://cran.r-project.org/web/packages/ggfortify/vignettes/plot_pca.html