It is easy for human eyes to tell black from other colors. But how about computers?

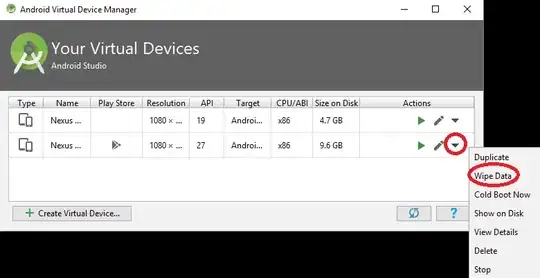

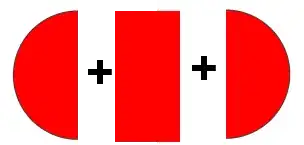

I printed some color blocks on plain A4 paper. A color image is composed of three inks: cyan, magenta and yellow. For the first column of my source image I set the blocks to C=20%, C=30%, C=40% and C=50%, with the other two colors at 0. Up to this point, no black ink (the K of CMYK) should be printed. After that, I added black dots by setting each dot to K=100% with the other colors at 0.

You may find my image weird and ugly. In fact, it is magnified 30 times, so you can clearly see how the ink tricks our eyes. The color stripes make it hard for me to recognize the black dots (each dot is printed as a single pixel at 800 dpi). Without the color background, I used to blur the image and run a Canny edge detector to extract the edges. With a color background, however, simply converting to grayscale and running the edge detector does not give good results because of the stripes. How do my eyes solve this kind of problem?

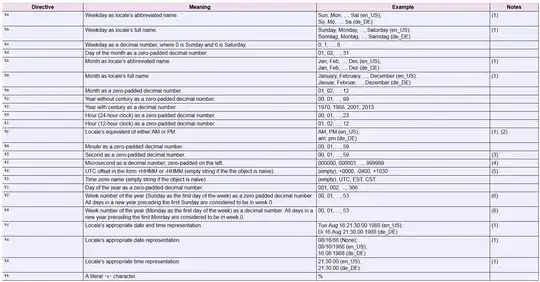

I decided to check the brightness of the source image. I referred to this article and its formula:

brightness = sqrt(0.299 * R^2 + 0.587 * G^2 + 0.114 * B^2)

This brightness is closer to human perception, and it works very well on the yellow background because yellow has the highest brightness compared with cyan and magenta. But how can I make the cyan and magenta stripes as bright as possible? The expected result is that all the stripes disappear.
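To make the comparison concrete, here is a minimal sketch of the brightness formula applied to the pure inks. The `cmyk_to_rgb` helper is my own assumption: it is the naive conversion that ignores ICC profiles and paper color, so a real scan will give different numbers. The 100% coverages are also hypothetical values chosen for illustration.

```python
import math

def cmyk_to_rgb(c, m, y, k):
    """Naive CMYK (0..1) to RGB (0..255). Assumption: no ICC profile, white paper."""
    r = 255 * (1 - c) * (1 - k)
    g = 255 * (1 - m) * (1 - k)
    b = 255 * (1 - y) * (1 - k)
    return r, g, b

def brightness(r, g, b):
    """Weighted root-mean-square brightness, as in the formula above."""
    return math.sqrt(0.299 * r * r + 0.587 * g * g + 0.114 * b * b)

# Hypothetical samples: each ink at full coverage, plus a black dot (K=100%).
samples = {
    "cyan": (1, 0, 0, 0),
    "magenta": (0, 1, 0, 0),
    "yellow": (0, 0, 1, 0),
    "black": (0, 0, 0, 1),
}

levels = {name: brightness(*cmyk_to_rgb(*cmyk)) for name, cmyk in samples.items()}
for name, value in levels.items():
    print(f"{name:8s} {value:6.1f}")
```

Under this simplified model yellow comes out brightest, cyan next and magenta darkest, which would be consistent with the yellow background being the easy case.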

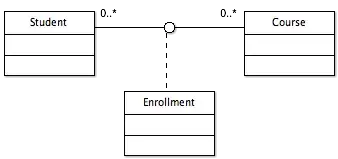

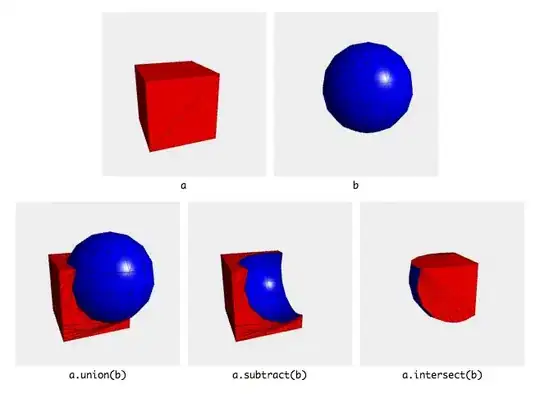

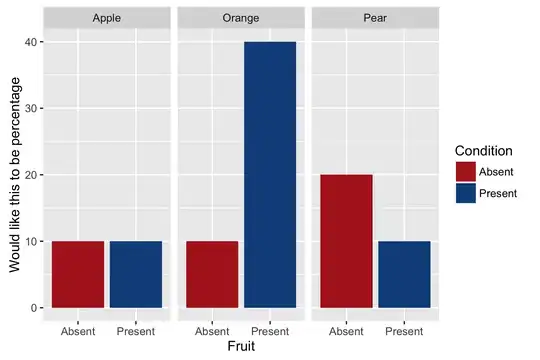

More complicated images:

C=40%, M=40%

C=40%, Y=40%

Y=40%, M=40%

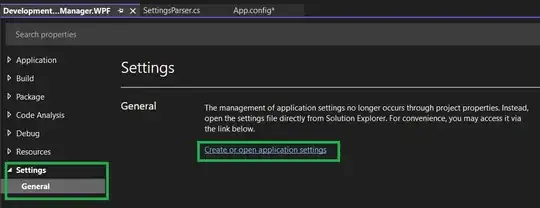

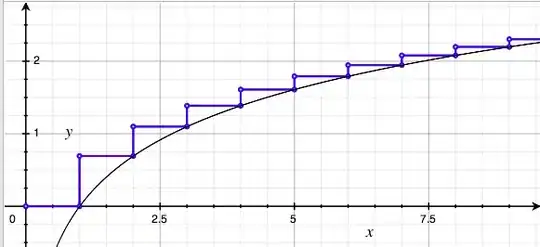

FFT result of C=40%, Y=40% brightness image

Can anyone give me some hints on how to remove the color stripes?

@natan I tried the FFT method you suggested, but I was not lucky enough to get peaks on both the x and y axes. In order to plot the frequencies as you did, I resized my image to a square.
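For reference, this is roughly how the frequency peak can be located with NumPy. It is only a sketch on a synthetic image, not my real scan: the stripe period of 8 pixels, the image size and the `dominant_frequency` helper are all made up for illustration.

```python
import numpy as np

def dominant_frequency(gray):
    """Return (fy, fx), in cycles per pixel, of the strongest non-DC FFT peak."""
    gray = np.asarray(gray, dtype=float)
    # Subtract the mean so the DC term does not dominate the spectrum.
    magnitude = np.abs(np.fft.fft2(gray - gray.mean()))
    ky, kx = np.unravel_index(np.argmax(magnitude), magnitude.shape)
    fy = np.fft.fftfreq(gray.shape[0])[ky]
    fx = np.fft.fftfreq(gray.shape[1])[kx]
    return fy, fx

# Synthetic test image (assumption): vertical stripes with a period of 8 pixels.
height, width = 64, 96
x = np.arange(width)
row = 200 + 40 * np.cos(2 * np.pi * x / 8)
image = np.tile(row, (height, 1))

fy, fx = dominant_frequency(image)
print("fy =", fy, "fx =", fx)
```

Vertical stripes only produce a peak along the x frequency axis, so a peak on a single axis is what this toy case gives. `np.fft.fft2` also accepts rectangular arrays, and `np.fft.fftfreq` reports each axis in cycles per pixel, so in this sketch the image does not have to be square.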