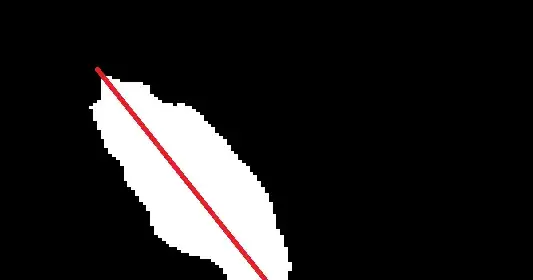

I have a depth image that I generated from 3D CAD data. A similar image could also come from a depth sensor such as the Microsoft Kinect or from a stereo camera. It is essentially a depth map of the points visible from the imaging viewpoint; in other words, it is a segmented point cloud of an object seen from one view.

I would like to determine (an estimate is fine) the surface normal at each point, and then find the tangent plane at that point.
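To make the goal concrete, here is a minimal sketch of one common way to estimate per-pixel normals from a depth map: treat the depth as a height field z(x, y), take central differences, and normalize the vector (-dz/dx, -dz/dy, 1). It is written in plain Python for readability, not speed. The function name `estimate_normals`, the unit pixel spacing, and the convention that background pixels hold the value `0.0` are all assumptions made for illustration; with a real sensor the pixels would first need to be back-projected using the camera intrinsics.

```python
import math

def estimate_normals(depth, background=0.0):
    """Estimate a unit normal per pixel of a depth map given as a list of rows.

    Assumptions (illustrative): x is the column index, y is the row index,
    pixel spacing is 1, and `background` marks pixels with no surface.
    Returns a grid of (nx, ny, nz) tuples, or None where no normal is defined.
    """
    rows, cols = len(depth), len(depth[0])
    normals = [[None] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            # Skip pixels whose 4-neighbourhood touches the background, so
            # differences are never taken across the edge of a partial surface.
            patch = (depth[r][c], depth[r][c - 1], depth[r][c + 1],
                     depth[r - 1][c], depth[r + 1][c])
            if any(d == background for d in patch):
                continue
            # Central differences approximate the depth gradient.
            dzdx = (depth[r][c + 1] - depth[r][c - 1]) / 2.0
            dzdy = (depth[r + 1][c] - depth[r - 1][c]) / 2.0
            # The surface z = f(x, y) has the (unnormalized) normal (-fx, -fy, 1).
            length = math.sqrt(dzdx * dzdx + dzdy * dzdy + 1.0)
            normals[r][c] = (-dzdx / length, -dzdy / length, 1.0 / length)
    return normals
```

Given a normal n at a point p, the tangent plane is the set of points q with n · (q - p) = 0. Skipping pixels next to the background is one simple way to cope with partial surfaces; it leaves a one-pixel border without normals.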

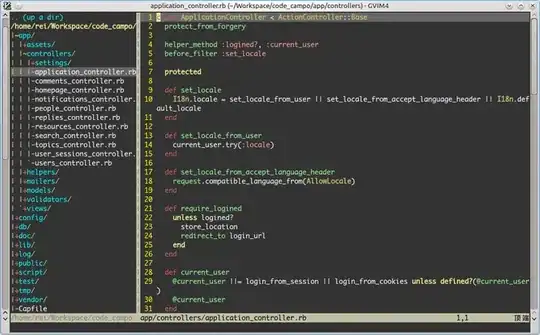

How can I do this? I did some research and found some techniques, but I didn't understand them well enough to implement them. More importantly, how can I do this in Matlab or OpenCV? I couldn't manage it with the `surfnorm` command: AFAIK it needs a single surface, and I have partial surfaces in my depth image.

This is an example depth image.

[EDIT]

What I want to do is this: once I have the surface normal at each point, I will construct the tangent plane at that point. I will then use that tangent plane to decide whether the point lies in a flat region, by summing the distances from its neighboring points to the plane.
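The flatness measure I have in mind could be sketched roughly as follows. The function name `tangent_plane_distance_sum` and the tuple-based point format are hypothetical, chosen only for illustration; the point-to-plane distance itself is the standard |n · (q - p)| for a unit normal n.

```python
import math

def tangent_plane_distance_sum(point, normal, neighbors):
    """Sum of distances from `neighbors` to the tangent plane at `point`.

    `point` and each neighbor are (x, y, z) tuples; `normal` is the surface
    normal at `point` and is normalized here, so it need not be unit length.
    """
    px, py, pz = point
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    nx, ny, nz = nx / length, ny / length, nz / length
    total = 0.0
    for qx, qy, qz in neighbors:
        # Distance from q to the plane through p with unit normal n.
        total += abs(nx * (qx - px) + ny * (qy - py) + nz * (qz - pz))
    return total

# Example: the two neighbors lie in the plane z = 3, so the sum is zero.
flat_score = tangent_plane_distance_sum(
    (1.0, 1.0, 3.0), (0.0, 0.0, 1.0), [(0.0, 1.0, 3.0), (2.0, 1.0, 3.0)]
)
```

A point would then be labeled flat when this sum falls below some threshold. That threshold is not something I have settled on; it would presumably depend on the depth units and the neighborhood size.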