I have the following code, which is supposed to perform an operation over a vector of data and store the result. My problem is that when I run it, each iteration of the outer loop takes about 12 seconds at first, but the iterations gradually get slower until, by the end, each one takes about 2 minutes to complete. What is wrong with my code? Is this related to memory, given the size of the array I am keeping? If so, how can I solve it? If not, what is the problem?

`allclassifiers` is a 2D array with `allclassifiers.shape == (1020, 1629)`, so the outer loop runs 1629 times (as does the inner loop), and each iteration operates on column vectors of length 1020. Each vector is an array of 0s and 1s.
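To put the loop sizes in numbers: the two nested loops visit every ordered pair of columns `(i, j)`, and each call to `combine_crisp` yields one row per entry of `boolean_function`. The contents of `boolean_function` are not shown, so the sketch below assumes it holds the ten codes 1 to 10 that `combine_crisp` handles.

```python
# Rough size check for the nested loops.
n_classifiers = 1629   # allclassifiers.shape[1]
n_functions = 10       # ASSUMPTION: len(boolean_function), i.e. codes 1..10

n_pairs = n_classifiers * n_classifiers   # total calls of the inner-loop body
n_rows = n_pairs * n_functions            # total rows appended to points_comb
print(n_pairs, n_rows)
```

Under that assumption this gives 2,653,641 inner-loop calls and 26,536,410 appended rows, which is close to the `points_comb` row count reported below.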

At the end, `points_comb` is about 400 MB (`points_comb.shape == (26538039, 2)`). I should also mention that I am running the code on a system with 4 GB of RAM.
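The 400 MB figure can be checked with a little arithmetic, assuming `points_comb` has dtype `float64` (8 bytes per element; an assumption, since its initial value is not shown):

```python
import numpy as np

rows, cols = 26538039, 2
itemsize = np.dtype(np.float64).itemsize   # 8 bytes, ASSUMING points_comb is float64
nbytes = rows * cols * itemsize            # total size of the final array in bytes
mib = round(nbytes / 2**20, 1)             # same size in MiB
print(nbytes, mib)
```

That is 424,608,624 bytes, about 404.9 MiB. Because `np.vstack` builds the new array while the old `points_comb` is still referenced, the peak during the last iterations is roughly twice that.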

`valid_list` is a vector of 0s and 1s of length 1020 (`valid_list.shape == (1020,)`).

```python
from datetime import datetime

import numpy as np

# allclassifiers, valid_list, boolean_function and points_comb are defined earlier (not shown)
startTime = datetime.now()

for i in range(allclassifiers.shape[1]):
    for j in range(allclassifiers.shape[1]):
        rs_t = combine_crisp(valid_list, allclassifiers[:, i], allclassifiers[:, j], boolean_function)
        fpr_tmp, tpr_tmp = resp2pts(valid_list, rs_t)
        points_comb = np.vstack((points_comb, np.hstack((fpr_tmp, tpr_tmp))))

    endTime = datetime.now()
    executionTime = endTime - startTime   # time elapsed since startTime
    print("Combination for classifier: " + str(i) + ' ' + str(executionTime.seconds // 60)
          + " minutes and " + str(executionTime.seconds % 60) + " seconds")
```
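One thing worth testing (a likely contributor, not a confirmed diagnosis): `np.vstack` never appends in place. Every call allocates a new array and copies all rows already in `points_comb`, so the cost of one inner iteration grows with the number of rows stored so far, and each pass of the outer loop is slower than the previous one. A minimal sketch of the usual alternative, assuming the final row count is known in advance, is to allocate the output once and write into slices. The names `fill_preallocated`, `fill_vstack`, `make_rows` and `toy_rows` are hypothetical; `make_rows` stands in for the `combine_crisp`/`resp2pts` pair.

```python
import numpy as np


def fill_preallocated(n_cols_a, n_cols_b, rows_per_call, make_rows):
    """Allocate the output once, then fill it slice by slice.

    make_rows(i, j) must return an array of shape (rows_per_call, 2),
    playing the role of np.hstack((fpr_tmp, tpr_tmp)) in the question.
    """
    out = np.empty((n_cols_a * n_cols_b * rows_per_call, 2))
    pos = 0
    for i in range(n_cols_a):
        for j in range(n_cols_b):
            # Writes only the new rows; earlier rows are never copied again.
            out[pos:pos + rows_per_call] = make_rows(i, j)
            pos += rows_per_call
    return out


def fill_vstack(n_cols_a, n_cols_b, make_rows):
    """The pattern from the question: every np.vstack copies all previous rows."""
    out = np.empty((0, 2))
    for i in range(n_cols_a):
        for j in range(n_cols_b):
            out = np.vstack((out, make_rows(i, j)))
    return out


def toy_rows(i, j, rows_per_call=3):
    """Hypothetical stand-in output: rows_per_call copies of the row (i, j)."""
    return np.tile([float(i), float(j)], (rows_per_call, 1))


a = fill_preallocated(4, 5, 3, toy_rows)
b = fill_vstack(4, 5, toy_rows)
print(a.shape, np.array_equal(a, b))
```

Both functions produce the same array; only the preallocated version avoids re-copying. If the row count is not known up front, appending each block to a Python list and calling `np.vstack` once after the loops also avoids the repeated copies.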

def combine_crisp(lab, r_1, r_2, fun):

rs = np.empty([len(lab), len(fun)])

k = 0

for b in fun:

if b == 1: #----------------> 'A AND B'

r12 = np.logical_and(r_1, r_2)

elif b == 2: #----------------> 'NOT A AND B'

r12 = np.logical_not(np.logical_and(r_1, r_2))

elif b == 3: #----------------> 'A AND NOT B'

r12 = np.logical_and(r_1, np.logical_not (r_2))

elif b == 4: #----------------> 'A NAND B'

r12 = np.logical_not( (np.logical_and(r_1, r_2)))

elif b == 5: #----------------> 'A OR B'

r12 = np.logical_or(r_1, r_2)

elif b == 6: #----------------> 'NOT A OR B'; 'A IMP B'

r12 = np.logical_not (np.logical_or(r_1, r_2))

elif b == 7: #----------------> 'A OR NOT B' ;'B IMP A'

r12 = np.logical_or(r_1, np.logical_not (r_2))

elif b == 8: #----------------> 'A NOR B'

r12 = np.logical_not( (np.logical_or(r_1, r_2)))

elif b == 9: #----------------> 'A XOR B'

r12 = np.logical_xor(r_1, r_2)

elif b == 10: #----------------> 'A EQV B'

r12 = np.logical_not (np.logical_xor(r_1, r_2))

else:

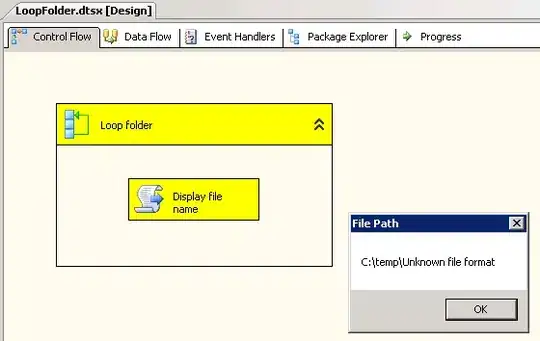

print('Unknown Boolean function')

rs[:, k] = r12

k = k + 1

return rs
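The ten codes in `combine_crisp` can also be expressed as a lookup table. The sketch below (the names `BOOLEAN_FUNCS` and `combine_crisp_table` are hypothetical) returns the same `(len(lab), len(fun))` layout using a dictionary instead of the `if`/`elif` chain, and follows the operation names given in the comments, so code 2 is NOT A AND B and code 6 is NOT A OR B. It is checked against the truth table on all four input combinations.

```python
import numpy as np

# Hypothetical lookup table: code -> function of two boolean arrays.
BOOLEAN_FUNCS = {
    1: lambda a, b: a & b,       # A AND B
    2: lambda a, b: ~a & b,      # NOT A AND B
    3: lambda a, b: a & ~b,      # A AND NOT B
    4: lambda a, b: ~(a & b),    # A NAND B
    5: lambda a, b: a | b,       # A OR B
    6: lambda a, b: ~a | b,      # NOT A OR B (A IMP B)
    7: lambda a, b: a | ~b,      # A OR NOT B (B IMP A)
    8: lambda a, b: ~(a | b),    # A NOR B
    9: lambda a, b: a ^ b,       # A XOR B
    10: lambda a, b: ~(a ^ b),   # A EQV B
}


def combine_crisp_table(lab, r_1, r_2, fun):
    """Column k holds BOOLEAN_FUNCS[fun[k]](r_1, r_2), stored as 0.0/1.0."""
    # Convert 0/1 vectors to booleans so that ~ means logical NOT, not bitwise NOT.
    a = np.asarray(r_1) > 0
    b = np.asarray(r_2) > 0
    cols = []
    for code in fun:
        if code not in BOOLEAN_FUNCS:
            raise ValueError('Unknown Boolean function: %r' % (code,))
        cols.append(BOOLEAN_FUNCS[code](a, b))
    return np.column_stack(cols).astype(float)


# All four (A, B) input combinations; lab only determines the number of rows.
A = np.array([0, 0, 1, 1])
B = np.array([0, 1, 0, 1])
rs = combine_crisp_table(A, A, B, range(1, 11))
print(rs.astype(int).T)
```

Each printed row is one Boolean function evaluated on (A, B) = (0,0), (0,1), (1,0), (1,1).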

```python
def resp2pts(lab, resp):
    """Return (false-positive rate, true-positive rate) for each response column."""
    lab = lab > 0
    resp = resp > 0
    P = np.sum(lab)    # number of positive labels
    N = np.sum(~lab)   # number of negative labels
    if resp.ndim == 1:
        num_pts = 1
        tp = np.sum(lab[resp])
        fp = np.sum(~lab[resp])
    else:
        num_pts = resp.shape[1]
        tp = np.empty([num_pts, 1])
        fp = np.empty([num_pts, 1])
        for i in np.arange(num_pts):
            tp[i] = np.sum(lab[resp[:, i]])
            fp[i] = np.sum(~lab[resp[:, i]])
    tp = np.true_divide(tp, P)
    fp = np.true_divide(fp, N)
    return fp, tp
```
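For a 2D `resp`, the per-column loop in `resp2pts` can be replaced by broadcasting the label vector against all columns at once. This is a minimal sketch (the name `resp2pts_vectorized` is hypothetical) that handles only the 2D case and returns `(num_pts, 1)` arrays, the same shape the loop branch produces.

```python
import numpy as np


def resp2pts_vectorized(lab, resp):
    """Vectorized sketch of resp2pts for resp of shape (n_samples, num_pts).

    Returns fp, tp as (num_pts, 1) arrays of rates.
    """
    lab = np.asarray(lab) > 0
    resp = np.asarray(resp) > 0
    P = np.sum(lab)    # number of positive labels
    N = np.sum(~lab)   # number of negative labels
    # lab[:, None] broadcasts the labels across every response column.
    tp = (resp & lab[:, None]).sum(axis=0).reshape(-1, 1)   # flagged and positive
    fp = (resp & ~lab[:, None]).sum(axis=0).reshape(-1, 1)  # flagged and negative
    return np.true_divide(fp, N), np.true_divide(tp, P)


lab = np.array([1, 0, 1, 1, 0])
resp = np.array([[1, 0], [1, 0], [0, 1], [1, 0], [0, 0]])
fp, tp = resp2pts_vectorized(lab, resp)
print(fp.ravel(), tp.ravel())
```

Here the first column flags samples 0, 1 and 3 (two positives, one negative) and the second flags only sample 2 (one positive), so the rates are fp = [0.5, 0.0] and tp = [2/3, 1/3].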