Generally, floating point error refers to what happens when a number cannot be stored exactly in the IEEE floating point representation.

(Unsigned) integers are stored with the right-most bit being worth 1, and each bit to the left being worth double that (2, 4, 8, ...). It's easy to see that this can store any integer from 0 up to 2^n - 1, where n is the number of bits.
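To make the place values concrete, here is a small Python sketch. The 8-bit width and the bit pattern `1011` are just values picked for illustration.

```python
# Place values of an n-bit unsigned integer, right-most bit first: 1, 2, 4, ...
n = 8  # illustrative width, not tied to any particular machine type
place_values = [2 ** i for i in range(n)]

# Decode the bit pattern 1011 by summing the place values of the set bits.
bits = "1011"
value = sum(int(b) * 2 ** i for i, b in enumerate(reversed(bits)))

# With all n bits set, the sum of the place values is the largest storable integer.
largest = sum(place_values)

print(value)    # 8 + 2 + 1
print(largest)  # 2**n - 1
```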

The mantissa (the fractional part) of a floating point number is stored in a similar way, but moving left to right, with each successive bit worth half of the previous one (1/2, 1/4, 1/8, ...). (It's actually a little more complicated than this, but it will do for now.)

Thus, numbers like 0.5 (1/2) are easy to store, but not every number less than 1 can be created by adding up a finite number of fractions of the form 1/2, 1/4, 1/8, ...
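One way to see which fractions fit is to generate the binary digits by repeated doubling. The sketch below is only an illustration of the idea, not how the hardware does it; `binary_fraction_bits` is a made-up helper name, and it uses `fractions.Fraction` so the arithmetic itself is exact.

```python
from fractions import Fraction

def binary_fraction_bits(x, max_bits=16):
    """Return (bits after the binary point, whether the expansion ended).

    x must satisfy 0 <= x < 1. Hypothetical helper, for illustration only.
    """
    x = Fraction(x)
    bits = []
    for _ in range(max_bits):
        if x == 0:
            break
        x *= 2              # shift the next binary digit in front of the point
        if x >= 1:
            bits.append("1")
            x -= 1
        else:
            bits.append("0")
    return "".join(bits), x == 0

half = binary_fraction_bits(Fraction(1, 2))          # 1/2
five_eighths = binary_fraction_bits(Fraction(5, 8))  # 1/2 + 1/8
tenth = binary_fraction_bits(Fraction(1, 10))        # never terminates

print(half, five_eighths, tenth)
```

1/2 and 5/8 finish after a few bits, while 1/10 is still going when the 16-bit budget runs out.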

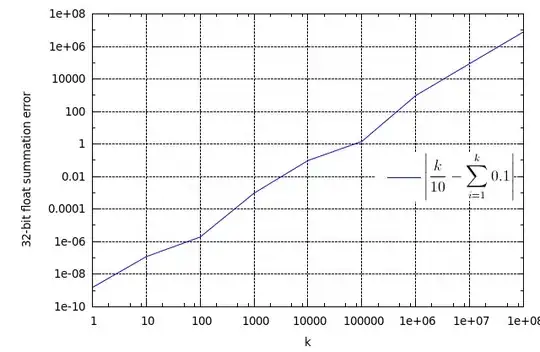

A really simple example is 0.1, or 1/10. This can be written as an infinite series (in binary it is 0.000110011001100..., with 0011 repeating forever), but a computer only has a finite number of bits, so whenever it stores 0.1, it's not exactly this number that is stored.

If you have access to a Unix machine, it's easy to see this:

    Python 2.5.1 (r251:54863, Apr 15 2008, 22:57:26)
    [GCC 4.0.1 (Apple Inc. build 5465)] on darwin
    Type "help", "copyright", "credits" or "license" for more information.
    >>> 0.1
    0.10000000000000001
    >>>
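Newer Python versions print just `0.1` here, because they display the shortest string that round-trips to the same double, but the stored value is unchanged. A short sketch (assuming Python 3) that shows what is actually stored, using the standard `decimal` and `fractions` modules:

```python
from decimal import Decimal
from fractions import Fraction

stored = Decimal(0.1)     # exact decimal expansion of the double nearest to 0.1
as_ratio = Fraction(0.1)  # the same stored value as an exact fraction

print(stored)
print(as_ratio)           # the denominator is a power of two, not 10
print("%.17g" % 0.1)      # 17 significant digits, like the old display above
```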

You'll want to be really careful with equality tests with floats and doubles, in whatever language you are in.
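A common workaround is to compare with a tolerance instead of `==`. Here is a minimal sketch, assuming Python 3.5 or later for `math.isclose`. The `nearly_equal` function is a hypothetical hand-rolled equivalent for older versions, and the tolerance of 1e-9 is just a default that you would tune for your own data.

```python
import math

total = 0.1 + 0.2

exact_match = (total == 0.3)             # False: the two doubles differ in the last bit
close_enough = math.isclose(total, 0.3)  # True with the default rel_tol of 1e-09

def nearly_equal(a, b, rel_tol=1e-9, abs_tol=0.0):
    """Hypothetical stand-in for math.isclose (same documented formula)."""
    return abs(a - b) <= max(rel_tol * max(abs(a), abs(b)), abs_tol)

print(exact_match, close_enough, nearly_equal(total, 0.3))
```

Note that a purely relative tolerance does not help when comparing against zero; that is what `abs_tol` is for.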

(As for your example, 0.2 is another one of those pesky numbers that cannot be stored exactly in IEEE binary, but as long as you are testing inequalities rather than equalities, like p <= 0.2, you'll generally be okay: the tiny representation error only matters if p is supposed to land exactly on 0.2.)
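For what it's worth, here is a small sketch of how such a threshold test behaves, assuming Python 3. The values of `p` and the `slack` of 1e-9 are made up for illustration. Away from the boundary the rounding error is irrelevant; only a value that should be exactly 0.2 can end up on the wrong side.

```python
threshold = 0.2

p = 0.15
well_inside = p <= threshold      # True: the error is far smaller than the gap

# Mathematically 2.2 - 2.0 is exactly 0.2, but the stored 2.2 is slightly too big.
boundary = 2.2 - 2.0
on_boundary = boundary <= threshold          # False: lands just above the stored 0.2

# If boundary cases matter, allow a little slack (illustrative value).
slack = 1e-9
with_slack = boundary <= threshold + slack   # True

print(well_inside, on_boundary, with_slack)
```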