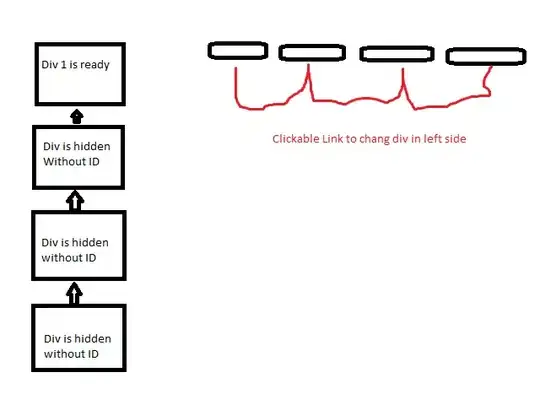

I'm trying to recognize hand positions in OpenCV for Android. I'd like to reduce a detected hand shape to a set of simple lines (= point sequences). I'm using a thinning algorithm to find the skeleton lines of detected hand shapes. Here's an exemplary result (image of my left hand):

In this image I'd like to get the coordinates of the skeleton lines, i.e. "vectorize" the image. I've tried HoughLinesP, but it only produces huge sets of very short line segments, which is not what I want.

My second approach uses findContours:

```java
// Get contours
Mat skeletonFrame; // image above
ArrayList<MatOfPoint> contours = new ArrayList<MatOfPoint>();
Imgproc.findContours(skeletonFrame, contours, new Mat(), Imgproc.RETR_CCOMP, Imgproc.CHAIN_APPROX_SIMPLE);

// Find longest contour
double maxLen = 0;
MatOfPoint max = null;
for (MatOfPoint c : contours) {
    // Util.convert converts between MatOfPoint and MatOfPoint2f
    double len = Imgproc.arcLength(Util.convert(c), true);
    if (len > maxLen) {
        maxLen = len;
        max = c;
    }
}

// Simplify detected contour
MatOfPoint2f result = new MatOfPoint2f();
Imgproc.approxPolyDP(Util.convert(max), result, 5.0, false);
```

This basically works; however, the contours returned by findContours are always closed, which means that each skeleton line is represented twice (the contour runs along the line and then back again).

Example result (gray lines = detected contours, not the skeleton lines of the first image):

So my question is: How can I avoid these closed contours and only get a collection of "single stroke" point sequences?

Did I miss something in the OpenCV docs? I'm not necessarily asking for code; a hint for an algorithm I could implement myself would also be great. Thanks!
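One possible direction, given as a rough sketch and not as an OpenCV feature: instead of following borders, walk the skeleton pixels directly. Pixels with exactly two 8-neighbors are treated as line interior, and all other skeleton pixels (endpoints and junctions) as nodes; every walk from one node to the next yields one open point sequence. The class `SkeletonTracer`, its `trace` method, and the `boolean[][]` input (indexed `[y][x]`) are hypothetical choices for illustration, so the skeleton `Mat` would first have to be copied into such a grid. The sketch assumes a strictly one-pixel-wide skeleton; closed loops that contain no endpoint or junction are not traced, and junctions in real thinning output often form small pixel clusters that would produce extra very short strokes.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical helper: splits a one-pixel-wide skeleton into open strokes.
final class SkeletonTracer {
    // Offsets of the 8 neighbors of a pixel.
    private static final int[] DX = {1, 1, 0, -1, -1, -1, 0, 1};
    private static final int[] DY = {0, 1, 1, 1, 0, -1, -1, -1};

    private static boolean on(boolean[][] s, int x, int y) {
        return y >= 0 && y < s.length && x >= 0 && x < s[y].length && s[y][x];
    }

    // Number of set pixels among the 8 neighbors of (x, y).
    private static int degree(boolean[][] s, int x, int y) {
        int n = 0;
        for (int k = 0; k < 8; k++) {
            if (on(s, x + DX[k], y + DY[k])) n++;
        }
        return n;
    }

    private static String key(int x, int y, int nx, int ny) {
        return x + "," + y + ">" + nx + "," + ny;
    }

    // Returns one list of {x, y} points per stroke between two nodes.
    static List<List<int[]>> trace(boolean[][] s) {
        List<List<int[]>> strokes = new ArrayList<>();
        Set<String> done = new HashSet<>(); // node-to-neighbor steps already walked
        for (int y = 0; y < s.length; y++) {
            for (int x = 0; x < s[y].length; x++) {
                // Only start at nodes: endpoints (degree 1) or junctions (degree >= 3).
                if (!s[y][x] || degree(s, x, y) == 2) continue;
                for (int k = 0; k < 8; k++) {
                    int nx = x + DX[k], ny = y + DY[k];
                    if (!on(s, nx, ny) || done.contains(key(x, y, nx, ny))) continue;
                    strokes.add(walk(s, x, y, nx, ny, done));
                }
            }
        }
        return strokes;
    }

    // Follows degree-2 pixels from node (x, y) via (nx, ny) until the next node.
    private static List<int[]> walk(boolean[][] s, int x, int y, int nx, int ny,
                                    Set<String> done) {
        List<int[]> path = new ArrayList<>();
        path.add(new int[] {x, y});
        path.add(new int[] {nx, ny});
        done.add(key(x, y, nx, ny));
        int px = x, py = y, cx = nx, cy = ny;
        while (degree(s, cx, cy) == 2) {
            for (int k = 0; k < 8; k++) {
                int tx = cx + DX[k], ty = cy + DY[k];
                // Step to the one neighbor that is not the pixel we came from.
                if (on(s, tx, ty) && !(tx == px && ty == py)) {
                    px = cx; py = cy; cx = tx; cy = ty;
                    break;
                }
            }
            path.add(new int[] {cx, cy});
        }
        // Block the reverse walk so the same stroke is not emitted from its other end.
        done.add(key(cx, cy, px, py));
        return path;
    }
}
```

Each returned stroke is an open point sequence, so it could then be simplified individually with approxPolyDP using `closed = false`, as in the contour code above.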