The MethComp package no longer seems to be maintained (it was removed from CRAN).

Russel88/COEF lets you use stat_smooth/geom_smooth with method="tls" to add an orthogonal regression line.

Based on this and wikipedia:Deming_regression, I wrote the following functions, which allow noise ratios other than 1:
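
For reference, these are the closed-form estimates the functions rely on, written with the sample variances and covariance. The symbols σ_y and σ_x (standard deviations of the measurement noise in y and x) are notation assumed here, so that δ is the squared noise ratio:

```latex
\delta = \left(\frac{\sigma_y}{\sigma_x}\right)^2, \qquad
\hat\beta_1 = \frac{s_{yy} - \delta\, s_{xx} + \sqrt{(s_{yy} - \delta\, s_{xx})^2 + 4\,\delta\, s_{xy}^2}}{2\, s_{xy}}, \qquad
\hat\beta_0 = \bar{y} - \hat\beta_1\, \bar{x}
```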

    deming.fit <- function(x, y, noise_ratio = sd(y)/sd(x)) {
      # NULL (as passed on by deming()) falls back to the default ratio
      if (is.null(noise_ratio)) noise_ratio <- sd(y)/sd(x)
      delta <- noise_ratio^2  # ratio of the error variances (y over x)
      x_name <- deparse(substitute(x))
      s_yy <- var(y)
      s_xx <- var(x)
      s_xy <- cov(x, y)
      # closed-form Deming slope, then the intercept through the means
      beta1 <- (s_yy - delta*s_xx + sqrt((s_yy - delta*s_xx)^2 + 4*delta*s_xy^2)) / (2*s_xy)
      beta0 <- mean(y) - beta1 * mean(x)
      res <- c(beta0 = beta0, beta1 = beta1)
      names(res) <- c("(Intercept)", x_name)
      class(res) <- "Deming"
      res
    }
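
As a rough sanity check of the slope formula, here is a sketch on simulated data. A very large noise ratio (all noise attributed to y) should give approximately the ordinary least-squares slope, and a very small one approximately the inverse-regression slope. `deming_slope` is a hypothetical stand-alone helper that repeats the formula so the snippet runs on its own; the data and the tolerance are arbitrary choices.

```r
# Hypothetical helper: same closed-form slope as in deming.fit,
# repeated here so that this snippet is self-contained.
deming_slope <- function(x, y, noise_ratio) {
  delta <- noise_ratio^2
  d <- var(y) - delta * var(x)
  (d + sqrt(d^2 + 4 * delta * cov(x, y)^2)) / (2 * cov(x, y))
}

set.seed(1)
x <- rnorm(200)
y <- 1 + 2 * x + rnorm(200)

ols_slope     <- cov(x, y) / var(x)  # regression of y on x
inverse_slope <- var(y) / cov(x, y)  # regression of x on y, inverted

slope_large <- deming_slope(x, y, noise_ratio = 1e3)   # noise almost only in y
slope_small <- deming_slope(x, y, noise_ratio = 1e-3)  # noise almost only in x

# both differences should be close to zero
c(slope_large - ols_slope, slope_small - inverse_slope)
```

Noise ratios between these extremes give slopes between the two limiting values.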

    deming <- function(formula, data, R = 100, noise_ratio = NULL, ...) {
      # `...` absorbs any extra arguments the caller passes along
      ret <- boot::boot(
        data = model.frame(formula, data),
        statistic = function(data, ind) {
          data <- data[ind, ]  # bootstrap resample
          # model.frame() puts the response first, then the predictor
          args <- rlang::parse_exprs(colnames(data))
          names(args) <- c("y", "x")
          rlang::eval_tidy(rlang::expr(deming.fit(!!!args, noise_ratio = noise_ratio)), data, env = rlang::current_env())
        },
        R = R
      )
      class(ret) <- c("Deming", class(ret))
      ret
    }
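
To illustrate what the object returned by boot::boot looks like, here is a minimal sketch that uses an ordinary lm fit as a stand-in statistic (an assumption made only to keep the snippet short and self-contained). The element `t0` holds the estimates on the original data and `t` holds one row per bootstrap replicate, which is the structure the prediction method relies on.

```r
library(boot)  # recommended package, shipped with standard R installations

set.seed(42)
d <- data.frame(x = rnorm(50))
d$y <- 1 + 2 * d$x + rnorm(50)

# stand-in statistic: intercept and slope of an lm fit on the resampled rows
b <- boot(
  data = d,
  statistic = function(data, ind) coef(lm(y ~ x, data = data[ind, ])),
  R = 100
)

b$t0      # estimates from the original data (length 2)
dim(b$t)  # R rows, one column per coefficient
```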

    predictdf.Deming <- function(model, xseq, se, level) {
      # point prediction from the coefficients fitted on the original data
      pred <- as.vector(tcrossprod(model$t0, cbind(1, xseq)))
      if (se) {
        # one row of predictions per bootstrap replicate
        preds <- tcrossprod(model$t, cbind(1, xseq))
        # percentile band across the replicates
        data.frame(
          x = xseq,
          y = pred,
          ymin = apply(preds, 2, function(x) quantile(x, probs = (1-level)/2)),
          ymax = apply(preds, 2, function(x) quantile(x, probs = 1-((1-level)/2)))
        )
      } else {
        data.frame(x = xseq, y = pred)
      }
    }
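
The percentile band can be sketched in isolation. The coefficient matrix below is made-up random data standing in for the bootstrap replicates (`model$t`), so the numbers mean nothing; the point is only the shape of the computation.

```r
set.seed(1)
# made-up stand-in for model$t: 100 replicates of (intercept, slope)
coefs <- cbind(rnorm(100, mean = 1, sd = 0.1), rnorm(100, mean = 2, sd = 0.1))
xseq  <- seq(0, 5, length.out = 4)
level <- 0.95

# coefs %*% t(cbind(1, xseq)): one row per replicate, one column per x value
preds <- tcrossprod(coefs, cbind(1, xseq))

# percentile limits taken column-wise, i.e. separately at each x value
ymin <- apply(preds, 2, quantile, probs = (1 - level) / 2)
ymax <- apply(preds, 2, quantile, probs = 1 - (1 - level) / 2)

data.frame(x = xseq, ymin = ymin, ymax = ymax)
```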

    # unrelated helper function to create a nicer plot:
    fix_plot_limits <- function(p) p + coord_cartesian(xlim=ggplot_build(p)$layout$panel_params[[1]]$x.range, ylim=ggplot_build(p)$layout$panel_params[[1]]$y.range)

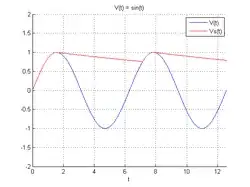

Demonstration:

    library(ggplot2)
    #devtools::install_github("Russel88/COEF")
    library(COEF)
    fix_plot_limits(
      ggplot(data.frame(x = (1:5) + rnorm(100), y = (1:5) + rnorm(100)*2), mapping = aes(x=x, y=y)) +
        geom_point()
    ) +
      geom_smooth(method=deming, aes(color="deming"), method.args = list(noise_ratio=2)) +
      geom_smooth(method=lm, aes(color="lm")) +
      geom_smooth(method = COEF::tls, aes(color="tls"))

Created on 2019-12-04 by the reprex package (v0.3.0)