I am currently working on an intrusion detection system based on video surveillance. To do this, I take a snapshot of the background of my scene (assume it is completely clean: no people or moving objects). Then I compare each frame I get from the (static) video camera against that snapshot and look for differences. I have to be able to detect any difference, not only human shapes, so I cannot use specific feature extraction.

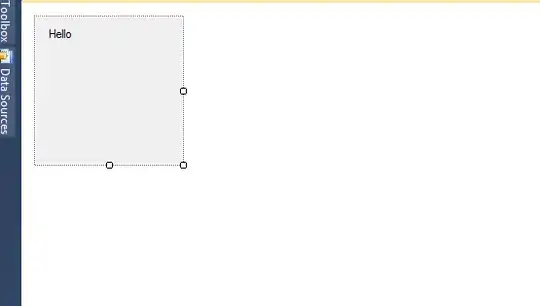

Typically, I have:

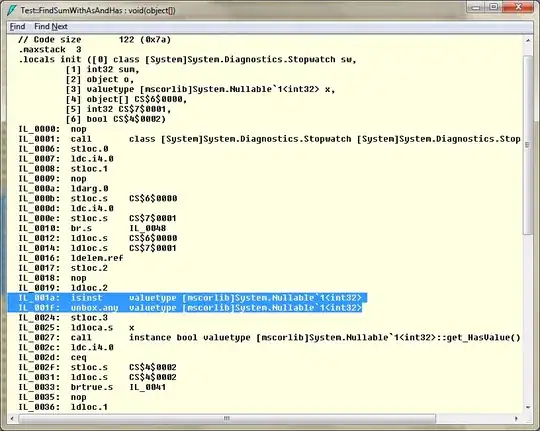

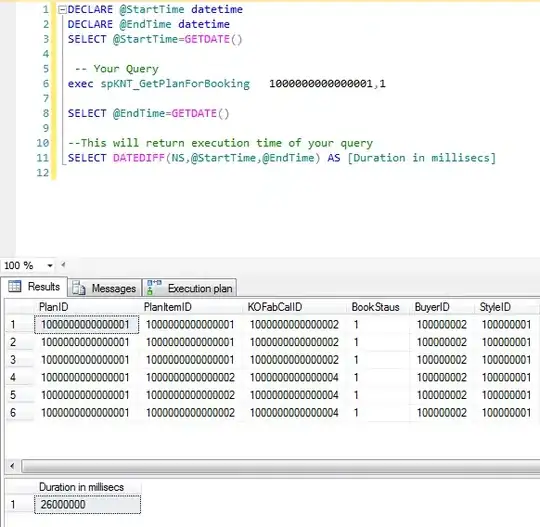

I am using OpenCV, so to compare I basically do:

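```cpp
#include <opencv2/opencv.hpp>

cv::Mat bg_frame;   // snapshot of the empty scene (grayscale)
cv::Mat cam_frame;  // current frame from the static camera (grayscale)
cv::Mat motion;

// Per-pixel absolute difference between the background and the current frame
cv::absdiff(bg_frame, cam_frame, motion);

// Pixels whose difference is greater than 80 become 255, all others become 0
cv::threshold(motion, motion, 80, 255, cv::THRESH_BINARY);

// Remove isolated noise pixels with a 3x3 rectangular erosion
cv::erode(motion, motion, cv::getStructuringElement(cv::MORPH_RECT, cv::Size(3, 3)));
```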
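In formula form, assuming single-channel 8-bit images $B$ (background snapshot) and $I$ (current frame) and the threshold $T = 80$ used above, the thresholding step produces the mask

```latex
M(x,y) =
\begin{cases}
255 & \text{if } \lvert I(x,y) - B(x,y) \rvert > T \\
0   & \text{otherwise}
\end{cases}
```

For example, a hypothetical arm pixel with gray level 150 in front of a background pixel at 100 gives $\lvert 150 - 100 \rvert = 50$, which is not greater than 80, so it is set to 0 even though it belongs to the arm.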

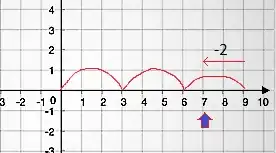

Here is the result:

As you can see, the arm is broken into strips (I guess because some parts of it are too close in intensity to the background behind them), which is unfortunately not what I want.
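The striping can be reproduced without OpenCV. The sketch below is a minimal, dependency-free emulation of the three calls on tiny 8-bit single-channel images; the helpers `absDiff`, `thresholdBinary`, `erode3x3` and `motionMask` are hypothetical names, not OpenCV's API. It assumes out-of-bounds neighbours are ignored during erosion, which I believe matches the default border behaviour of `cv::erode`.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical helpers for illustration only; these are not OpenCV functions.
using Image = std::vector<std::vector<std::uint8_t>>;

// Per-pixel |a - b| on 8-bit single-channel images (what cv::absdiff computes).
Image absDiff(const Image& a, const Image& b) {
    Image out = a;
    for (std::size_t y = 0; y < a.size(); ++y)
        for (std::size_t x = 0; x < a[y].size(); ++x)
            out[y][x] = static_cast<std::uint8_t>(std::abs(int(a[y][x]) - int(b[y][x])));
    return out;
}

// 255 where value > thresh, else 0 (the cv::THRESH_BINARY rule).
Image thresholdBinary(const Image& src, int thresh) {
    Image out = src;
    for (auto& row : out)
        for (auto& v : row)
            v = (v > thresh) ? std::uint8_t{255} : std::uint8_t{0};
    return out;
}

// 3x3 rectangular erosion: each pixel becomes the minimum of its in-bounds
// neighbours; out-of-bounds neighbours are ignored.
Image erode3x3(const Image& src) {
    assert(!src.empty() && !src[0].empty());
    const int h = static_cast<int>(src.size());
    const int w = static_cast<int>(src[0].size());
    Image out = src;
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            std::uint8_t m = 255;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx) {
                    const int yy = y + dy, xx = x + dx;
                    if (yy >= 0 && yy < h && xx >= 0 && xx < w && src[yy][xx] < m)
                        m = src[yy][xx];
                }
            out[y][x] = m;
        }
    return out;
}

// Same order of operations as in the question: absdiff -> threshold -> erode.
Image motionMask(const Image& bg, const Image& cam, int thresh) {
    return erode3x3(thresholdBinary(absDiff(bg, cam), thresh));
}
```

For example, with a 7×9 background at gray level 100 and a 5×7 "arm" at gray level 220 whose middle column is 130 (only 30 above the background), `thresholdBinary` at 80 leaves a one-column gap inside the arm, and `erode3x3` widens that gap to three columns, so `motionMask` returns two separate strips instead of one solid region.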

I thought about adding `cv::Canny()` to detect the edges and fill in the missing parts of the arm, but unfortunately (once again) it only solves the problem in a few situations, not in most of them.

Is there any algorithm or technique I could use to obtain an accurate difference report?

PS: Sorry for the images. As a new user, I do not have enough reputation to embed them properly.

EDIT: I use grayscale images here, but I am open to any solution.