I'm running DataStax Enterprise on a three-node cluster. All nodes run on identical hardware: a 2-core Intel Xeon at 2.2 GHz, 7 GB of RAM, and 4 TB in RAID 0.

This should be enough for a lightly loaded cluster storing less than 1 GB of data.

Most of the time everything is fine, but occasionally the tasks started by the OpsCenter Repair Service get stuck. When that happens, the affected node becomes unstable and its load increases.

If the node is restarted, however, the stuck tasks disappear and the load returns to normal.

Because we have very little data in the cluster, we use the `min_repair_time` parameter in `opscenterd.conf` to slow the Repair Service down so that repair cycles don't complete too often.
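For reference, a setting like the one described would look roughly like the fragment below. This is a sketch under assumptions: it assumes the Repair Service options live in a `[repair_service]` section of `opscenterd.conf` and that `min_repair_time` is expressed in seconds, and the value `86400` (one day) is purely illustrative, not the value actually used on this cluster.

```ini
# opscenterd.conf (sketch; section name and value are assumptions)
[repair_service]
# Assumed to be a lower bound, in seconds, on how long one full repair
# cycle may take; a cycle is not allowed to finish faster than this.
# 86400 (one day) is an illustrative value only.
min_repair_time = 86400
```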

What seems odd is that the stuck tasks are marked "Complete" and show 100% progress, yet they never go away. We have waited hours for them to clear, and the only fix we've found is to restart the nodes.

Edit:

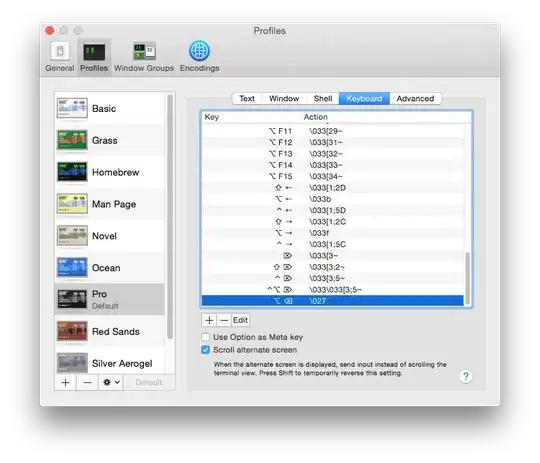

Here's the output from `nodetool compactionstats`.

Edit 2:

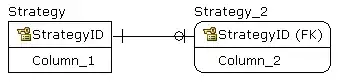

I'm running DataStax Enterprise 4.6.0 with Cassandra 2.0.11.83.

Edit 3:

This is the output from `dstat` on a node that is behaving normally.

This is the output from `dstat` on a node with a stuck compaction.

Edit 4:

Output from `iostat` on the node with the stuck compaction; note the high `iowait`.