I am working on a project that OCRs handwritten digits using a typical preprocessing-segmentation-recognition pipeline. I implemented the first two stages myself by adapting some standard OpenCV algorithms to my particular task. For the third stage (recognition), I'd like to use a ready-made classifier.
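A minimal sketch of how the three stages compose. The stage functions are hypothetical placeholders passed in as callables; in this setup only `recognize` would be swapped for a ready-made classifier.

```python
from typing import Any, Callable, List


def run_pipeline(
    page: Any,
    preprocess: Callable[[Any], Any],
    segment: Callable[[Any], List[Any]],
    recognize: Callable[[Any], int],
) -> List[int]:
    """Run the three (hypothetical) stages in order; return one label per digit."""
    cleaned = preprocess(page)                    # stage 1: e.g. denoise / binarize
    digit_images = segment(cleaned)               # stage 2: split into per-digit crops
    return [recognize(d) for d in digit_images]   # stage 3: classify each crop
```

Keeping the recognizer behind a plain callable makes it easy to try different classifiers without touching the first two stages.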

First I tried Tesseract, but it performed very poorly. So I started looking into the progress on MNIST. Given its popularity, I'd hoped it would be easy to get a good, high-accuracy classifier. Indeed, the top answer here suggests a HOG+SVM combination, which is conveniently implemented in this OpenCV sample. Unfortunately, it isn't as good as I'd hoped. It keeps misclassifying 0s as 8s (even when it is obvious to my eye that the digit is a 0), and this accounts for by far the largest share of my algorithm's mistakes.
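For reference, a minimal NumPy sketch of the kind of HOG descriptor typically paired with an SVM for digits, modeled loosely on OpenCV's Python digits sample. Assumptions: a square grayscale digit (e.g. 20x20), 16 orientation bins, 10x10 cells, and `np.gradient` in place of a Sobel filter. The SVM stage itself is not shown; the resulting vectors would be fed to something like OpenCV's `cv2.ml.SVM` or scikit-learn's `sklearn.svm.SVC`.

```python
import numpy as np


def hog_features(img, bin_n=16, cell=10):
    """HOG-style descriptor for a square grayscale digit image.

    Assumes the image side is a multiple of `cell`; uses central
    differences (np.gradient) instead of a Sobel filter.
    """
    img = np.asarray(img, dtype=float)
    gy, gx = np.gradient(img)                 # derivatives along rows (y) and columns (x)
    mag = np.hypot(gx, gy)                    # gradient magnitude
    ang = np.arctan2(gy, gx) % (2 * np.pi)    # orientation in [0, 2*pi)
    # Quantize each orientation into one of bin_n bins (clamp guards rounding to 2*pi).
    bins = np.minimum((bin_n * ang / (2 * np.pi)).astype(int), bin_n - 1)

    hists = []
    h, w = img.shape
    for r in range(0, h, cell):
        for c in range(0, w, cell):
            b = bins[r:r + cell, c:c + cell].ravel()
            m = mag[r:r + cell, c:c + cell].ravel()
            # Magnitude-weighted orientation histogram for this cell.
            hists.append(np.bincount(b, weights=m, minlength=bin_n))
    hist = np.hstack(hists)

    # Hellinger-style normalization: L1-normalize, take sqrt, then L2-normalize.
    eps = 1e-7
    hist = hist / (hist.sum() + eps)
    hist = np.sqrt(hist)
    hist = hist / (np.linalg.norm(hist) + eps)
    return hist
```

For a 20x20 input this yields 4 cells x 16 bins = 64 features. Because the descriptor only summarizes edge orientations per quadrant, a closed 0 and an 8 with a faint crossing can end up with fairly similar histograms.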

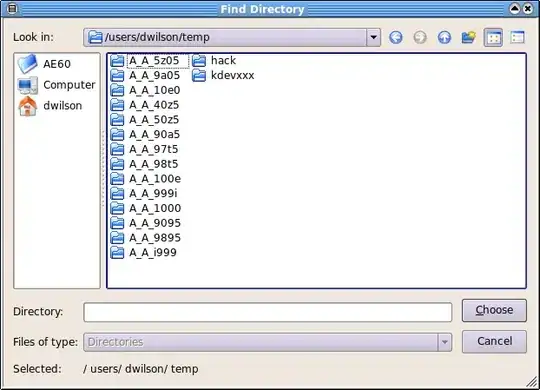

Here are some examples of the errors made by HOG+SVM:

The top row shows the original digits extracted from the image (higher-resolution images do not exist), the middle row shows the same digits deskewed, size-normalized, and centered, and the bottom row shows the output of HOG+SVM.
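The deskew step can be sketched with image moments: the shear that makes the digit upright is `skew = mu11 / mu02`, similar in spirit to the moment-based deskew in OpenCV's Python digits sample. This NumPy-only version is an assumption-laden sketch: it uses nearest-neighbour sampling and shears about the digit's centroid rather than the image centre.

```python
import numpy as np


def deskew(img):
    """Shear img horizontally so the digit's principal axis becomes vertical."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    ys, xs = np.mgrid[0:h, 0:w]               # row (y) and column (x) coordinates
    total = img.sum()
    if total == 0:
        return img.copy()                     # blank image: nothing to deskew
    xbar = (xs * img).sum() / total           # centroid
    ybar = (ys * img).sum() / total
    mu11 = ((xs - xbar) * (ys - ybar) * img).sum()   # central moments
    mu02 = ((ys - ybar) ** 2 * img).sum()
    if abs(mu02) < 1e-2:
        return img.copy()                     # (nearly) flat: skew is undefined
    skew = mu11 / mu02
    # Inverse mapping: output pixel (y, x) samples the input at x + skew * (y - ybar).
    src_x = np.rint(xs + skew * (ys - ybar)).astype(int)
    valid = (src_x >= 0) & (src_x < w)
    out = np.zeros_like(img)
    out[valid] = img[ys[valid], src_x[valid]]
    return out
```

A 45-degree stroke through the centroid becomes a vertical stroke in the centroid's column, while an already upright stroke (`mu11 == 0`) is returned unchanged.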

I tried to hot-fix this 0/8 confusion by running a kNN classifier after HOG+SVM (if HOG+SVM outputs an 8, run kNN and return its output instead), but the results were the same.
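The fallback rule described above amounts to the following sketch. `primary` and `fallback` are hypothetical callables standing in for the HOG+SVM and kNN models; any object mapping a feature vector to a label would do.

```python
from typing import Callable, Collection, Sequence

Classifier = Callable[[Sequence[float]], int]


def classify_with_fallback(
    features: Sequence[float],
    primary: Classifier,
    fallback: Classifier,
    suspicious: Collection[int] = frozenset({8}),
) -> int:
    """Use `primary`, but defer to `fallback` whenever it predicts a suspicious label."""
    label = primary(features)
    if label in suspicious:
        return fallback(features)   # e.g. re-check every predicted 8 with kNN
    return label
```

If both models are trained on the same features, they tend to make correlated mistakes, so this kind of cascade can leave the error rate essentially unchanged.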

Then I tried to adapt this pylearn2 sample, which claims a 0.45% test error on MNIST. However, after spending a week with pylearn2, I couldn't get it to work. It keeps crashing randomly, even in an environment as sterile as an Amazon EC2 g2.2xlarge instance running this image (not to mention my own machine).

I know about the existence of Caffe, but I haven't tried it.

What would be the easiest way to set up a high-accuracy handwritten digit classifier (say, with an MNIST test error below 1%)? Preferably, one that does not require an NVIDIA card to run; as far as I understand, pylearn2 does, since it relies heavily on cuda-convnet. A Python interface and the ability to run on Windows would be a nice bonus.

Note: I cannot create a new pylearn2 tag because I don't have enough reputation, but there surely should be one.