I have two images that are similar but differ in orientation and size. One example can be seen below:

Is there a way to match the two images?

I have used Procrustes shape analysis, but are there other ways?

Here's something to get you started. What you are asking for is a classic problem known as image registration. Image registration seeks the transformation (here, a homography) that maps one image onto another so that the two are aligned. This involves three steps:

- find interest points (keypoints) in both images;
- determine which keypoints in one image correspond to which keypoints in the other;
- from these point pairs, estimate a homography matrix and use it to warp one image so that it lines up with the other.
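To make the homography concrete, here is how it acts on a point. A homography is a 3-by-3 matrix H that maps a point (x, y) through homogeneous coordinates: [u; v; w] = H*[x; y; 1], and the mapped point is (u/w, v/w).

The sketch below uses plain MATLAB with no toolboxes. The matrix is a hypothetical example that rotates by 30 degrees, scales by 0.5 and translates by (10, 20), which is the kind of orientation and size change described in the question. A general homography has a non-trivial last row, and the division by w is what makes it projective. MATLAB's projective2d object uses the row-vector convention [x y 1]*T, so its T corresponds to the transpose of H written this way.

```matlab
% Hypothetical homography: rotate 30 degrees, scale by 0.5, translate by (10, 20).
theta = pi / 6;
s = 0.5;
H = [s*cos(theta), -s*sin(theta), 10;
     s*sin(theta),  s*cos(theta), 20;
     0,             0,            1];

% N-by-2 list of (x, y) points to map
pts = [0 0; 100 0; 0 50];

% Lift to homogeneous coordinates (3-by-N), apply H, then divide by w
ptsH = [pts, ones(size(pts, 1), 1)].';
mappedH = H * ptsH;
mapped = (mappedH(1:2, :) ./ mappedH(3, :)).';   % back to N-by-2 (needs R2016b+ implicit expansion)

disp(mapped)
```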

I'm going to assume that you have MATLAB's Computer Vision System Toolbox and Image Processing Toolbox. If you don't, then the answer that Maurits gave is a good alternative, and the VLFeat toolbox is one that I have also used.

First, let's read in the images directly from StackOverflow:

```matlab
im = imread('https://i.stack.imgur.com/vXqe8.png');
im2 = imread('https://i.stack.imgur.com/Pd7pt.png');
im_gray = rgb2gray(im);
im2_gray = rgb2gray(im2);
```

We also need to convert the images to grayscale, because the keypoint detectors expect a grayscale image.

Next, we can use any of the feature detection algorithms in MATLAB's Computer Vision System Toolbox (CVST). I'm going to use SURF. It is closely related to SIFT, but it uses box-filter approximations and integral images, which make it faster. The detectSURFFeatures function from the CVST accepts a grayscale image. It returns a SURFPoints object holding information about each feature point the algorithm detected (location, scale, orientation and detection strength). Let's apply it to both grayscale images:

```matlab
points = detectSURFFeatures(im_gray);
points2 = detectSURFFeatures(im2_gray);
```
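If a pair of images yields too few or too many keypoints, detectSURFFeatures accepts a 'MetricThreshold' name-value parameter. The default is 1000, and lower values return more, weaker blobs.

The sketch below is one way to inspect the detections. The threshold of 500 and keeping the 50 strongest points are arbitrary values chosen for illustration, not values tuned for these images.

```matlab
% Requires the Computer Vision System Toolbox.
im = imread('https://i.stack.imgur.com/vXqe8.png');
im_gray = rgb2gray(im);

% Lower threshold than the default (1000) -> more, weaker blobs (illustrative value)
points = detectSURFFeatures(im_gray, 'MetricThreshold', 500);

% Keep only the 50 strongest detections for display
strongest = selectStrongest(points, 50);

figure;
imshow(im);
hold on;
plot(strongest);   % marks each selected SURF point on the image
hold off;
fprintf('%d points detected, %d shown\n', points.Count, strongest.Count);
```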

Once we detect the features, it's time to extract the descriptors that describe these keypoints. That can be done with extractFeatures, which takes a grayscale image and the corresponding SURFPoints object returned by detectSURFFeatures. It returns the feature descriptors along with the valid points. These are the keypoints for which a descriptor could actually be computed; for example, points whose surrounding region extends past the image border are dropped.

```matlab
[features1, validPoints1] = extractFeatures(im_gray, points);
[features2, validPoints2] = extractFeatures(im2_gray, points2);
```

Now it's time to match the features between the two images. That can be done with matchFeatures, which takes the two sets of feature descriptors:

```matlab
indexPairs = matchFeatures(features1, features2);
```

indexPairs is an N-by-2 matrix with one row per match. The first column indexes a feature from the first image, and the second column indexes the feature from the second image that it matched. We use these indices into our valid points to get the matched keypoints themselves.

```matlab
matchedPoints1 = validPoints1(indexPairs(:, 1), :);
matchedPoints2 = validPoints2(indexPairs(:, 2), :);
```
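For intuition about what matchFeatures does, here is a simplified, toolbox-free sketch of nearest-neighbour matching with a ratio test. It is not the actual implementation of matchFeatures, which also applies a match threshold and can enforce unique matches. The 'MaxRatio' name-value parameter of matchFeatures does control a ratio test of this kind.

The descriptors here are made-up 4-D vectors; SURF descriptors are 64-D by default. The ratio of 0.6 is an illustrative value.

```matlab
% Hypothetical descriptors, one row per feature (4-D for brevity).
features1 = [1 0 0 0;
             0 1 0 0;
             0 0 1 0];
features2 = [0   0.9 0.1 0;
             1   0.1 0   0;
             0.5 0.5 0.5 0.5];
maxRatio = 0.6;   % illustrative ratio-test threshold

indexPairs = zeros(0, 2);
for i = 1:size(features1, 1)
    % Euclidean distance from descriptor i to every descriptor of image 2
    d = sqrt(sum((features2 - features1(i, :)).^2, 2));
    [dSorted, order] = sort(d);
    % Accept only if the nearest neighbour is clearly closer than the second nearest
    if dSorted(1) < maxRatio * dSorted(2)
        indexPairs(end + 1, :) = [i, order(1)]; %#ok<AGROW>
    end
end
disp(indexPairs)   % each row: [index in features1, index in features2]
```

The third descriptor has no clearly closest partner, so the ratio test rejects it rather than guessing.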

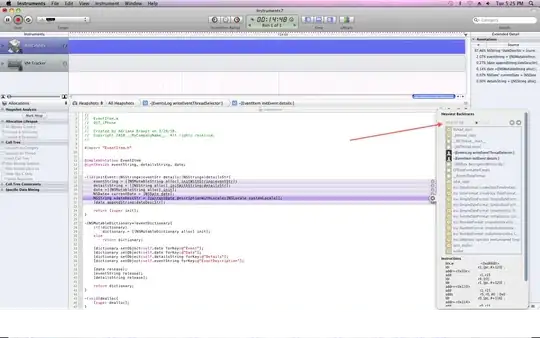

We can then visualize which points matched with showMatchedFeatures. With the 'montage' option, it places both images side by side and draws lines between the matching keypoints:

```matlab
figure;
showMatchedFeatures(im, im2, matchedPoints1, matchedPoints2, 'montage');
```

This is what I get:

It isn't perfect, but it most certainly finds consistent matches between the two images.

Next, we need to find the homography matrix and warp the images. I'm going to use estimateGeometricTransform to find a transformation that maps one set of points onto the other. As Dima noted in his comments to me below, this function estimates the transformation robustly. It uses MSAC, a variant of RANSAC, so that incorrect matches (outliers) are excluded from the estimate. We can call estimateGeometricTransform like so:

```matlab
tform = estimateGeometricTransform(matchedPoints1.Location, ...
    matchedPoints2.Location, 'projective');
```

The first input is the set of points that you want to transform. The second input is the set of reference points: the points we want the transformed points to line up with.

In our case, we want to warp the first image (the person standing up) so that it matches the second image (the person leaning on his side). So the first input is the matched points from the first image, and the second input is the matched points from the second image.

For the matched points, we pass the Location property, which holds the (x, y) coordinates of each matched point. We choose 'projective' as the transformation type, which accounts for translation, rotation, scale, shear and perspective distortion. The output is a projective2d object that stores the estimated transformation.
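To illustrate the idea behind the robust estimation, here is a toolbox-free sketch of plain RANSAC for a similarity transform. A similarity covers rotation, uniform scale and translation, which matches the "differ in orientation and size" case in the question. This is a simplified stand-in, not the MSAC code inside estimateGeometricTransform, and it fits a similarity rather than a projective model. In the toolbox call, passing 'similarity' instead of 'projective' requests that restricted model.

How the sketch works:

- Points are stored as complex numbers x + iy, so a similarity is simply w = a*z + b, where abs(a) is the scale and angle(a) the rotation. Two correspondences fix a and b exactly.
- The correspondences are synthetic, with 8 of 30 deliberately corrupted.
- The threshold and trial count are illustrative values.

```matlab
rng(0);   % reproducible random sampling

% Synthetic ground truth: w = a*z + b with points stored as complex x + iy
aTrue = 0.5 * exp(1i * pi / 6);   % uniform scale 0.5, rotation 30 degrees
bTrue = 10 + 20i;                 % translation (10, 20)
n = 30;
z = complex(100 * rand(n, 1), 100 * rand(n, 1));       % points in image 1
w = aTrue * z + bTrue;                                  % matching points in image 2
w(1:8) = complex(100 * rand(8, 1), 100 * rand(8, 1));  % 8 bad matches (outliers)

maxDistance = 1.5;   % inlier threshold in pixels (illustrative)
numTrials = 500;
bestInliers = false(n, 1);
for t = 1:numTrials
    % Minimal sample: two correspondences determine a similarity exactly
    idx = randperm(n, 2);
    dz = z(idx(2)) - z(idx(1));
    if dz == 0
        continue;
    end
    a = (w(idx(2)) - w(idx(1))) / dz;
    b = w(idx(1)) - a * z(idx(1));
    % Count the correspondences this candidate model explains
    inliers = abs(a * z + b - w) < maxDistance;
    if nnz(inliers) > nnz(bestInliers)
        bestInliers = inliers;
    end
end

% Refit on all inliers with linear least squares: w = [z, 1] * [a; b]
params = [z(bestInliers), ones(nnz(bestInliers), 1)] \ w(bestInliers);
aHat = params(1);
bHat = params(2);
fprintf('inliers: %d of %d\n', nnz(bestInliers), n);
fprintf('scale = %.4f, rotation = %.2f degrees, translation = (%.2f, %.2f)\n', ...
    abs(aHat), angle(aHat) * 180 / pi, real(bHat), imag(bHat));
```

A plain least-squares fit over all 30 pairs would be pulled off by the 8 outliers. Fitting only the largest consensus set avoids that.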

Next, we use imwarp to warp the first image so that it aligns with the second.

```matlab
out = imwarp(im, tform);
```

out contains the warped image. Let's show the second image and the warped output side by side:

```matlab
figure;
subplot(1,2,1);
imshow(im2);
subplot(1,2,2);
imshow(out);
```

This is what we get:

I'd say that's pretty good, don't you think?
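One caveat: imwarp(im, tform) on its own sizes the output to contain the whole warped image, so out is not necessarily on the same pixel grid as im2. If you want the warped image to line up pixel-for-pixel with the second image (for example, to overlay them), you can pass an 'OutputView' spatial reference built from the second image's size.

The sketch below repeats the pipeline compactly so it runs on its own. imshowpair is used only to visualize the overlap.

```matlab
% Requires the Computer Vision System Toolbox and the Image Processing Toolbox.
im  = imread('https://i.stack.imgur.com/vXqe8.png');
im2 = imread('https://i.stack.imgur.com/Pd7pt.png');
g1 = rgb2gray(im);
g2 = rgb2gray(im2);
[f1, vp1] = extractFeatures(g1, detectSURFFeatures(g1));
[f2, vp2] = extractFeatures(g2, detectSURFFeatures(g2));
pairs = matchFeatures(f1, f2);
m1 = vp1(pairs(:, 1), :);
m2 = vp2(pairs(:, 2), :);
tform = estimateGeometricTransform(m1.Location, m2.Location, 'projective');

% Render the warped image on the pixel grid of im2
[rows, cols, ~] = size(im2);
outputView = imref2d([rows cols]);
outAligned = imwarp(im, tform, 'OutputView', outputView);

% Blend the two images to check the alignment visually
figure;
imshowpair(im2, outAligned, 'blend');
```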

For your copying and pasting pleasure, here's what the full code looks like:

```matlab
% Read both images and convert them to grayscale
im = imread('https://i.stack.imgur.com/vXqe8.png');
im2 = imread('https://i.stack.imgur.com/Pd7pt.png');
im_gray = rgb2gray(im);
im2_gray = rgb2gray(im2);

% Detect SURF keypoints and extract their descriptors
points = detectSURFFeatures(im_gray);
points2 = detectSURFFeatures(im2_gray);
[features1, validPoints1] = extractFeatures(im_gray, points);
[features2, validPoints2] = extractFeatures(im2_gray, points2);

% Match descriptors and look up the matched keypoints
indexPairs = matchFeatures(features1, features2);
matchedPoints1 = validPoints1(indexPairs(:, 1), :);
matchedPoints2 = validPoints2(indexPairs(:, 2), :);

figure;
showMatchedFeatures(im, im2, matchedPoints1, matchedPoints2, 'montage');

% Robustly estimate the projective transform from image 1 to image 2
tform = estimateGeometricTransform(matchedPoints1.Location, ...
    matchedPoints2.Location, 'projective');

% Warp image 1 and show it next to image 2
out = imwarp(im, tform);

figure;
subplot(1,2,1);
imshow(im2);
subplot(1,2,2);
imshow(out);
```

Bear in mind that I used the default parameters for everything (detectSURFFeatures, matchFeatures, estimateGeometricTransform and so on). You may have to tune the parameters to get consistent results across the different pairs of images you try; I'll leave that to you as an exercise. Take a look at the documentation for each of the functions used above to see which parameters you can adjust to suit your needs.
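As a starting point for that experimentation, the sketch below shows a few of the knobs involved:

- 'MaxRatio' and 'Unique' in matchFeatures;
- 'MaxDistance' and 'MaxNumTrials' in estimateGeometricTransform;
- the extra outputs of estimateGeometricTransform, which return the inlier matches and a status code so you can see how many matches survived.

The specific values are arbitrary illustrations, not recommendations for these images.

```matlab
% Requires the Computer Vision System Toolbox. Parameter values are illustrative only.
im  = imread('https://i.stack.imgur.com/vXqe8.png');
im2 = imread('https://i.stack.imgur.com/Pd7pt.png');
im_gray  = rgb2gray(im);
im2_gray = rgb2gray(im2);

points  = detectSURFFeatures(im_gray,  'MetricThreshold', 500);
points2 = detectSURFFeatures(im2_gray, 'MetricThreshold', 500);
[features1, validPoints1] = extractFeatures(im_gray,  points);
[features2, validPoints2] = extractFeatures(im2_gray, points2);

% More permissive ratio test than the default (0.6), and one-to-one matches only
indexPairs = matchFeatures(features1, features2, 'MaxRatio', 0.7, 'Unique', true);
matched1 = validPoints1(indexPairs(:, 1), :).Location;
matched2 = validPoints2(indexPairs(:, 2), :).Location;

% Request the inliers and a status code (0 = success) instead of an error on failure
[tform, inliers1, inliers2, status] = estimateGeometricTransform( ...
    matched1, matched2, 'projective', 'MaxDistance', 2, 'MaxNumTrials', 2000);

if status == 0
    fprintf('%d of %d matches kept as inliers\n', size(inliers1, 1), size(matched1, 1));
    figure;
    showMatchedFeatures(im, im2, inliers1, inliers2, 'montage');
else
    warning('estimateGeometricTransform returned status %d', status);
end
```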

Have fun, and good luck!

Check out the Find Image Rotation and Scale Using Automated Feature Matching example in the Computer Vision System Toolbox.

It shows how to detect interest points, extract and match feature descriptors, and compute the transformation between the two images.
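The core idea of that example, as I understand it, is to fit a 'similarity' transform (rotation, uniform scale and translation) and read the scale and angle back out of its matrix. Below is a sketch of that idea adapted to the images in the question, not a copy of the MathWorks code.

The angle's sign depends on MATLAB's row-vector matrix convention ([x' y' 1] = [x y 1]*T) and on image coordinates having y pointing down. Check the sign against an image rotated by a known amount (for example with imrotate) before relying on it.

```matlab
% Requires the Computer Vision System Toolbox.
im  = imread('https://i.stack.imgur.com/vXqe8.png');
im2 = imread('https://i.stack.imgur.com/Pd7pt.png');
g1 = rgb2gray(im);
g2 = rgb2gray(im2);
[f1, vp1] = extractFeatures(g1, detectSURFFeatures(g1));
[f2, vp2] = extractFeatures(g2, detectSURFFeatures(g2));
pairs = matchFeatures(f1, f2);
matched1 = vp1(pairs(:, 1), :);
matched2 = vp2(pairs(:, 2), :);

% Similarity transform mapping points of im onto points of im2
tform = estimateGeometricTransform(matched1.Location, matched2.Location, 'similarity');

% With [x' y' 1] = [x y 1] * T: T(1,1) = s*cos(theta), T(2,1) = s*sin(theta),
% where theta is counterclockwise as displayed (y axis pointing down)
T = tform.T;
scaleRecovered = hypot(T(1,1), T(2,1));
thetaRecovered = atan2(T(2,1), T(1,1)) * 180 / pi;
fprintf('scale = %.3f, rotation = %.1f degrees\n', scaleRecovered, thetaRecovered);
```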

You can get a reasonable result doing the following:

vl_ubcmatch

The initial result:
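For readers without the MATLAB toolboxes, a minimal VLFeat sketch of SIFT detection followed by vl_ubcmatch matching might look like the following. It is an illustration, not a reconstruction of the steps in the original answer.

Assumptions:

- VLFeat is installed and on the path (vl_setup has been run).
- vl_sift expects a single-precision grayscale image.
- The optional third argument of vl_ubcmatch is its ratio-test threshold; 1.5 is the default, passed explicitly here for visibility.

```matlab
% Requires VLFeat (run vl_setup first).
im  = imread('https://i.stack.imgur.com/vXqe8.png');
im2 = imread('https://i.stack.imgur.com/Pd7pt.png');

% vl_sift wants a single-precision grayscale image
I1 = single(rgb2gray(im));
I2 = single(rgb2gray(im2));

% f: 4-by-N frames [x; y; scale; orientation], d: 128-by-N descriptors
[f1, d1] = vl_sift(I1);
[f2, d2] = vl_sift(I2);

% matches is 2-by-M: row 1 indexes frames of image 1, row 2 frames of image 2
matches = vl_ubcmatch(d1, d2, 1.5);

% Matched (x, y) locations, one row per match
loc1 = f1(1:2, matches(1, :)).';
loc2 = f2(1:2, matches(2, :)).';
fprintf('%d SIFT matches\n', size(matches, 2));
```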

This is a rather different approach from the others. Feature-based registration methods are more robust, but my approach may still be useful for your application, so I'm writing it down here.

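Histogram intersection: calculate the intersection of the two grayscale histograms and normalize it by the total count of the model histogram (i.e. the number of pixels in the model image). This gives a value between 0 and 1. As a second measure, the code below also computes the correlation coefficient between the two histograms with corr2.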

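```matlab
% Local copies of the two images from the question
% ('img' rather than 'image', which would shadow MATLAB's image function)
img   = imread('Pd7pt.png');
model = imread('vXqe8.png');

grImage = rgb2gray(img);
grModel = rgb2gray(model);

% 256-bin gray-level histograms
hImage = imhist(grImage);
hModel = imhist(grModel);

% Histogram intersection normalized by the model's pixel count -> value in [0, 1]
normhistInterMeasure = sum(min(hImage, hModel))/sum(hModel)

% Correlation coefficient between the two histograms
corrMeasure = corr2(hImage, hModel)
```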

For intersection and correlation I get 0.2492 and 0.9999 respectively.
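Because the two images differ in size, their raw histograms have different totals, and this caps the intersection measure above. When the image has fewer pixels than the model, the score cannot exceed their ratio even if the gray-level distributions are identical.

One variant normalizes each histogram to sum to 1 before intersecting, so the score depends only on the gray-level distributions. The sketch below shows it in plain MATLAB, using histcounts instead of imhist and made-up pixel data. Like the measure above, it only says how similar the images are; it does not align them.

```matlab
% Hypothetical 8-bit "images" of different sizes
imgA = uint8([0 0 128 255; 0 128 128 255]);   % 8 pixels
imgB = uint8([0 128 255 255]);                % 4 pixels

edges = 0:256;                                % one bin per gray level 0..255
hA = histcounts(double(imgA(:)), edges);
hB = histcounts(double(imgB(:)), edges);

% Normalize each histogram to sum to 1 so image size no longer matters
pA = hA / sum(hA);
pB = hB / sum(hB);

% 1 means identical gray-level distributions, 0 means no overlap at all
intersection = sum(min(pA, pB));
disp(intersection)
```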