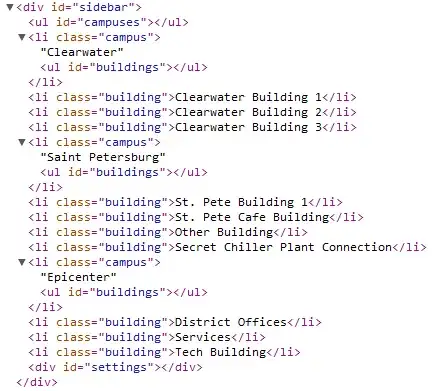

I am running Spark with YARN (Hadoop 2.6) as the cluster manager. YARN is running in pseudo-distributed mode. I started the Spark shell with 6 executors and expected to see 6:

```
spark-shell --master yarn --num-executors 6
```

However, the Spark Web UI shows only 4 executors.

Any reason for this?

PS: I ran the `nproc` command on my Ubuntu (14.04) machine; the result is below. I believe this means my system has 8 cores.

```
mountain@mountain:~$ nproc
8
```
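For reference, the memory arithmetic YARN applies when placing executor containers can be sketched roughly as follows. This is a memory-only sketch, and every value in it is an assumed stock default (Hadoop 2.x Capacity Scheduler, Spark 1.x with a 1g executor heap and a 512m application master in client mode), not something read from this cluster's `yarn-site.xml` or `spark-defaults.conf`.

```bash
#!/usr/bin/env bash
# Rough sketch: how many Spark executor containers fit on one YARN
# NodeManager, counting memory only. All values below are ASSUMED
# defaults (Hadoop 2.x / Spark 1.x), not read from this cluster.

node_mem_mb=8192        # yarn.nodemanager.resource.memory-mb (assumed default)
min_alloc_mb=1024       # yarn.scheduler.minimum-allocation-mb (assumed default)
executor_mem_mb=1024    # spark.executor.memory (assumed 1g)
am_mem_mb=512           # spark.yarn.am.memory (assumed 512m, client mode)
requested=6             # value passed to --num-executors

# Assumed Spark 1.x overhead rule: max(384 MB, 7% of the heap).
overhead() {
  local heap=$1
  local pct=$(( heap * 7 / 100 ))
  if (( pct > 384 )); then echo "$pct"; else echo 384; fi
}

# The Capacity Scheduler rounds each request up to a multiple of the
# minimum allocation.
round_up() {
  local req=$1
  echo $(( (req + min_alloc_mb - 1) / min_alloc_mb * min_alloc_mb ))
}

am_container=$(round_up $(( am_mem_mb + $(overhead "$am_mem_mb") )))
exec_container=$(round_up $(( executor_mem_mb + $(overhead "$executor_mem_mb") )))

# Executors share whatever memory is left after the AM container.
fit=$(( (node_mem_mb - am_container) / exec_container ))
launched=$(( fit < requested ? fit : requested ))

echo "AM container:       ${am_container} MB"
echo "Executor container: ${exec_container} MB"
echo "Executors launched: ${launched} of ${requested}"
```

Under these assumptions each executor container is rounded up to 2048 MB, so only 3 fit next to the 1024 MB AM container; because the Executors tab of the Web UI also lists the driver, that would appear as 4 entries. If the real configuration values differ, the numbers change accordingly.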