YARN (Yet Another Resource Negotiator) is a key component of the second-generation Apache Hadoop infrastructure. Do not use this for the JavaScript/Node.js Yarn package manager (use [yarnpkg] instead). Originally described by Apache as a redesigned resource manager, YARN is now characterized as a large-scale, distributed operating system for big data applications, including next-generation MapReduce (MR2).

In the Big Data business, running fewer, larger clusters is cheaper than running many small clusters. Larger clusters also process larger data sets and support more jobs and users.

The Apache Hadoop MapReduce framework has hit a scalability limit at around 4,000 machines. We are developing the next generation of Apache Hadoop MapReduce, which factors the framework into a generic resource scheduler and a per-job, user-defined component that manages the application's execution. Since downtime is more expensive at scale, high availability is built in from the beginning, as are security and multi-tenancy to support many users on the larger clusters. The new architecture will also increase innovation, agility and hardware utilization.

Background

The current implementation of the Hadoop MapReduce framework is showing its age.

Given observed trends in cluster sizes and workloads, the MapReduce JobTracker needs a drastic overhaul to address several deficiencies in its scalability, memory consumption, threading model, reliability and performance. Over the last 5 years there have been spot fixes, but lately these have come at an ever-growing cost, as evidenced by the increasing difficulty of making changes to the framework. The architectural deficiencies and the corrective measures are both old and well understood, going as far back as late 2007, when we documented the proposed fix on MapReduce's JIRA: MAPREDUCE-278.

From an operational perspective, the current Hadoop MapReduce framework forces a system-wide upgrade for any change, minor or major, such as bug fixes, performance improvements and features. Worse, it forces every single customer of the cluster to upgrade at the same time, regardless of their interests; this wastes expensive cycles as customers validate the new version of Hadoop for their applications.

The Next Generation of MapReduce

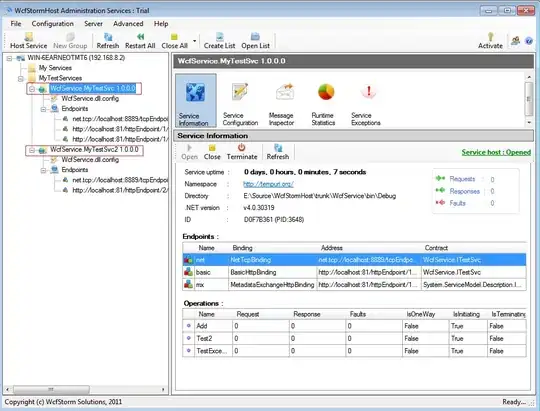

Figure: YARN Architecture

The fundamental idea of the re-architecture is to divide the two major functions of the JobTracker, resource management and job scheduling/monitoring, into separate components. The new ResourceManager manages the global assignment of compute resources to applications, and the per-application ApplicationMaster manages the application's scheduling and coordination. An application is either a single job in the classic MapReduce sense or a DAG of such jobs. The ResourceManager and the per-machine NodeManager server, which manages the user processes on that machine, form the computation fabric. The per-application ApplicationMaster is, in effect, a framework-specific library and is tasked with negotiating resources from the ResourceManager and working with the NodeManager(s) to execute and monitor the tasks.
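The division of roles can be illustrated with a toy model. This is only a sketch under simplifying assumptions: the class and method names (`allocate`, `launch`, `run`) are hypothetical and are not the Hadoop API, memory is the only resource, and the placement rule (most free memory first) is an arbitrary choice for illustration.

```python
class NodeManager:
    """Hypothetical per-machine agent: tracks free memory and launched containers."""

    def __init__(self, host, memory_mb):
        self.host = host
        self.free_mb = memory_mb
        self.containers = []

    def launch(self, app_id, memory_mb):
        # Start a container for the application and account for its memory.
        self.free_mb -= memory_mb
        self.containers.append((app_id, memory_mb))


class ResourceManager:
    """Hypothetical global allocator: decides where a container may run, nothing more."""

    def __init__(self, nodes):
        self.nodes = nodes

    def allocate(self, app_id, memory_mb):
        # Assumed policy: choose the node with the most free memory that fits the request.
        candidates = [n for n in self.nodes if n.free_mb >= memory_mb]
        if not candidates:
            return None
        return max(candidates, key=lambda n: n.free_mb)


class ApplicationMaster:
    """Hypothetical per-application coordinator: asks for resources, then launches tasks."""

    def __init__(self, app_id, resource_manager):
        self.app_id = app_id
        self.rm = resource_manager

    def run(self, task_memory_mb, task_count):
        placed = []
        for _ in range(task_count):
            node = self.rm.allocate(self.app_id, task_memory_mb)
            if node is None:
                break  # cluster is full; a real application would wait and retry
            node.launch(self.app_id, task_memory_mb)
            placed.append(node.host)
        return placed


nodes = [NodeManager("node1", 4096), NodeManager("node2", 2048)]
rm = ResourceManager(nodes)
am = ApplicationMaster("app-1", rm)
placed = am.run(task_memory_mb=2048, task_count=4)
print(placed)
```

In this toy, the fourth task is not placed because no node has 2048 MB free after the first three containers are launched. The point of the sketch is the separation: the allocator never looks at task state, and the application coordinator never decides cluster-wide placement.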

The ResourceManager supports hierarchical application queues, and those queues can be guaranteed a percentage of the cluster resources. It is a pure scheduler in the sense that it performs no monitoring or tracking of status for the application. It also offers no guarantees about restarting tasks that fail due to either application failure or hardware failure.
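To make the idea of hierarchical queues with guaranteed percentages concrete, the following sketch computes each queue's guaranteed share of the whole cluster from percentages given relative to the parent queue. The `Queue` class, the queue names and the percentages are all hypothetical, chosen only for illustration; this is not how any particular scheduler stores its configuration.

```python
from dataclasses import dataclass, field


@dataclass
class Queue:
    """Hypothetical queue node; `percent` is its guaranteed share of the parent queue."""
    name: str
    percent: float
    children: list = field(default_factory=list)


def absolute_shares(queue, parent_share=100.0, prefix=""):
    """Return {queue path: guaranteed percentage of the whole cluster}."""
    path = f"{prefix}.{queue.name}" if prefix else queue.name
    share = parent_share * queue.percent / 100.0
    shares = {path: share}
    for child in queue.children:
        shares.update(absolute_shares(child, share, path))
    return shares


# Illustrative hierarchy: production gets 70% of the cluster, split 60/40 below it.
root = Queue("root", 100, [
    Queue("production", 70, [Queue("etl", 60), Queue("reports", 40)]),
    Queue("research", 30),
])

shares = absolute_shares(root)
for path, share in shares.items():
    print(f"{path}: {share:.1f}%")
```

With these assumed numbers, a queue guaranteed 60% of a parent that itself holds 70% of the cluster ends up with 42% of the cluster overall.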

The ResourceManager performs its scheduling function based on the resource requirements of the applications; each application has multiple resource request types that represent the resources required for containers. The resource requests include memory, CPU, disk, network, etc. Note that this is a significant change from the current model of fixed-type slots in Hadoop MapReduce, which has a significant negative impact on cluster utilization. The ResourceManager has a scheduler policy plug-in, which is responsible for partitioning the cluster resources among the various queues, applications, etc. Scheduler plug-ins can be based, for example, on the current CapacityScheduler and FairScheduler.
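A small worked example shows why fixed-type slots can hurt utilization. The numbers here are assumptions made up for illustration (a node with 8 map slots and 4 reduce slots, each slot corresponding to 2048 MB, during a phase with only map tasks), and both functions are hypothetical helpers rather than part of Hadoop.

```python
def slot_utilization(map_slots, reduce_slots, map_tasks, reduce_tasks):
    """Fraction of slots in use when map and reduce slots are not interchangeable."""
    used = min(map_slots, map_tasks) + min(reduce_slots, reduce_tasks)
    return used / (map_slots + reduce_slots)


def container_utilization(node_memory_mb, requests_mb):
    """Fraction of memory in use when requests are granted in order while they fit."""
    free = node_memory_mb
    for request in requests_mb:
        if request <= free:
            free -= request
    return (node_memory_mb - free) / node_memory_mb


# Map-only phase: 12 runnable map tasks, no reduce tasks yet.
slot_util = slot_utilization(map_slots=8, reduce_slots=4, map_tasks=12, reduce_tasks=0)

# Same node modelled as 12 * 2048 MB of memory, same 12 tasks at 2048 MB each.
container_util = container_utilization(12 * 2048, [2048] * 12)

print(f"fixed slots: {slot_util:.0%}, resource requests: {container_util:.0%}")
```

Under these assumptions the four reduce slots sit idle in the slot model, while the resource-request model lets all twelve tasks run on the same hardware.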

The NodeManager is the per-machine framework agent that is responsible for launching the applications' containers, monitoring their resource usage (CPU, memory, disk, network) and reporting it to the Scheduler.

The per-application ApplicationMaster is responsible for negotiating appropriate resource containers from the Scheduler, launching tasks, tracking their status, monitoring their progress, and handling task failures.
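The responsibilities listed above can be sketched as a simple control loop. This is a minimal illustration, not the MapReduce ApplicationMaster: `run_application`, `request_container`, `run_task` and the `max_attempts` limit are hypothetical names and parameters, and tasks run one at a time for clarity.

```python
def run_application(tasks, request_container, run_task, max_attempts=3):
    """Run each task, requesting a fresh container whenever an attempt fails."""
    status = {}
    for task in tasks:
        for attempt in range(1, max_attempts + 1):
            container = request_container(task)   # negotiate a container
            if run_task(task, container):          # launch and wait for the outcome
                status[task] = ("SUCCEEDED", attempt)
                break
        else:
            # Every attempt failed; record the task as failed.
            status[task] = ("FAILED", max_attempts)
    return status


# Stand-ins for the scheduler and the node agent, purely for demonstration.
attempts_seen = {}


def request_container(task):
    return f"container-for-{task}"


def run_task(task, container):
    attempts_seen[task] = attempts_seen.get(task, 0) + 1
    if task == "map-1":
        return attempts_seen[task] >= 2   # fails once, then succeeds
    if task == "map-2":
        return False                      # always fails
    return True


status = run_application(["map-0", "map-1", "map-2"], request_container, run_task)
print(status)
```

Because failure handling lives in the per-application component, each framework can choose its own retry policy without changes to the cluster-wide scheduler.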

MRv2 maintains API compatibility with the previous stable release (hadoop-1.x). This means that all MapReduce jobs should still run unchanged on top of MRv2 with just a recompile.