I'm attempting to transform a pandas DataFrame object into a new object that contains a classification of the points based upon some simple thresholds:

- Value transformed to

0if the point isNaN - Value transformed to

1if the point is negative or 0 - Value transformed to

2if it falls outside certain criteria based on the entire column - Value is

3otherwise

Here is a very simple self-contained example:

import pandas as pd

import numpy as np

df=pd.DataFrame({'a':[np.nan,1000000,3,4,5,0,-7,9,10],'b':[2,3,-4,5,6,1000000,7,9,np.nan]})

print(df)

The transformation process created so far:

#Loop through and find points greater than the mean -- in this simple example, these are the 'outliers'

outliers = pd.DataFrame()

for datapoint in df.columns:

tempser = pd.DataFrame(df[datapoint][np.abs(df[datapoint]) > (df[datapoint].mean())])

outliers = pd.merge(outliers, tempser, right_index=True, left_index=True, how='outer')

outliers[outliers.isnull() == False] = 2

#Classify everything else as "3"

df[df > 0] = 3

#Classify negative and zero points as a "1"

df[df <= 0] = 1

#Update with the outliers

df.update(outliers)

#Everything else is a "0"

df.fillna(value=0, inplace=True)

Resulting in:

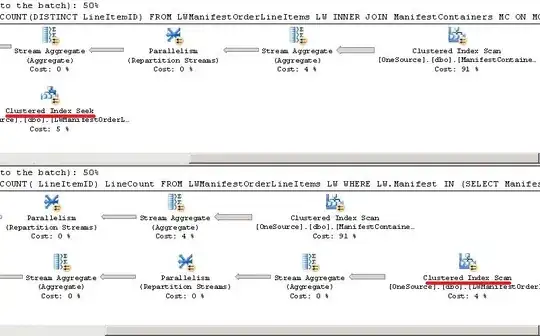

I have tried to use .applymap() and/or .groupby() in order to speed up the process with no luck. I found some guidance in this answer however, I'm still unsure how .groupby() is useful when you're not grouping within a pandas column.