I have a file containing logged events. Each entry has a time and latency. I'm interested in plotting the cumulative distribution function of the latencies. I'm most interested in tail latencies so I want the plot to have a logarithmic y-axis. I'm interested in the latencies at the following percentiles: 90th, 99th, 99.9th, 99.99th, and 99.999th. Here is my code so far that generates a regular CDF plot:

# retrieve event times and latencies from the file

times, latencies = read_in_data_from_file('myfile.csv')

# compute the CDF

cdfx = numpy.sort(latencies)

cdfy = numpy.linspace(1 / len(latencies), 1.0, len(latencies))

# plot the CDF

plt.plot(cdfx, cdfy)

plt.show()

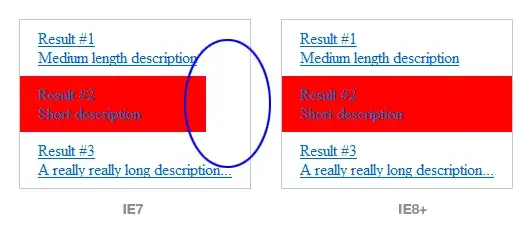

I know what I want the plot to look like, but I've struggled to get it. I want it to look like this (I did not generate this plot):

Making the x-axis logarithmic is simple. The y-axis is the one giving me problems. Using set_yscale('log') doesn't work because it wants to use powers of 10. I really want the y-axis to have the same ticklabels as this plot.

How can I get my data into a logarithmic plot like this one?

EDIT:

If I set the yscale to 'log', and ylim to [0.1, 1], I get the following plot:

The problem is that a typical log scale plot on a data set ranging from 0 to 1 will focus on values close to zero. Instead, I want to focus on the values close to 1.