I want to use LZO compression in MapReduce, but I get an error when I run my MapReduce job. I am using Ubuntu, the job is written in Java, and I am only trying to run it on my local machine. The first error is

ERROR lzo.GPLNativeCodeLoader: Could not load native gpl library

and further down in the output

ERROR lzo.LzoCodec: Cannot load native-lzo without native-hadoop

and then

java.lang.RuntimeException: native-lzo library not available

I have followed a number of instructions, both online and in books, on how to download and configure the files needed for LZO compression. Here you can see my hadoop-lzo jar file in the lib folder

I have changed my configuration as follows. Here is my core-site.xml

    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://localhost:9000</value>
      </property>
      <property>
        <name>io.compression.codecs</name>
        <value>com.hadoop.compression.lzo.LzoCodec,com.hadoop.compression.lzo.LzopCodec</value>
      </property>
      <property>
        <name>io.compression.codec.lzo.class</name>
        <value>com.hadoop.compression.lzo.LzoCodec</value>
      </property>
      <property>
        <name>mapred.output.compress</name>
        <value>true</value>
      </property>
      <property>
        <name>mapred.output.compression.codec</name>
        <value>com.hadoop.compression.lzo.LzopCodec</value>
      </property>
    </configuration>

and my mapred-site.xml

    <configuration>
      <property>
        <name>mapred.job.tracker</name>
        <value>localhost:9001</value>
      </property>
      <property>
        <name>mapred.compress.map.output</name>
        <value>true</value>
      </property>
      <property>
        <name>mapred.map.output.compression.codec</name>
        <value>com.hadoop.compression.lzo.LzoCodec</value>
      </property>
      <property>
        <name>mapred.child.env</name>
        <value>JAVA_LIBRARY_PATH=$JAVA_LIBRARY_PATH:/home/matthew/hadoop/lib/native/lib/lib</value>
      </property>
    </configuration>

I have also added these lines to my hadoop-env.sh, which is in the same conf folder

export HADOOP_CLASSPATH=/home/hadoop/lib/hadoop-lzo-0.4.13.jar

export JAVA_LIBRARY=/home/hadoop/lib/native/lib/lib

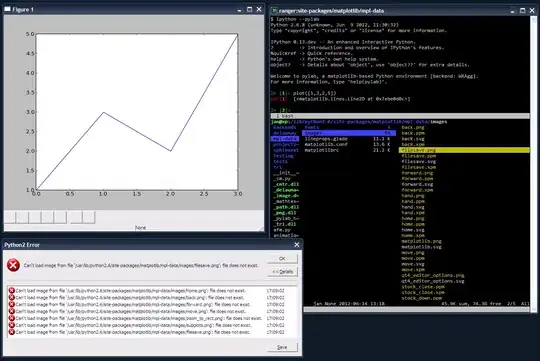

If you are interested in what is in /home/hadoop/lib/native/lib/lib

For what it's worth, here is my Driver class, which is where the output compression is set up

    //import java.net.URI;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    //import org.apache.hadoop.filecache.DistributedCache;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    //import org.apache.hadoop.io.compress.CompressionCodec;
    import com.hadoop.compression.lzo.LzopCodec;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    //import org.apache.hadoop.mapreduce.lib.output.SequenceFileOutputFormat;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    public class FuzzyJoinDriver extends Configured implements Tool{

        public static void main(String[] args) throws Exception {
            int exitCode = ToolRunner.run(new Configuration(), new FuzzyJoinDriver(),args);
            System.exit(exitCode);
        }

        @Override
        public int run(String[] args) throws Exception {
            if (args.length != 2) {
                System.err.println("Usage: FuzzyJoinDriver <input path> <output path>");
                System.exit(-1);
            }

            Configuration conf = new Configuration();
            //Used to compress map output
            //conf.setBoolean("mapred.compres.map.output", true);
            //conf.setClass("mapred.map.output.compression.code", GzipCodec.class, CompressionCodec.class);

            Job job = new Job(conf);
            job.setJarByClass(FuzzyJoinDriver.class);
            job.setJobName("Fuzzy Join");

            //Distributed Cache
            //DistributedCache.addCacheFile(new URI("/cache/ReducerCount.txt"), job.getConfiguration());

            FileInputFormat.addInputPath(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            job.setMapperClass(FuzzyJoinMapper.class);
            job.setReducerClass(FuzzyJoinReducer.class);
            job.setMapOutputKeyClass(IntWritable.class);
            job.setMapOutputValueClass(RelationTuple.class);
            job.setPartitionerClass(JoinKeyPartitioner.class);
            job.setOutputKeyClass(NullWritable.class);
            job.setOutputValueClass(Text.class);

            //Used to compress output from reducer
            FileOutputFormat.setCompressOutput(job, true);
            FileOutputFormat.setOutputCompressorClass(job, LzopCodec.class);
            //SequenceFileOutputFormat.setOutputCompressionType(job, org.apache.hadoop.io.SequenceFile.CompressionType.BLOCK);

            return(job.waitForCompletion(true) ? 0 : 1);
        }
    }

It compiles with no errors.
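
Since all three errors are about native libraries, one thing that can be checked outside of Hadoop is whether the JVM's `java.library.path` actually contains the native files. Below is a minimal sketch of such a check using only the JDK. It assumes the libraries are named `gplcompression` (for hadoop-lzo) and `hadoop` (for native-hadoop), which on Linux would mean `libgplcompression.so` and `libhadoop.so`. The class name `NativeLibCheck` and the helper `findLibrary` are made up for this example and are not part of Hadoop or hadoop-lzo.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

public class NativeLibCheck {

    // Returns every directory on libraryPath that contains the platform-specific
    // file for libName (System.mapLibraryName turns "foo" into "libfoo.so" on Linux).
    static List<String> findLibrary(String libraryPath, String libName) {
        String fileName = System.mapLibraryName(libName);
        List<String> hits = new ArrayList<String>();
        if (libraryPath == null) {
            return hits;
        }
        for (String dir : libraryPath.split(File.pathSeparator)) {
            if (dir.isEmpty()) {
                continue; // skip empty entries such as a leading ':'
            }
            if (new File(dir, fileName).isFile()) {
                hits.add(dir);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        String path = System.getProperty("java.library.path");
        System.out.println("java.library.path = " + path);
        // Assumed library names; adjust if the native files are named differently.
        for (String lib : new String[] {"gplcompression", "hadoop"}) {
            System.out.println(lib + " found in: " + findLibrary(path, lib));
        }
    }
}
```

It can be run against a specific directory with the standard JVM option, for example `java -Djava.library.path=/home/hadoop/lib/native/lib/lib NativeLibCheck`. An empty list for either name would mean the JVM cannot find that library in the directories it was given. It only checks that the files exist, not that they load correctly.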

I worry that my lack of understanding of the configuration and other steps is leading me down the wrong path and perhaps I'm missing something simple to those with a better comprehension of the matter than I have. Thanks for making it this far. I know it's a lengthy post.