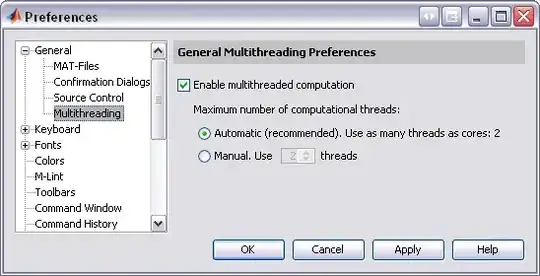

EDIT 2: I changed the amount of executor memory and found that the number of executors is limited by the amount of memory allocated. spark.executor.memory is the amount of memory allocated to each executor, not the total amount of memory available to all executors. I now have 11 executors spawning. The remaining issue is that each executor still uses only one core.

I am running Spark on an EMR cluster, and for some reason I can't get jobs to use the full system resources. I started the cluster with Spark's maximizeResourceAllocation set to true, but I have since edited the configuration to:

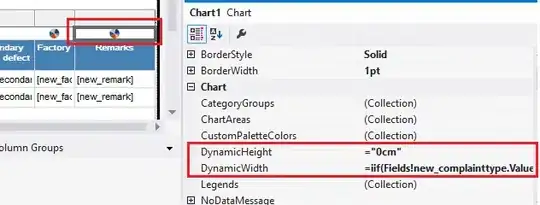

spark.executor.instances 11

spark.executor.cores 5

spark.executor.memory 10g

spark.default.parallelism 16

spark.yarn.executor.memoryOverhead 1049

The cluster has four worker nodes with 8 cores x 2 threads, 16GB RAM each:

I chose the spark settings based on this post.

As I understand it, that should start up 11 executors, about 3 on each worker node (I might have misunderstood where the application master runs), and each executor should have access to 5 cores.
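To sanity-check that expectation, here is a rough sketch of the per-node arithmetic using the settings listed above. It assumes that YARN can hand out the full 16GB and all 16 threads of each worker, which is an upper bound (the NodeManager normally reserves some memory), and the function names are made up for this illustration.

```python
# Rough capacity estimate from the settings in the question.
# Assumption: YARN may use all memory and all threads of every worker node.
NODE_MEMORY_MB = 16 * 1024      # 16GB RAM per worker
NODE_VCORES = 8 * 2             # 8 cores x 2 threads
WORKER_NODES = 4

EXECUTOR_MEMORY_MB = 10 * 1024  # spark.executor.memory 10g
MEMORY_OVERHEAD_MB = 1049       # spark.yarn.executor.memoryOverhead
EXECUTOR_CORES = 5              # spark.executor.cores


def executors_per_node(node_memory_mb, node_vcores,
                       executor_memory_mb, overhead_mb, executor_cores):
    """Executors that fit on one node, limited by memory and by cores."""
    container_mb = executor_memory_mb + overhead_mb  # one YARN container
    by_memory = node_memory_mb // container_mb
    by_cores = node_vcores // executor_cores
    return min(by_memory, by_cores)


def max_executor_memory_mb(node_memory_mb, executors, overhead_mb):
    """Largest spark.executor.memory that still fits `executors` per node."""
    return node_memory_mb // executors - overhead_mb


per_node = executors_per_node(NODE_MEMORY_MB, NODE_VCORES,
                              EXECUTOR_MEMORY_MB, MEMORY_OVERHEAD_MB,
                              EXECUTOR_CORES)
print(per_node, per_node * WORKER_NODES)   # prints: 1 4
print(max_executor_memory_mb(NODE_MEMORY_MB, 3, MEMORY_OVERHEAD_MB))  # prints: 4412
```

Under these assumptions a 10g executor plus its overhead needs about 11GB, so only one fits on a 16GB node even though the cores would allow three. Fitting three per node would need roughly 4.3GB or less of executor memory each, and in practice somewhat less than that.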

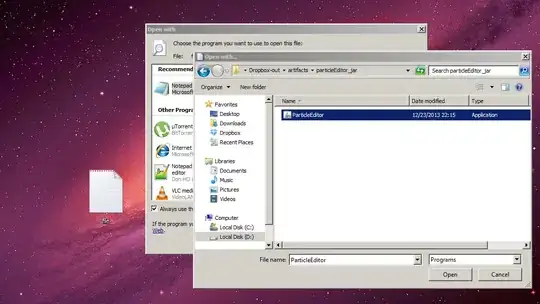

However, when I submit a job via spark-submit, I see the following:

This appears to show only 4 cores being used. Further, only three executors are spawned:

Is there something that I am missing here? Even if I am not understanding the configuration parameters correctly, I don't see why this should be the case.

Edit: I have tried submitting my job with a number of different options, but I get the same behavior: `spark-submit --deploy-mode client --master yarn[*] --num-executors 11 --executor-cores 5 --verbose parse_ad_logs.py`

I included yarn[*] based on the linked possible duplicate question. There wasn't much relevant information I could find there, though.