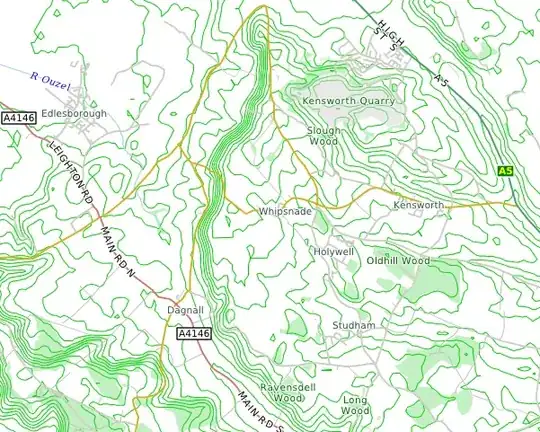

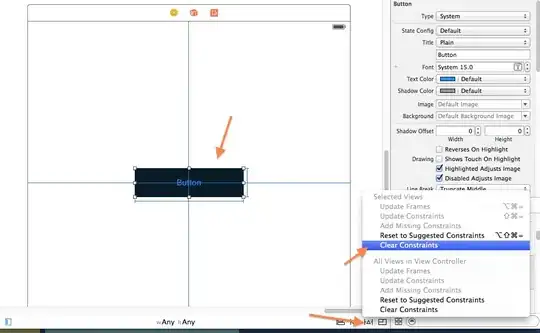

I am new to OpenCV4Android. Here is some code I wrote to detect a blue-colored blob in an image. Of the images in this post, image 1 is the one displayed on my laptop screen. I ran the application, and the frame captured by the OpenCV camera is image 2. You can look at the code to see what the rest of the images are. (As you can see in the code, all the images are saved to the SD card.)

I have the following questions:

Why has the light-blue blob turned light yellow in the RGBA frame captured by the camera (image 2)?

I created a `boundingRect` around the largest blue-colored blob, and then an ROI by calling `rgbaFrame.submat(detectedBlobRoi)`. However, as you can see in the last image, the result looks like just a couple of grey pixels. I expected it to be the blue sphere separated from the rest of the image.

What am I missing or doing wrong?

CODE:

```java
private void detectColoredBlob() {

    Highgui.imwrite("/mnt/sdcard/DCIM/rgbaFrame.jpg", rgbaFrame); // check

    Mat hsvImage = new Mat();
    Imgproc.cvtColor(rgbaFrame, hsvImage, Imgproc.COLOR_RGB2HSV_FULL);
    Highgui.imwrite("/mnt/sdcard/DCIM/hsvImage.jpg", hsvImage); // check

    Mat maskedImage = new Mat();
    Scalar lowerThreshold = new Scalar(170, 0, 0);
    Scalar upperThreshold = new Scalar(270, 255, 255);
    Core.inRange(hsvImage, lowerThreshold, upperThreshold, maskedImage);
    Highgui.imwrite("/mnt/sdcard/DCIM/maskedImage.jpg", maskedImage); // check

    Mat dilatedMat = new Mat();
    Imgproc.dilate(maskedImage, dilatedMat, new Mat());
    Highgui.imwrite("/mnt/sdcard/DCIM/dilatedMat.jpg", dilatedMat); // check

    List<MatOfPoint> contours = new ArrayList<MatOfPoint>();
    Imgproc.findContours(dilatedMat, contours, new Mat(), Imgproc.RETR_LIST, Imgproc.CHAIN_APPROX_SIMPLE);

    // Use only the largest contour. Other contours (any other possible blobs of this color range) will be ignored.
    MatOfPoint largestContour = contours.get(0);
    double largestContourArea = Imgproc.contourArea(largestContour);

    for (int i = 1; i < contours.size(); ++i) { // NB Notice the prefix increment.
        MatOfPoint currentContour = contours.get(0);
        double currentContourArea = Imgproc.contourArea(currentContour);
        if (currentContourArea > largestContourArea) {
            largestContourArea = currentContourArea;
            largestContour = currentContour;
        }
    }

    Rect detectedBlobRoi = Imgproc.boundingRect(largestContour);
    Mat detectedBlobRgba = rgbaFrame.submat(detectedBlobRoi);
    Highgui.imwrite("/mnt/sdcard/DCIM/detectedBlobRgba.jpg", detectedBlobRgba); // check
}
```
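The comment in `detectColoredBlob` says that only the largest contour should be kept and then boxed. Below is a minimal plain-Java sketch of that selection, assuming contours are given as lists of `{x, y}` points instead of OpenCV `MatOfPoint`s. The class and method names are hypothetical, the shoelace formula stands in for `Imgproc.contourArea`, and the box is assumed to count both extreme pixels (width = maxX - minX + 1). Each loop iteration reads contour `i`.

```java
import java.util.List;

/**
 * Hypothetical plain-Java sketch (no OpenCV): pick the contour with the largest
 * area, then compute its axis-aligned bounding box.
 */
final class LargestBlob {

    /** Absolute polygon area via the shoelace formula. */
    static double area(List<int[]> contour) {
        double twiceArea = 0;
        int n = contour.size();
        for (int i = 0; i < n; i++) {
            int[] p = contour.get(i);
            int[] q = contour.get((i + 1) % n); // wrap around to close the polygon
            twiceArea += (double) p[0] * q[1] - (double) q[0] * p[1];
        }
        return Math.abs(twiceArea) / 2.0;
    }

    /** Index of the largest contour; assumes at least one contour exists. */
    static int largestIndex(List<List<int[]>> contours) {
        int best = 0;
        double bestArea = area(contours.get(0));
        for (int i = 1; i < contours.size(); i++) {
            double a = area(contours.get(i)); // compare contour i, not contour 0
            if (a > bestArea) {
                bestArea = a;
                best = i;
            }
        }
        return best;
    }

    /** Box as {x, y, width, height}, counting both extreme pixels. */
    static int[] boundingBox(List<int[]> contour) {
        int minX = Integer.MAX_VALUE, minY = Integer.MAX_VALUE;
        int maxX = Integer.MIN_VALUE, maxY = Integer.MIN_VALUE;
        for (int[] p : contour) {
            minX = Math.min(minX, p[0]);
            minY = Math.min(minY, p[1]);
            maxX = Math.max(maxX, p[0]);
            maxY = Math.max(maxY, p[1]);
        }
        return new int[] {minX, minY, maxX - minX + 1, maxY - minY + 1};
    }
}
```

With a small triangle at index 0 and a 10x10 square at index 1, `largestIndex` returns 1 and the square's box is `{10, 10, 11, 11}`.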

- Original image on the computer; the frame was captured by holding the phone's camera in front of the laptop screen.

- rgbaFrame.jpg

- hsvImage.jpg

- dilatedMat.jpg

- maskedImage.jpg

- detectedBlobRgba.jpg

EDIT:

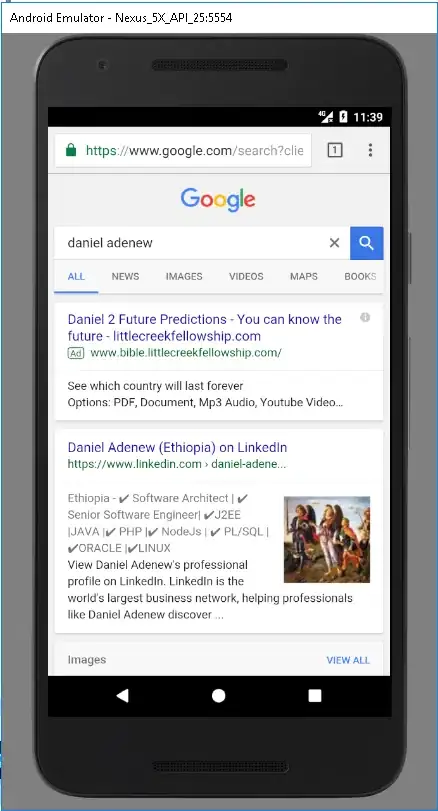

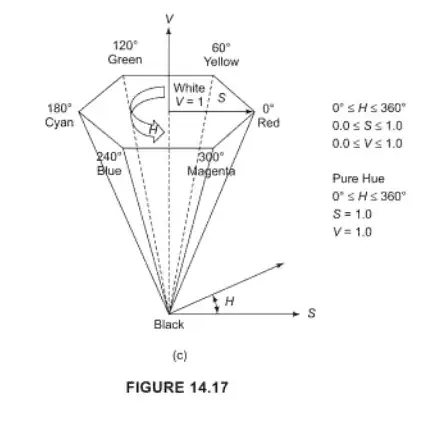

I then changed the thresholds to `Core.inRange(hsvImage, new Scalar(0, 50, 40), new Scalar(10, 255, 255), maskedImage);` and made a red test image with the website colorizer.org, which accepts custom HSV values. I picked the OpenCV red `Scalar(3, 217, 225)`, which falls inside the range passed to `inRange` above, and rescaled its channels to colorizer.org's scale (H = 0-360, S = 0-100, V = 0-100) by multiplying H by 2, and by dividing S and V by 255 and multiplying by 100. This gave 6, 85.09, 88.24, which I entered on the website before taking a screenshot (the first of the images listed below). For comparison, touching the red blob in the running app later reported 3, 219, 255.
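The rescaling described above can be written as a small helper. This is a sketch with hypothetical names. The `fullRange` flag is an addition: OpenCV's 8-bit `COLOR_RGB2HSV` stores hue as degrees / 2 (0-179), which is what multiplying by 2 undoes, whereas `COLOR_RGB2HSV_FULL` (used in the code above) stores hue as degrees * 255 / 360 (0-255), so there the factor would be 360 / 255. The Android variant targets `Color.HSVToColor`, which takes hue in degrees and saturation/value in 0-1.

```java
/**
 * Hypothetical sketch: rescale an 8-bit OpenCV HSV triple to other HSV conventions.
 * Assumption: hue is 0-179 (COLOR_RGB2HSV, degrees / 2) or
 * 0-255 (COLOR_RGB2HSV_FULL, degrees * 255 / 360).
 */
final class HsvScale {

    /** OpenCV 8-bit hue to degrees in [0, 360). */
    static double hueDegrees(double h, boolean fullRange) {
        return fullRange ? h * 360.0 / 255.0 : h * 2.0;
    }

    /** colorizer.org scale: H in degrees, S and V in percent (0-100). */
    static double[] toColorizer(double h, double s, double v, boolean fullRange) {
        return new double[] {hueDegrees(h, fullRange), s / 255.0 * 100.0, v / 255.0 * 100.0};
    }

    /** android.graphics.Color.HSVToColor scale: H in degrees, S and V in 0-1. */
    static float[] toAndroidHsv(double h, double s, double v, boolean fullRange) {
        return new float[] {
            (float) hueDegrees(h, fullRange), (float) (s / 255.0), (float) (v / 255.0)
        };
    }
}
```

For the values above, `toColorizer(3, 217, 225, false)` gives roughly 6, 85.10, 88.24.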

- Original screenshot, from which I captured the frame below.

- rgbaFrame.jpg

- hsvImage.jpg

- maskedImage.jpg

- dilatedMat.jpg

- detectedBlobRgba.jpg

IMPORTANT:

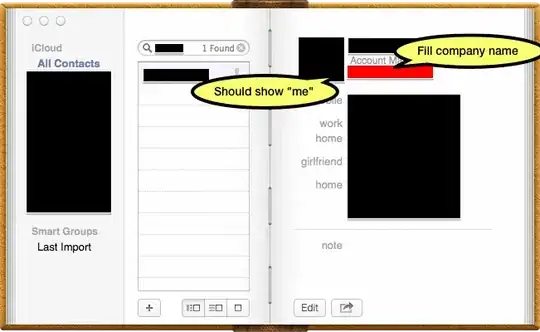

The method above is actually invoked in my test application when I touch inside the rgbaFrame (i.e. it is called inside the `onTouch` method). I also use the following code to print the hue, saturation, and value of the touched colored blob to a `TextView`. When I ran the application and touched the red blob, I got Hue: 3, Saturation: 219, Value: 255.

```java
public boolean onTouch(View v, MotionEvent motionEvent) {
    detectColoredBlob();

    int cols = rgbaFrame.cols();
    int rows = rgbaFrame.rows();

    int xOffset = (openCvCameraBridge.getWidth() - cols) / 2;
    int yOffset = (openCvCameraBridge.getHeight() - rows) / 2;

    int x = (int) motionEvent.getX() - xOffset;
    int y = (int) motionEvent.getY() - yOffset;

    Log.i(TAG, "Touch image coordinates: (" + x + ", " + y + ")"); // check

    if ((x < 0) || (y < 0) || (x > cols) || (y > rows)) {
        return false;
    }

    Rect touchedRect = new Rect();
    touchedRect.x = (x > 4) ? x - 4 : 0;
    touchedRect.y = (y > 4) ? y - 4 : 0;
    touchedRect.width = (x + 4 < cols) ? x + 4 - touchedRect.x : cols - touchedRect.x;
    touchedRect.height = (y + 4 < rows) ? y + 4 - touchedRect.y : rows - touchedRect.y;

    Mat touchedRegionRgba = rgbaFrame.submat(touchedRect);

    Mat touchedRegionHsv = new Mat();
    Imgproc.cvtColor(touchedRegionRgba, touchedRegionHsv, Imgproc.COLOR_RGB2HSV_FULL);

    double[] channelsDoubleArray = touchedRegionHsv.get(0, 0); //**********

    float[] channelsFloatArrayScaled = new float[3];
    for (int i = 0; i < channelsDoubleArray.length; i++) {
        if (i == 0) {
            channelsFloatArrayScaled[i] = ((float) channelsDoubleArray[i]) * 2; // TODO Wrap an ArrayIndexOutOfBoundsException wrapper
        } else if (i == 1 || i == 2) {
            channelsFloatArrayScaled[i] = ((float) channelsDoubleArray[i]) / 255; // TODO Wrap an ArrayIndexOutOfBoundsException wrapper
        }
    }

    int androidColor = Color.HSVToColor(channelsFloatArrayScaled);
    view.setBackgroundColor(androidColor);

    textView.setText("Hue : " + channelsDoubleArray[0] + "\nSaturation : " + channelsDoubleArray[1] + "\nValue : "
            + channelsDoubleArray[2]);

    touchedRegionHsv.release();

    return false; // don't need subsequent touch events
}
```
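The ternary expressions in `onTouch` build a small window reaching up to 4 pixels on each side of the touch point and clip it to the frame. Here is a pure-Java sketch of the same arithmetic, with hypothetical names and returning `{x, y, width, height}` instead of an OpenCV `Rect`, so the edge cases are easy to check. It assumes the touch coordinates have already passed the bounds check.

```java
/**
 * Hypothetical sketch mirroring the touchedRect ternaries in onTouch:
 * a window up to HALF pixels on each side of (x, y), clipped to cols x rows.
 */
final class TouchWindow {

    static final int HALF = 4; // same 4-pixel margin as the original code

    static int[] clamp(int x, int y, int cols, int rows) {
        int left = (x > HALF) ? x - HALF : 0;  // clip at the left edge
        int top = (y > HALF) ? y - HALF : 0;   // clip at the top edge
        int width = (x + HALF < cols) ? x + HALF - left : cols - left;   // clip at the right edge
        int height = (y + HALF < rows) ? y + HALF - top : rows - top;    // clip at the bottom edge
        return new int[] {left, top, width, height};
    }
}
```

For a touch at (100, 100) in a 640x480 frame this gives an 8x8 window at (96, 96); at the top-left corner the window shrinks to 4x4, and near the bottom-right corner, at (638, 478), it becomes 6x6.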