I know I can measure the execution time of a call to sess.run(), but is it possible to get a finer granularity and measure the execution time of individual operations?

- 124,992

- 159

- 614

- 958

- 1,157

- 1

- 9

- 12

10 Answers

I have used the Timeline object to get the time of execution for each node in the graph:

- you use a classic

sess.run()but also specify the optional argumentsoptionsandrun_metadata - you then create a

Timelineobject with therun_metadata.step_statsdata

Here is an example program that measures the performance of a matrix multiplication:

import tensorflow as tf

from tensorflow.python.client import timeline

x = tf.random_normal([1000, 1000])

y = tf.random_normal([1000, 1000])

res = tf.matmul(x, y)

# Run the graph with full trace option

with tf.Session() as sess:

run_options = tf.RunOptions(trace_level=tf.RunOptions.FULL_TRACE)

run_metadata = tf.RunMetadata()

sess.run(res, options=run_options, run_metadata=run_metadata)

# Create the Timeline object, and write it to a json

tl = timeline.Timeline(run_metadata.step_stats)

ctf = tl.generate_chrome_trace_format()

with open('timeline.json', 'w') as f:

f.write(ctf)

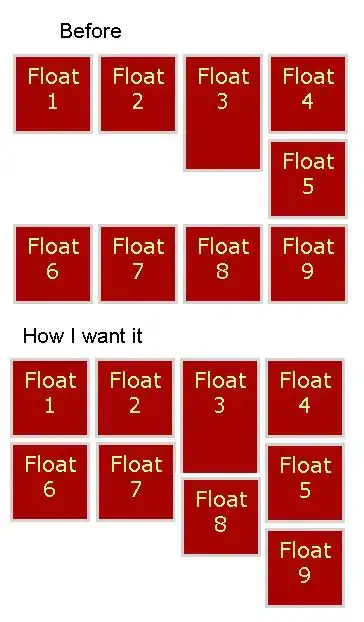

You can then open Google Chrome, go to the page chrome://tracing and load the timeline.json file.

You should see something like:

- 27,908

- 11

- 92

- 91

-

1Hi! I tried creating a Timeline for my Network training, but unfortunately doing it as you showed only produces a timeline for the last invocation of session.run. Is there a way to aggregate the timeline over all sessions? – fat-lobyte Jul 22 '16 at 10:01

-

6Using TensorFlow 0.12.0-rc0, I found that I needed to make sure that libcupti.so/libcupti.dylib was in the library path in order for this to work. For me (on Mac), I added `/usr/local/cuda/extras/CUPTI/lib` to the `DYLD_LIBRARY_PATH`. – Daniel Trebbien Dec 12 '16 at 16:41

-

1Or `LD_LIBRARY_PATH=/usr/local/cuda/extras/CUPTI/lib64:${LD_LIBRARY_PATH}` on Ubuntu – Justin Harris Apr 30 '18 at 23:40

-

Why is there an add operator here? – user2991421 Aug 07 '18 at 00:35

-

Because when calling `tf.random_normal`, TensorFlow first create a random tensor with mean 0 and variance 1. It then multiplies by the standard deviation (1 here) and adds the mean (0 here). – Olivier Moindrot Aug 07 '18 at 10:03

-

My `.json` is around 1.7 GB and I'm unable to open it in Chrome. Is there any other way to open it like in tensorboard ? – Vedanshu Feb 25 '20 at 09:06

There is not yet a way to do this in the public release. We are aware that it's an important feature and we are working on it.

- 2,584

- 2

- 19

- 20

-

14Is it possible that there is an update to this answer? Because https://github.com/tensorflow/tensorflow/issues/899 seems as if one could probably calculate the FLOPs for individual operations which could give insights into the execution time. – Martin Thoma Dec 20 '16 at 22:07

Since this is high up when googling for "Tensorflow Profiling", note that the current (late 2017, TensorFlow 1.4) way of getting the Timeline is using a ProfilerHook. This works with the MonitoredSessions in tf.Estimator where tf.RunOptions are not available.

estimator = tf.estimator.Estimator(model_fn=...)

hook = tf.train.ProfilerHook(save_steps=10, output_dir='.')

estimator.train(input_fn=..., steps=..., hooks=[hook])

- 705

- 5

- 10

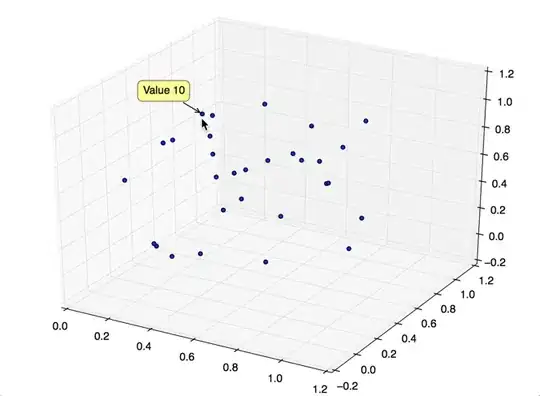

You can extract this information using runtime statistics. You will need to do something like this (check the full example in the above-mentioned link):

run_options = tf.RunOptions(trace_level=tf.RunOptions.FULL_TRACE)

run_metadata = tf.RunMetadata()

sess.run(<values_you_want_to_execute>, options=run_options, run_metadata=run_metadata)

your_writer.add_run_metadata(run_metadata, 'step%d' % i)

Better than just printing it you can see it in tensorboard:

Additionally, clicking on a node will display the exact total memory, compute time, and tensor output sizes.

- 14,689

- 5

- 57

- 69

- 214,103

- 147

- 703

- 753

-

1The link (https://www.tensorflow.org/programmers_guide/graph_viz#runtime_statistics) has been updated. – benjaminplanche Apr 04 '18 at 17:09

To update this answer, we do have some functionality for CPU profiling, focused on inference. If you look at https://github.com/tensorflow/tensorflow/tree/master/tensorflow/tools/benchmark you'll see a program you can run on a model to get per-op timings.

- 2,866

- 1

- 13

- 12

For the comments of fat-lobyte under Olivier Moindrot's answer, if you want to gather the timeline over all sessions, you can change "open('timeline.json', 'w')" to "open('timeline.json', 'a')".

- 29

- 2

2.0 Compatible Answer: You can use Profiling in Keras Callback.

Code for that is :

log_dir="logs/profile/" + datetime.now().strftime("%Y%m%d-%H%M%S")

tensorboard_callback = tf.keras.callbacks.TensorBoard(log_dir=log_dir, histogram_freq=1, profile_batch = 3)

model.fit(train_data,

steps_per_epoch=20,

epochs=5,

callbacks=[tensorboard_callback])

For more details on how to Profiling, refer this Tensorboard Link.

This works with Tensorflow 2 (tested with TF 2.5 and 2.8):

import tensorflow as tf

tf.profiler.experimental.start(r'/path/to/logdir')

with tf.profiler.experimental.Trace("My cool model", _r=1):

run_model_that_you_want_to_profile()

tf.profiler.experimental.stop()

Then you can see the trace in Tensorboard (tensorboard --logdir /path/to/logdir, then open http://localhost:6006/#profile in the browser).

Might also be useful:

- Guide: Optimize TensorFlow performance using the Profiler

tf.summary.trace_on()(didn't try it myself)- This colab tutorial on using the Tensorboard profiler

- 3,976

- 1

- 34

- 40

Recently released by Uber SBNet custom op library (http://www.github.com/uber/sbnet) has an implementation of cuda event based timers, which can be used in the following manner:

with tf.control_dependencies([input1, input2]):

dt0 = sbnet_module.cuda_timer_start()

with tf.control_dependencies([dt0]):

input1 = tf.identity(input1)

input2 = tf.identity(input2)

### portion of subgraph to time goes in here

with tf.control_dependencies([result1, result2, dt0]):

cuda_time = sbnet_module.cuda_timer_end(dt0)

with tf.control_dependencies([cuda_time]):

result1 = tf.identity(result1)

result2 = tf.identity(result2)

py_result1, py_result2, dt = session.run([result1, result2, cuda_time])

print "Milliseconds elapsed=", dt

Note that any portion of subgraph can be asynchronous you should be very careful with specifying all the input and output dependencies for the timer ops. Otherwise, the timer might get inserted into the graph out of order and you can get erroneous time. I found both the timeline and time.time() timing of very limited utility for profiling Tensorflow graphs. Also note that cuda_timer APIs will synchronize on the default stream, which is currently by design because TF uses multiple streams.

Having said this I personally recommend switching to PyTorch :) Development iteration is faster, code runs faster and everything is a lot less painful.

Another somewhat hacky and arcane approach to subtracting the overhead from tf.Session (which can be enormous) is to replicate the graph N times and run it for a variable N, solving for an equation of unknown fixed overhead. I.e. you'd measure around session.run() with N1=10 and N2=20 and you know that your time is t and overhead is x. So something like

N1*x+t = t1

N2*x+t = t2

Solve for x and t. Downside is this might require a lot of memory and is not necessarily accurate :) Also make sure that your inputs are completely different/random/independent otherwise TF will fold the entire subgraph and not run it N times... Have fun with TensorFlow :)

- 16,113

- 6

- 53

- 101

- 3,590

- 3

- 26

- 17

-

This example is lacking a complete set of variables or suggestion on how to create them. When I clicked on the sbnet repo in Github, it appears to be 3-4 years stale anyway. – brethvoice Aug 19 '21 at 17:07