My goal is to identify the times (in the datetime format shown below) at which there is a local maximum above a certain threshold. I appreciate there are other related answers that deal with numpy and scipy techniques for finding local maxima and minima, but none, to my knowledge, addresses a threshold level.

I have the following pandas.Series, represented as df_1, which stores integer values for given times:

    t_min
    2015-12-26 14:45:00    46
    2015-12-26 14:46:00    25
    2015-12-26 14:47:00    39
    2015-12-26 14:48:00    58
    2015-12-26 14:49:00    89
    2015-12-26 14:50:00    60
    2015-12-26 14:51:00    57
    2015-12-26 14:52:00    60
    2015-12-26 14:53:00    46
    2015-12-26 14:54:00    31
    2015-12-26 14:55:00    66
    2015-12-26 14:56:00    78
    2015-12-26 14:57:00    49
    2015-12-26 14:58:00    47
    2015-12-26 14:59:00    31
    2015-12-26 15:00:00    55
    2015-12-26 15:01:00    19
    2015-12-26 15:02:00    10
    2015-12-26 15:03:00    31
    2015-12-26 15:04:00    36
    2015-12-26 15:05:00    61
    2015-12-26 15:06:00    29
    2015-12-26 15:07:00    32
    2015-12-26 15:08:00    49
    2015-12-26 15:09:00    35
    2015-12-26 15:10:00    17
    2015-12-26 15:11:00    22

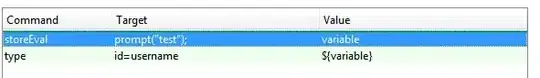

I use the following to find the array indices at which local maxima occur, as suggested in another answer here:

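    import numpy as np
    from scipy.signal import argrelextrema

    # plain float replaces the np.float alias, which was removed in NumPy 1.24
    x = np.array(df_1, dtype=float)

    # indices of the local maxima
    print(argrelextrema(x, np.greater))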

However, I want an array of the times at which these maxima occur, not the integer (now converted to float) values at those indices, which I could get with x[argrelextrema(x, np.greater)[0]]. Any idea how I could obtain an array of those times?
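For illustration, here is a minimal sketch of one possible way to get the times. It assumes df_1 has a DatetimeIndex, so the integer positions returned by argrelextrema can be used to index df_1.index directly. The sample data is rebuilt with pd.date_range, which is an assumption about how the Series was created, and the names peak_idx, peak_times and peaks are made up for the example.

```python
import numpy as np
import pandas as pd
from scipy.signal import argrelextrema

# Rebuild the sample Series (assumption: one value per minute, DatetimeIndex)
values = [46, 25, 39, 58, 89, 60, 57, 60, 46, 31, 66, 78, 49, 47,
          31, 55, 19, 10, 31, 36, 61, 29, 32, 49, 35, 17, 22]
index = pd.date_range("2015-12-26 14:45", periods=len(values), freq="min")
df_1 = pd.Series(values, index=index)

x = df_1.to_numpy(dtype=float)

# Integer positions of strict local maxima (endpoints are never reported)
peak_idx = argrelextrema(x, np.greater)[0]

# Map positions back to timestamps via the Series index
peak_times = df_1.index[peak_idx]

# Or keep times and values together as a sub-Series
peaks = df_1.iloc[peak_idx]

print(peak_times)
print(peaks)
```

Here peak_times is a DatetimeIndex, so it can be converted with peak_times.to_numpy() or peak_times.tolist() if a plain array or list is needed.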

Following this, I also aim to refine this list of times by selecting only maxima above a certain threshold, i.e. whose slope is above a certain limit. This would let me avoid picking up every single local maximum and instead identify the most significant "peaks". Would anyone have a suggestion on how to do this?
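As a rough sketch of what such filtering might look like, scipy.signal.find_peaks accepts a minimum height and a minimum prominence. Note that prominence (how far a peak rises above the surrounding valleys) is swapped in here as a stand-in for "significance"; it is not a slope measure. The cut-offs of 60 and 40 are arbitrary values picked for this sample data, and the rebuilt Series and the names sig_idx and sig_times are assumptions for the example.

```python
import pandas as pd
from scipy.signal import find_peaks

# Rebuild the sample Series (assumption: one value per minute, DatetimeIndex)
values = [46, 25, 39, 58, 89, 60, 57, 60, 46, 31, 66, 78, 49, 47,
          31, 55, 19, 10, 31, 36, 61, 29, 32, 49, 35, 17, 22]
index = pd.date_range("2015-12-26 14:45", periods=len(values), freq="min")
df_1 = pd.Series(values, index=index)

x = df_1.to_numpy(dtype=float)

# Keep only local maxima that are at least 60 high AND stand out from
# their surroundings by at least 40 (both cut-offs are illustrative)
sig_idx, props = find_peaks(x, height=60, prominence=40)

# Timestamps of the remaining "significant" peaks
sig_times = df_1.index[sig_idx]

print(sig_times)
print(props["prominences"])
```

If a plain value threshold is all that is needed, passing only height=... to find_peaks, or masking the values at the argrelextrema indices, would be a simpler alternative.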