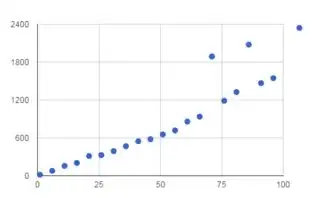

Short answer: str slices, in general, copy. That means that your function that does a slice for each of your string's n suffixes is doing O(n2) work. That said, you can avoid copies if you can work with bytes-like objects using memoryviews to get zero-copy views of the original bytes data. See How you can do zero copy slicing below for how to make it work.

Long answer: (C)Python str do not slice by referencing a view of a subset of the data. There are exactly three modes of operation for str slicing:

- Complete slice, e.g.

mystr[:]: Returns a reference to the exact same str (not just shared data, the same actual object, mystr is mystr[:] since str is immutable so there is no risk to doing so)

- The zero length slice and (implementation dependent) cached length 1 slices; the empty string is a singleton (

mystr[1:1] is mystr[2:2] is ''), and low ordinal strings of length one are cached singletons as well (on CPython 3.5.0, it looks like all characters representable in latin-1, that is Unicode ordinals in range(256), are cached)

- All other slices: The sliced

str is copied at creation time, and thereafter unrelated to the original str

The reason why #3 is the general rule is to avoid issues with large str being kept in memory by a view of a small portion of it. If you had a 1GB file, read it in and sliced it like so (yes, it's wasteful when you can seek, this is for illustration):

with open(myfile) as f:

data = f.read()[-1024:]

then you'd have 1 GB of data being held in memory to support a view that shows the final 1 KB, a serious waste. Since slices are usually smallish, it's almost always faster to copy on slice instead of create views. It also means str can be simpler; it needs to know its size, but it need not track an offset into the data as well.

How you can do zero copy slicing

There are ways to perform view based slicing in Python, and in Python 2, it will work on str (because str is bytes-like in Python 2, supporting the buffer protocol). With Py2 str and Py3 bytes (as well as many other data types like bytearray, array.array, numpy arrays, mmap.mmaps, etc.), you can create a memoryview that is a zero copy view of the original object, and can be sliced without copying data. So if you can use (or encode) to Py2 str/Py3 bytes, and your function can work with arbitrary bytes-like objects, then you could do:

def do_something_on_all_suffixes(big_string):

# In Py3, may need to encode as latin-1 or the like

remaining_suffix = memoryview(big_string)

# Rather than explicit loop, just replace view with one shorter view

# on each loop

while remaining_suffix: # Stop when we've sliced to empty view

some_constant_time_operation(remaining_suffix)

remaining_suffix = remaining_suffix[1:]

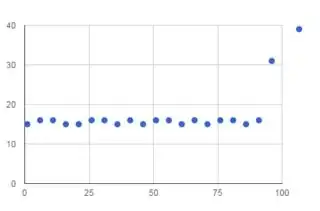

The slices of memoryviews do make new view objects (they're just ultra-lightweight with fixed size unrelated to the amount of data they view), just not any data, so some_constant_time_operation can store a copy if needed and it won't be changed when we slice it down later. Should you need a proper copy as Py2 str/Py3 bytes, you can call .tobytes() to get the raw bytes obj, or (in Py3 only it appears), decode it directly to a str that copies from the buffer, e.g. str(remaining_suffix[10:20], 'latin-1').