I've implemented multiple variations of Steep Parallax, Relief and Parallax Occlusion mapping, and they all share the same bug: the effect only works correctly in one direction. This leads me to believe the problem lies in one of the values calculated outside the parallax mapping function itself, but I cannot figure out what is wrong.

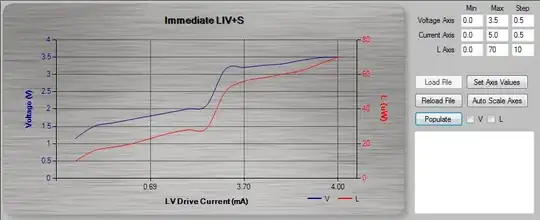

Here's a cube with parallax mapping seen from different angles. As you can see, the parallax effect is correct on only one side of the cube:

Here is the GLSL code that is used:

Vertex shader:

    layout(location = 0) in vec3 vertexPosition;
    layout(location = 1) in vec3 vertexNormal;
    layout(location = 2) in vec2 textureCoord;
    layout(location = 3) in vec3 vertexTangent;
    layout(location = 4) in vec3 vertexBitangent;

    out vec3 eyeVec;
    out vec3 fragPos;  // world-space position, read by the fragment shader
    out vec2 texCoord;
    out mat3 TBN;      // columns are the world-space tangent, bitangent and normal

    uniform vec3 cameraPosVec; // Camera's position
    uniform mat4 modelMat;     // Model matrix

    void main(void)
    {
        texCoord = textureCoord;
        fragPos = vec3(modelMat * vec4(vertexPosition, 1.0));

        // Compute TBN matrix
        mat3 normalMatrix = transpose(inverse(mat3(modelMat)));
        TBN = mat3(normalMatrix * vertexTangent,
                   normalMatrix * vertexBitangent,
                   normalMatrix * vertexNormal);

        eyeVec = TBN * (fragPos - cameraPosVec);
    }

Fragment shader:

    layout(location = 1) out vec4 diffuseBuffer;

    // Variables from vertex shader
    in vec3 eyeVec;
    in vec3 fragPos;
    in vec2 texCoord;

    uniform sampler2D diffuseTexture;
    uniform sampler2D heightTexture;

    vec2 parallaxOcclusionMapping(vec2 p_texCoords, vec3 p_viewDir)
    {
        // number of depth layers
        float numLayers = 50.0;
        // calculate the size of each layer
        float layerDepth = 1.0 / numLayers;
        // depth of current layer
        float currentLayerDepth = 0.0;
        // the amount to shift the texture coordinates per layer (from vector P)
        vec2 P = p_viewDir.xy / p_viewDir.z * 0.025;
        // return p_viewDir.xy / p_viewDir.z; // debug output; if enabled, it skips the ray march below
        vec2 deltaTexCoords = P / numLayers;

        // get initial values
        vec2 currentTexCoords = p_texCoords;
        float currentDepthMapValue = texture(heightTexture, currentTexCoords).r;
        float previousDepth = currentDepthMapValue;

        while(currentLayerDepth < currentDepthMapValue)
        {
            // shift texture coordinates along direction of P
            currentTexCoords -= deltaTexCoords;
            // get depthmap value at current texture coordinates
            currentDepthMapValue = texture(heightTexture, currentTexCoords).r;
            previousDepth = currentDepthMapValue;
            // get depth of next layer
            currentLayerDepth += layerDepth;
        }

        // -- parallax occlusion mapping interpolation from here on
        // get texture coordinates before collision (reverse operations)
        vec2 prevTexCoords = currentTexCoords + deltaTexCoords;

        // get depth after and before collision for linear interpolation
        float afterDepth = currentDepthMapValue - currentLayerDepth;
        float beforeDepth = texture(heightTexture, prevTexCoords).r - currentLayerDepth + layerDepth;

        // interpolation of texture coordinates
        float weight = afterDepth / (afterDepth - beforeDepth);
        vec2 finalTexCoords = prevTexCoords * weight + currentTexCoords * (1.0 - weight);

        return finalTexCoords;
    }

    void main(void)
    {
        vec2 newCoords = parallaxOcclusionMapping(texCoord, eyeVec);

        // Get diffuse color
        vec4 diffuse = texture(diffuseTexture, newCoords).rgba;

        // Write diffuse color to the diffuse buffer
        diffuseBuffer = diffuse;
    }

The code is literally copy-pasted from this tutorial

P.S. Inverting different components (x, y, z) of the eyeVec vector (after transforming it with the TBN matrix) changes the direction in which parallax works. For instance, inverting the X component makes parallax work on polygons facing upwards:

which suggests that there might be a problem with the tangents/bitangents or the TBN matrix, but I have not found anything. Also, rotating the object with a model matrix does not affect the direction in which the parallax effect works.
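A small numeric check may help narrow this down. The sketch below is in C++ purely for illustration (the post only shows shaders, so the CPU-side language is an assumption, and `Vec3`, `Mat3`, `mul` and `mulTransposed` are hypothetical helpers, not part of the shaders above). It compares `TBN * v` with `transpose(TBN) * v`. When the columns of an orthonormal TBN are the world-space tangent, bitangent and normal, `TBN * v` maps tangent space to world space, while the transpose maps world space back to tangent space. The two agree when TBN is the identity, as it would be for a face whose tangent frame lines up with the world axes, and they can disagree on other faces.

```cpp
struct Vec3 { float x, y, z; };

// Column-major 3x3 matrix, mirroring GLSL's mat3(c0, c1, c2).
struct Mat3 { Vec3 c0, c1, c2; };

float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// M * v: linear combination of the columns.
// With columns T, B, N in world space this maps tangent space -> world space.
Vec3 mul(const Mat3& m, const Vec3& v) {
    return { m.c0.x * v.x + m.c1.x * v.y + m.c2.x * v.z,
             m.c0.y * v.x + m.c1.y * v.y + m.c2.y * v.z,
             m.c0.z * v.x + m.c1.z * v.y + m.c2.z * v.z };
}

// transpose(M) * v: dot product of v with each column.
// For an orthonormal TBN this maps world space -> tangent space.
Vec3 mulTransposed(const Mat3& m, const Vec3& v) {
    return { dot(m.c0, v), dot(m.c1, v), dot(m.c2, v) };
}
```

For example, take a face with T = (0, 0, -1), B = (0, 1, 0), N = (1, 0, 0) and a world-space view vector (1, 0, 0.5). `mulTransposed` gives (-0.5, 0, 1), while `mul` gives (0.5, 0, -1), so the z component used in `p_viewDir.xy / p_viewDir.z` changes sign. With an identity TBN both functions return the input unchanged.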

EDIT:

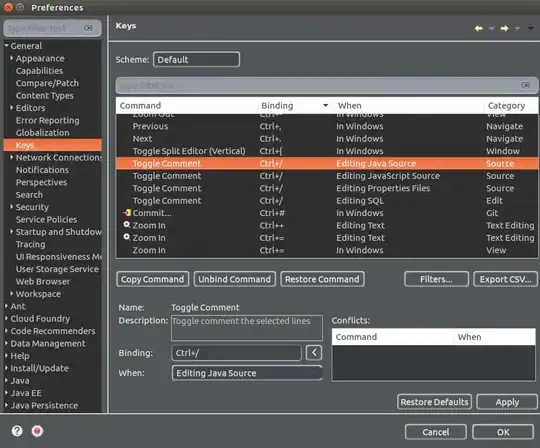

I have fixed the issue by changing how I calculate tangents/bitangents, moving the viewDirection calculation to the fragment shader, and skipping the matrix inverse when building the TBN matrix for this one calculation. See the newly added shader code here:

Vertex shader:

    out vec3 TanViewPos;
    out vec3 TanFragPos;

    void main(void)
    {
        // Tangent frame in world space
        vec3 T = normalize(mat3(modelMat) * vertexTangent);
        vec3 B = normalize(mat3(modelMat) * vertexBitangent);
        vec3 N = normalize(mat3(modelMat) * vertexNormal);

        TBN = transpose(inverse(mat3(T, B, N)));

        // Transpose only (no inverse): takes world-space vectors into tangent space
        mat3 TBN2 = transpose(mat3(T, B, N));
        TanViewPos = TBN2 * cameraPosVec;
        TanFragPos = TBN2 * fragPos;
    }

Fragment shader:

    in vec3 TanViewPos;
    in vec3 TanFragPos;

    void main(void)
    {
        vec3 viewDir = normalize(TanViewPos - TanFragPos);
        vec2 newCoords = parallaxOcclusionMapping(texCoord, viewDir);
    }

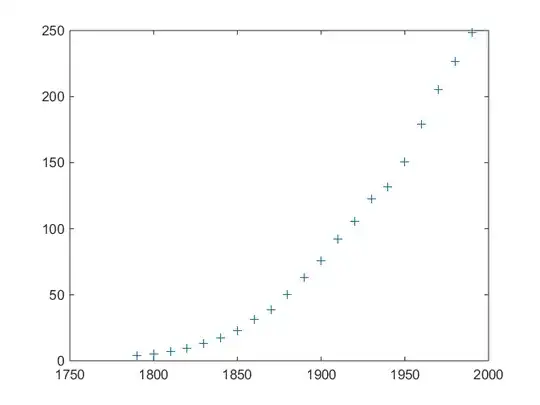

Regarding the tangent/bitangent calculation: I previously used ASSIMP to generate them for me. Now I've written code to calculate tangents and bitangents manually; however, my new code generates "flat/hard" tangents (i.e. the same tangent/bitangent for all 3 vertices of a triangle) instead of smooth ones. See the difference between the ASSIMP (smooth tangents) and my (flat tangents) implementations:

The parallax mapping now works in all directions:

However, this introduces another problem: all rounded objects now have flat shading (after performing normal mapping):

Is this problem specific to my engine (i.e. a bug somewhere) or is this a common issue that other engines somehow deal with?
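For reference, a common way to get smooth tangents from per-triangle ones is to add each triangle's tangent onto the vertices it uses and then re-orthogonalize the sum against the vertex normal (Gram-Schmidt). Below is a minimal C++ sketch of that idea. The `Mesh` layout and the name `computeSmoothTangents` are hypothetical, the language is an assumption (the post shows only shaders), and it assumes indexed geometry in which neighbouring triangles actually share vertices. If every triangle has its own copy of each vertex, nothing gets averaged and the result stays flat.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <initializer_list>
#include <vector>

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

static Vec3 add(Vec3 a, Vec3 b)    { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
static Vec3 sub(Vec3 a, Vec3 b)    { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
static float dot(Vec3 a, Vec3 b)   { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
static Vec3 normalizeOrZero(Vec3 a) {
    const float len = std::sqrt(dot(a, a));
    return len > 1e-8f ? scale(a, 1.0f / len) : Vec3{0.0f, 0.0f, 0.0f};
}

// Hypothetical mesh layout: indexed triangles, one normal and UV per vertex.
struct Mesh {
    std::vector<Vec3> positions, normals;
    std::vector<Vec2> uvs;
    std::vector<std::uint32_t> indices;      // three per triangle
    std::vector<Vec3> tangents, bitangents;  // filled by computeSmoothTangents
};

void computeSmoothTangents(Mesh& m) {
    const std::size_t n = m.positions.size();
    std::vector<Vec3> accT(n, Vec3{0.0f, 0.0f, 0.0f});
    std::vector<Vec3> accB(n, Vec3{0.0f, 0.0f, 0.0f});

    // Pass 1: add each triangle's flat tangent/bitangent to its three vertices.
    for (std::size_t i = 0; i + 2 < m.indices.size(); i += 3) {
        const std::uint32_t i0 = m.indices[i], i1 = m.indices[i + 1], i2 = m.indices[i + 2];
        const Vec3 e1 = sub(m.positions[i1], m.positions[i0]);
        const Vec3 e2 = sub(m.positions[i2], m.positions[i0]);
        const float du1 = m.uvs[i1].x - m.uvs[i0].x, dv1 = m.uvs[i1].y - m.uvs[i0].y;
        const float du2 = m.uvs[i2].x - m.uvs[i0].x, dv2 = m.uvs[i2].y - m.uvs[i0].y;
        const float det = du1 * dv2 - du2 * dv1;
        if (std::fabs(det) < 1e-12f) continue;  // degenerate UVs: skip this triangle
        const float r = 1.0f / det;
        const Vec3 t = scale(sub(scale(e1, dv2), scale(e2, dv1)), r);
        const Vec3 b = scale(sub(scale(e2, du1), scale(e1, du2)), r);
        for (std::uint32_t v : {i0, i1, i2}) {
            accT[v] = add(accT[v], t);
            accB[v] = add(accB[v], b);
        }
    }

    // Pass 2: per vertex, make the summed tangent perpendicular to the normal.
    m.tangents.resize(n);
    m.bitangents.resize(n);
    for (std::size_t v = 0; v < n; ++v) {
        const Vec3 nrm = normalizeOrZero(m.normals[v]);
        // Gram-Schmidt: remove the part of the summed tangent along the normal.
        const Vec3 t = normalizeOrZero(sub(accT[v], scale(nrm, dot(nrm, accT[v]))));
        // Rebuild the bitangent and keep the handedness of the UV mapping.
        const Vec3 b = cross(nrm, t);
        const float sign = dot(b, accB[v]) < 0.0f ? -1.0f : 1.0f;
        m.tangents[v] = t;
        m.bitangents[v] = scale(b, sign);
    }
}
```

Because the tangent is rebuilt from the smooth vertex normal, it varies across a curved surface along with the normal instead of being constant per triangle. Meshes with hard edges or UV seams usually need those vertices split first, which this sketch does not attempt.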