I understand the F1-measure is the harmonic mean of precision and recall. But what values define how good or bad an F1-measure is? I can't seem to find any references (Google or academic) answering my question.


What counts as good or bad depends on how hard the task is. – Aaron Apr 19 '16 at 21:11

2 Answers

Consider sklearn.dummy.DummyClassifier(strategy='uniform'), a classifier that makes random guesses (i.e. a bad classifier). We can treat DummyClassifier as a benchmark to beat, so let's look at its f1-score.

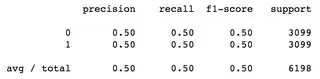

In a binary classification problem with a balanced dataset (6198 samples in total: 3099 labelled 0 and 3099 labelled 1), the f1-score is 0.5 for both classes, and the weighted average is 0.5:

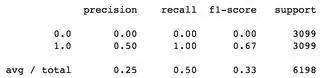
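The 0.5 scores in the report can also be reproduced without sklearn. Below is a minimal pure-Python sketch, assuming a uniform random guesser that behaves like DummyClassifier(strategy='uniform'); `per_class_f1` is a hypothetical helper, and the seed is arbitrary, so the exact scores will wobble slightly around 0.5.

```python
import random

def per_class_f1(y_true, y_pred, label):
    """F1 for one class, treating `label` as the positive class."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    # 2TP / (2TP + FP + FN) equals 2PR / (P + R); return 0 if nothing is defined.
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

# Balanced dataset from the answer: 3099 samples of each label.
y_true = [0] * 3099 + [1] * 3099

# Uniform random guessing; the seed only makes the run reproducible.
rng = random.Random(0)
y_pred = [rng.choice([0, 1]) for _ in y_true]

f1_0 = per_class_f1(y_true, y_pred, 0)
f1_1 = per_class_f1(y_true, y_pred, 1)
# Weighted by support (3099 each), so here it is just the plain mean.
weighted = (f1_0 * 3099 + f1_1 * 3099) / len(y_true)
print(f"F1 label 0: {f1_0:.2f}, F1 label 1: {f1_1:.2f}, weighted: {weighted:.2f}")
```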

Second example: using DummyClassifier(strategy='constant'), i.e. guessing the same label every time (label 1 in this case), the f1-score for label 0 is 0.00 and for label 1 it is about 0.67 (precision 0.5, recall 1.0), so the average of the f1-scores is 0.33.
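The 0.33 average follows directly from the confusion-matrix counts. A small sketch using exact fractions; `f1` is a hypothetical helper based on the count form 2TP / (2TP + FP + FN), which is equivalent to the harmonic mean of precision and recall. Because the classes are balanced, the weighted average equals the plain (macro) average here.

```python
from fractions import Fraction

def f1(tp, fp, fn):
    """F1 from confusion-matrix counts; 0 when there are no true positives."""
    denom = 2 * tp + fp + fn
    return Fraction(2 * tp, denom) if denom else Fraction(0)

n0 = n1 = 3099  # balanced dataset from the answer

# Always predicting label 1:
# label 1 -> every true 1 is found (TP = n1), every true 0 is a false positive (FP = n0)
f1_label1 = f1(tp=n1, fp=n0, fn=0)
# label 0 -> never predicted, so TP = FP = 0 and every true 0 is missed (FN = n0)
f1_label0 = f1(tp=0, fp=0, fn=n0)

macro = (f1_label0 + f1_label1) / 2
print(f1_label1, f1_label0, macro)  # 2/3 0 1/3
```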

I consider these to be bad f1-scores, given the balanced dataset.

PS: the summaries were generated using sklearn.metrics.classification_report.
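A sketch of how such reports might be produced, assuming scikit-learn 0.22 or later (for the `zero_division` argument) and NumPy. The original features aren't shown, so `X` here is a placeholder column of zeros; dummy classifiers ignore the features anyway. The uniform-strategy numbers depend on `random_state`.

```python
import numpy as np
from sklearn.dummy import DummyClassifier
from sklearn.metrics import classification_report, f1_score

# Balanced labels from the answer; X is a placeholder feature column.
y = np.array([0] * 3099 + [1] * 3099)
X = np.zeros((len(y), 1))

uniform = DummyClassifier(strategy="uniform", random_state=0).fit(X, y)
constant = DummyClassifier(strategy="constant", constant=1).fit(X, y)

# zero_division=0 avoids the warning for label 0, which the constant
# classifier never predicts (its precision would be 0/0).
print(classification_report(y, uniform.predict(X), zero_division=0))
print(classification_report(y, constant.predict(X), zero_division=0))

# Support-weighted F1 of the random guesser, close to 0.5.
uniform_f1 = f1_score(y, uniform.predict(X), average="weighted")
# Per-class F1 of the constant guesser: label 0 -> 0.0, label 1 -> about 0.667.
const_f1 = f1_score(y, constant.predict(X), average=None, zero_division=0)
print(uniform_f1, const_f1)
```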


To summarize your answer, anything below 0.5 is bad, right? – Alisson Reinaldo Silva Feb 28 '19 at 01:06

You did not find any reference defining good or bad ranges of the F1 measure because no such range exists. The F1 measure is a combined metric of precision and recall.
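To make "combined metric" concrete: with precision $P = TP/(TP+FP)$ and recall $R = TP/(TP+FN)$, F1 is their harmonic mean, which can also be written directly in confusion-matrix counts. Since $P$ and $R$ lie in $[0, 1]$, so does F1; what has no universal definition is the cut-off for "good".

```latex
F_1 = \frac{2}{\frac{1}{P} + \frac{1}{R}}
    = \frac{2PR}{P + R}
    = \frac{2\,TP}{2\,TP + FP + FN},
\qquad 0 \le F_1 \le 1
```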

Say you have two algorithms, where one has higher precision and lower recall than the other. From this observation alone, you cannot tell which algorithm is better, unless your goal is to maximize precision.

So, given this ambiguity about how to choose the better of two algorithms (one with higher recall, the other with higher precision), we use the F1 measure to select between them.
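A sketch of that selection, using made-up precision/recall values for two hypothetical algorithms A and B (not from the answer). Both have the same arithmetic mean of precision and recall (0.7), so a plain average cannot separate them; the harmonic mean penalizes A's larger gap between the two.

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical (precision, recall) pairs with opposite strengths.
algorithms = {
    "A (higher precision)": (0.9, 0.5),
    "B (higher recall)": (0.6, 0.8),
}

scores = {name: f1(p, r) for name, (p, r) in algorithms.items()}
for name, score in scores.items():
    print(f"{name}: F1 = {score:.3f}")

best = max(scores, key=scores.get)
print("Higher F1:", best)
```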

The F1 measure is a relative measure; that is why there is no absolute range that defines how good your algorithm is.


Though if classification of class A has an F1 of 0.9 and classification of class B has 0.3, no matter how you play with the threshold to trade off precision and recall, the 0.3 will never reach 0.9. So in this hypothetical case, can't we be sure, using just the F1 score, that the performance for classifying class A is much better than for classifying class B? – KubiK888 Apr 19 '16 at 19:58
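The threshold argument in this comment can be checked directly by sweeping the decision threshold and keeping the best F1. A minimal sketch with made-up scores and labels; the helper names are hypothetical. Every threshold between two observed scores gives the same predictions as one of the observed scores, so the value found is the most that threshold tuning alone can achieve for these scores.

```python
def f1_from_counts(tp, fp, fn):
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def best_f1_over_thresholds(scores, labels):
    """Try every observed score as a threshold; return (best_f1, threshold)."""
    best = (0.0, None)
    for t in sorted(set(scores)):
        preds = [s >= t for s in scores]
        tp = sum(p and y == 1 for p, y in zip(preds, labels))
        fp = sum(p and y == 0 for p, y in zip(preds, labels))
        fn = sum((not p) and y == 1 for p, y in zip(preds, labels))
        f1 = f1_from_counts(tp, fp, fn)
        if f1 > best[0]:
            best = (f1, t)
    return best

# Hypothetical classifier scores and true labels for one class.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]
labels = [1, 1, 0, 1, 0, 0]
print(best_f1_over_thresholds(scores, labels))  # best F1 is 6/7 at threshold 0.6
```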


We compare precision, recall and F1 score between two algorithms/approaches, not between two classes. – saurabh agarwal Apr 21 '16 at 04:40


F1 Score is the harmonic mean of Precision and Recall. Therefore, this score takes both false positives and false negatives into account. Intuitively it is not as easy to understand as accuracy, but F1 is usually more useful than accuracy, especially if you have an uneven class distribution. Accuracy works best if false positives and false negatives have similar costs. If the costs of false positives and false negatives are very different, it's better to look at both Precision and Recall. F1 Score = 2*(Recall * Precision) / (Recall + Precision) – kamran kausar Jul 24 '18 at 07:05
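To illustrate the point about uneven class distributions, here is a sketch with a hypothetical 950/50 split and a classifier that always predicts the majority (negative) class: accuracy looks high, while F1 for the minority class is zero. The counts are made up for illustration.

```python
def accuracy(tp, fp, fn, tn):
    return (tp + tn) / (tp + fp + fn + tn)

def f1(tp, fp, fn):
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

# Hypothetical imbalanced test set: 950 negatives, 50 positives.
# A classifier that always predicts "negative" finds no positives at all.
always_negative = dict(tp=0, fp=0, fn=50, tn=950)

acc = accuracy(**always_negative)
f1_pos = f1(always_negative["tp"], always_negative["fp"], always_negative["fn"])
print(f"accuracy = {acc:.2f}, F1 (positive class) = {f1_pos:.2f}")
```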