Precision and Recall are statistical measures of performance for information retrieval algorithms, based on binary classification. Precision is the percentage of retrieved items that are relevant. Recall is the percentage of relevant items that the algorithm successfully retrieved, relative to all relevant items that exist and could have been found.

For binary classification, the fundamental quantities are the number (or percent) of True Positive, False Positive, True Negative, and False Negative classifications obtained by a classification algorithm. In the context of information retrieval, a "Positive" result is an item retrieved by the algorithm, while a "Negative" result is an item not retrieved by the algorithm.

"True" or "False" refer to whether the classification made by the algorithm was or was not in fact correct.

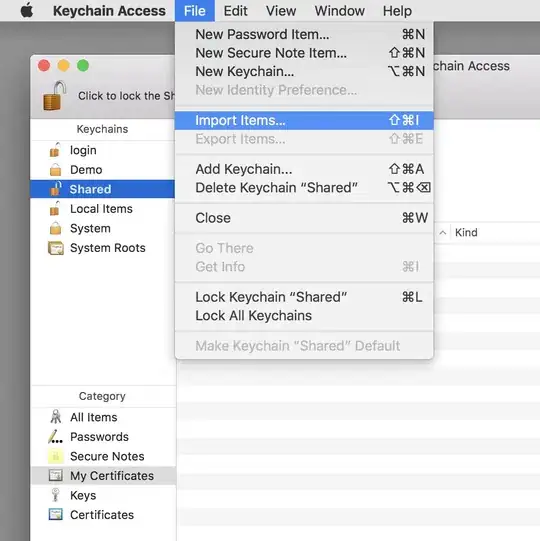

Illustration File:Precisionrecall.svg (CC BY-SA) by Walber, Wikipedia

So in the context of information retrieval, "True Positive" means the algorithm retrieved an item and should have, and "True Negative" means the algorithm did not retrieve an item and should not have. "False Positive" means the algorithm retrieved an item and should not have, and "False Negative" means the algorithm failed to retrieve an item but should have.
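The four outcomes can be counted with simple set operations. The following is a minimal sketch, not a reference implementation: the function name `confusion_counts`, its parameters, and the ten-document collection are all hypothetical, chosen only for illustration.

```python
def confusion_counts(retrieved, relevant, collection):
    """Count (TP, FP, FN, TN) for a single retrieval result.

    All three arguments are iterables of item identifiers (assumed hashable).
    """
    retrieved, relevant, collection = set(retrieved), set(relevant), set(collection)
    tp = len(retrieved & relevant)               # retrieved, and should have been
    fp = len(retrieved - relevant)               # retrieved, but should not have been
    fn = len(relevant - retrieved)               # not retrieved, but should have been
    tn = len(collection - retrieved - relevant)  # not retrieved, and should not have been
    return tp, fp, fn, tn


# Hypothetical collection of ten documents labelled 1..10
collection = range(1, 11)
relevant = {1, 2, 3, 4}   # items that should be retrieved
retrieved = {3, 4, 5}     # items the algorithm actually returned

print(confusion_counts(retrieved, relevant, collection))  # (2, 1, 2, 5)
```

The four counts always sum to the size of the collection, which is a quick sanity check on any such tally.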

Precision is defined as True Positive/(True Positive + False Positive). It is therefore the fraction of all items retrieved by the algorithm that are correct or relevant.
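As a worked illustration with made-up counts, suppose an algorithm retrieves 3 items, of which 2 are relevant and 1 is not. Writing TP and FP as shorthand for the True Positive and False Positive counts (abbreviations introduced here for brevity), the precision is:

$$
\text{Precision} = \frac{TP}{TP + FP} = \frac{2}{2 + 1} = \frac{2}{3} \approx 0.67
$$

In other words, about two thirds of what this hypothetical algorithm returned was relevant.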

Recall is defined as True Positive/(True Positive + False Negative). It is therefore the fraction of all relevant items that the algorithm actually retrieved.
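Both definitions translate directly into code. The sketch below assumes the counts are already available as integers; the function names and the example counts are hypothetical. Returning `None` when a denominator is zero is one possible convention chosen for this sketch, not something the definitions themselves prescribe.

```python
def precision(tp, fp):
    """True Positive / (True Positive + False Positive).

    Returns None when nothing was retrieved (assumed convention).
    """
    retrieved = tp + fp
    return tp / retrieved if retrieved else None


def recall(tp, fn):
    """True Positive / (True Positive + False Negative).

    Returns None when no relevant items exist (assumed convention).
    """
    relevant = tp + fn
    return tp / relevant if relevant else None


# Hypothetical counts: 2 true positives, 1 false positive, 2 false negatives
print(round(precision(2, 1), 2))  # 0.67
print(recall(2, 2))               # 0.5
```

With these counts the algorithm is fairly precise (two of its three results are relevant) but recovers only half of the relevant items, which shows why the two measures are usually reported together.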