I am working on a video-making app.

In it, I need to record a video in the first view and then display it in a second view.

To record video, I followed this tutorial.

I have changed the `captureOutput:didFinishRecordingToOutputFileAtURL:fromConnections:error:` delegate method to suit my needs.

Here is my updated method:

```objective-c
- (void)captureOutput:(AVCaptureFileOutput *)captureOutput
didFinishRecordingToOutputFileAtURL:(NSURL *)outputFileURL
      fromConnections:(NSArray *)connections
                error:(NSError *)error
{
    NSLog(@"didFinishRecordingToOutputFileAtURL - enter");

    BOOL RecordedSuccessfully = YES;
    if ([error code] != noErr)
    {
        // A problem occurred: find out whether the recording still finished successfully.
        NSLog(@"didFinishRecordingToOutputFileAtURL error:%@", error);
        id value = [[error userInfo] objectForKey:AVErrorRecordingSuccessfullyFinishedKey];
        if (value)
        {
            RecordedSuccessfully = [value boolValue];
        }
    }

    if (RecordedSuccessfully)
    {
        //----- RECORDED SUCCESSFULLY -----
        NSLog(@"didFinishRecordingToOutputFileAtURL - success");
        ALAssetsLibrary *library = [[ALAssetsLibrary alloc] init];
        if ([library videoAtPathIsCompatibleWithSavedPhotosAlbum:outputFileURL])
        {
            // Copy the recorded video track into a composition.
            AVAsset *asset = [AVAsset assetWithURL:outputFileURL];
            AVAssetTrack *videoAssetTrack = [[asset tracksWithMediaType:AVMediaTypeVideo] objectAtIndex:0];
            AVMutableComposition *mixComposition = [[AVMutableComposition alloc] init];
            AVMutableCompositionTrack *track = [mixComposition addMutableTrackWithMediaType:AVMediaTypeVideo
                                                                           preferredTrackID:kCMPersistentTrackID_Invalid];
            [track insertTimeRange:CMTimeRangeMake(kCMTimeZero, asset.duration)
                           ofTrack:videoAssetTrack
                            atTime:kCMTimeZero
                             error:nil];

            // Export destination: Documents/<executable name><counter>.mov
            NSArray *paths = NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES);
            NSString *documentsDirectory = [paths objectAtIndex:0];
            NSString *myPathDocs = [documentsDirectory stringByAppendingPathComponent:
                [NSString stringWithFormat:@"%@%d.mov", NSBundle.mainBundle.infoDictionary[@"CFBundleExecutable"], ++videoCounter]];
            [[NSFileManager defaultManager] removeItemAtPath:myPathDocs error:nil];
            NSURL *url = [NSURL fileURLWithPath:myPathDocs];

            AVMutableVideoCompositionInstruction *instruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];
            instruction.timeRange = CMTimeRangeMake(kCMTimeZero, asset.duration);
            AVMutableVideoCompositionLayerInstruction *layerInstruction =
                [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:track];

            // Derive the recording orientation from the track's preferred transform.
            UIImageOrientation videoAssetOrientation_ = UIImageOrientationUp;
            BOOL isVideoAssetPortrait_ = NO;
            CGAffineTransform videoTransform = videoAssetTrack.preferredTransform;
            if (videoTransform.a == 0 && videoTransform.b == 1.0 && videoTransform.c == -1.0 && videoTransform.d == 0) {
                videoAssetOrientation_ = UIImageOrientationRight;
                isVideoAssetPortrait_ = YES;
                if ([[[NSUserDefaults standardUserDefaults] stringForKey:@"orientation"] isEqualToString:@"landscape"]) {
                    videoAssetOrientation_ = UIImageOrientationUp;
                }
            }
            if (videoTransform.a == 0 && videoTransform.b == -1.0 && videoTransform.c == 1.0 && videoTransform.d == 0) {
                videoAssetOrientation_ = UIImageOrientationLeft;
                isVideoAssetPortrait_ = YES;
            }
            if (videoTransform.a == 1.0 && videoTransform.b == 0 && videoTransform.c == 0 && videoTransform.d == 1.0) {
                videoAssetOrientation_ = UIImageOrientationUp;
            }
            if (videoTransform.a == -1.0 && videoTransform.b == 0 && videoTransform.c == 0 && videoTransform.d == -1.0) {
                videoAssetOrientation_ = UIImageOrientationDown;
            }

            // Portrait recordings have width and height swapped.
            CGSize naturalSize;
            if (isVideoAssetPortrait_) {
                naturalSize = CGSizeMake(videoAssetTrack.naturalSize.height, videoAssetTrack.naturalSize.width);
            } else {
                naturalSize = videoAssetTrack.naturalSize;
            }

            // Square output unless the 16:9 ratio is selected.
            float renderWidth, renderHeight;
            if (![self.ratioLabel.text isEqualToString:@"16:9"]) {
                renderWidth = naturalSize.width;
                renderHeight = naturalSize.width;
            } else {
                renderWidth = naturalSize.width;
                renderHeight = naturalSize.height;
            }
            NSLog(@"Video:: width=%f height=%f", naturalSize.width, naturalSize.height);

            if (![self.ratioLabel.text isEqualToString:@"16:9"])
            {
                // Square: rotate 90 degrees and shift to centre the crop.
                CGAffineTransform t1 = CGAffineTransformMakeTranslation(videoAssetTrack.naturalSize.height,
                    -(videoAssetTrack.naturalSize.width - videoAssetTrack.naturalSize.height) / 2);
                CGAffineTransform t2 = CGAffineTransformRotate(t1, M_PI_2);
                [layerInstruction setTransform:t2 atTime:kCMTimeZero];
            }
            else
            {
                // 16:9: rotate 90 degrees only.
                CGAffineTransform t2 = CGAffineTransformMakeRotation(M_PI_2);
                [layerInstruction setTransform:t2 atTime:kCMTimeZero];
            }

            AVCaptureDevicePosition position = [[VideoInputDevice device] position];
            if (position == AVCaptureDevicePositionFront)
            {
                /* For front camera only: mirror and rotate 90 degrees */
                CGAffineTransform t = CGAffineTransformMakeScale(-1.0f, 1.0f);
                t = CGAffineTransformTranslate(t, -videoAssetTrack.naturalSize.width, 0);
                t = CGAffineTransformRotate(t, DEGREES_TO_RADIANS(90.0));
                t = CGAffineTransformTranslate(t, 0.0f, -videoAssetTrack.naturalSize.width);
                [layerInstruction setTransform:t atTime:kCMTimeZero];
            }

            // Hide the layer once the clip ends.
            [layerInstruction setOpacity:0.0 atTime:asset.duration];
            instruction.layerInstructions = @[layerInstruction];

            AVMutableVideoComposition *mainCompositionInst = [AVMutableVideoComposition videoComposition];
            mainCompositionInst.renderSize = CGSizeMake(renderWidth, renderHeight);
            mainCompositionInst.instructions = @[instruction];
            mainCompositionInst.frameDuration = CMTimeMake(1, 30); // 30 fps

            AVAssetExportSession *exporter = [[AVAssetExportSession alloc] initWithAsset:mixComposition
                                                                              presetName:AVAssetExportPreset1280x720];
            exporter.videoComposition = mainCompositionInst;
            exporter.outputURL = url;
            exporter.outputFileType = AVFileTypeQuickTimeMovie;
            exporter.shouldOptimizeForNetworkUse = YES;
            [exporter exportAsynchronouslyWithCompletionHandler:^{
                dispatch_async(dispatch_get_main_queue(), ^{
                    self.doneButton.userInteractionEnabled = YES;
                    if (videoAddr == nil)
                    {
                        videoAddr = [[NSMutableArray alloc] init];
                    }
                    [videoAddr addObject:exporter.outputURL];
                    [[PreviewLayer connection] setEnabled:YES];

                    // Subtract this clip (counted as at most 3 s) from the remaining time.
                    AVAsset *exportedAsset = [AVAsset assetWithURL:exporter.outputURL];
                    NSLog(@"remaining seconds before:%f", lastSecond);
                    double assetDuration = CMTimeGetSeconds(exportedAsset.duration);
                    if (assetDuration > 3.0)
                        assetDuration = 3.0;
                    lastSecond = lastSecond - assetDuration;
                    NSLog(@"remaining seconds after:%f", lastSecond);
                    self.secondsLabel.text = [NSString stringWithFormat:@"%0.1fs", lastSecond];
                    self.secondsLabel.hidden = NO;

                    // Persist the list of exported clip URLs.
                    NSData *data = [NSKeyedArchiver archivedDataWithRootObject:videoAddr];
                    [[NSUserDefaults standardUserDefaults] setObject:data forKey:@"videoAddr"];
                    [[NSUserDefaults standardUserDefaults] synchronize];

                    videoURL = outputFileURL;
                    flagAutorotate = NO;
                    self.cancelButton.hidden = self.doneButton.hidden = NO;
                    imgCancel.hidden = imgDone.hidden = NO;
                    if ([[NSUserDefaults standardUserDefaults] boolForKey:@"Vibration"])
                        AudioServicesPlayAlertSound(kSystemSoundID_Vibrate);
                    [[UIApplication sharedApplication] endIgnoringInteractionEvents];
                });
            }];
        }
        else
        {
            UIAlertView *alert = [[UIAlertView alloc] initWithTitle:@"Error"
                                                            message:@"Video can not be saved\nPlease free some storage space"
                                                           delegate:self
                                                  cancelButtonTitle:nil
                                                  otherButtonTitles:nil];
            [alert show];
            // Dismiss the alert automatically after 2 seconds.
            dispatch_after(dispatch_time(DISPATCH_TIME_NOW, (int64_t)(2.0 * NSEC_PER_SEC)), dispatch_get_main_queue(), ^{
                [alert dismissWithClickedButtonIndex:0 animated:YES];
            });
        }
    }
}
```
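The orientation checks in the method compare the four entries `a`, `b`, `c`, `d` of the track's `preferredTransform` against the right-angle rotations, then pick the render size from the (possibly swapped) natural size. To experiment with that mapping outside AVFoundation, here is a minimal sketch in plain C (which also compiles as Objective-C). The `TransformABCD`, `Orientation` and `Size` types and the function names are hypothetical stand-ins for `CGAffineTransform`, `UIImageOrientation` and `CGSize`, and the user-defaults "landscape" override is deliberately left out.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical stand-in for the a, b, c, d entries of CGAffineTransform. */
typedef struct { double a, b, c, d; } TransformABCD;

/* Hypothetical stand-in for the UIImageOrientation values used in the method. */
typedef enum { ORIENT_UP, ORIENT_DOWN, ORIENT_LEFT, ORIENT_RIGHT } Orientation;

/* Hypothetical stand-in for CGSize. */
typedef struct { double width, height; } Size;

/* Same checks as the delegate method: a +90 or -90 degree rotation means the
   clip was recorded in portrait; anything unrecognised is treated as Up. */
Orientation orientation_for(TransformABCD t, bool *is_portrait) {
    *is_portrait = false;
    if (t.a == 0 && t.b == 1.0 && t.c == -1.0 && t.d == 0) {
        *is_portrait = true;
        return ORIENT_RIGHT;
    }
    if (t.a == 0 && t.b == -1.0 && t.c == 1.0 && t.d == 0) {
        *is_portrait = true;
        return ORIENT_LEFT;
    }
    if (t.a == -1.0 && t.b == 0 && t.c == 0 && t.d == -1.0) {
        return ORIENT_DOWN;
    }
    return ORIENT_UP;
}

/* Swap width/height for portrait clips, then render either a square
   (width x width) or the full frame when 16:9 is selected. */
Size render_size(Size natural, bool is_portrait, bool is_16x9) {
    assert(natural.width > 0 && natural.height > 0);
    Size display = is_portrait ? (Size){natural.height, natural.width} : natural;
    return (Size){display.width, is_16x9 ? display.height : display.width};
}
```

For an assumed 1280×720 track carrying the +90 degree transform `{0, 1, -1, 0}`, `orientation_for` reports a portrait clip, and `render_size` gives 720×720 in square mode or 720×1280 when 16:9 is selected.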

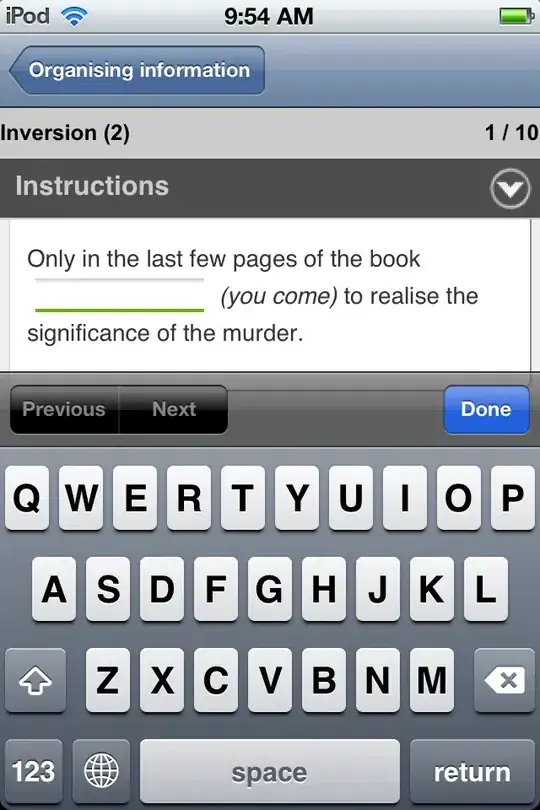

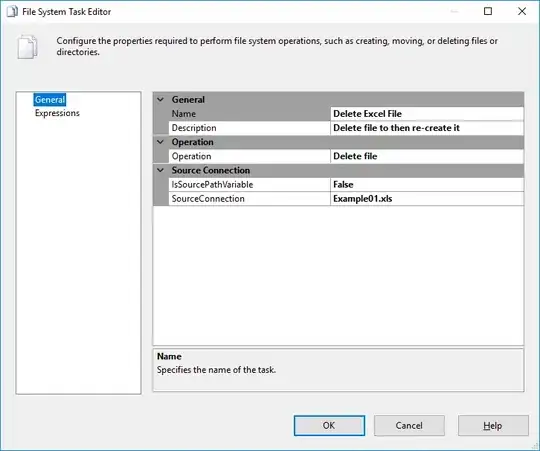

But here Is the issue.

Video is not being recorded exactly shown in preview.

See these 2 screenShots.

Video recording preview

Video Playing View
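Since the mismatch shows up in the framing, one way to narrow it down is to check where the layer-instruction transform actually places the recorded frame relative to the render rectangle. The sketch below is plain C under stated assumptions, not AVFoundation code: the `Affine` and `Rect` types and all helper names are hypothetical; it mirrors the documented `CGAffineTransform` point mapping (`x' = a*x + c*y + tx`, `y' = b*x + d*y + ty`) and the fact that `CGAffineTransformRotate`/`CGAffineTransformTranslate` apply the new operation before the existing transform. It assumes `DEGREES_TO_RADIANS(90.0)` equals π/2 and uses an exact 90 degree rotation (cos = 0, sin = 1) instead of `cos`/`sin`, so the numbers are free of rounding.

```c
#include <assert.h>

/* Hypothetical mirror of CGAffineTransform: x' = a*x + c*y + tx, y' = b*x + d*y + ty. */
typedef struct { double a, b, c, d, tx, ty; } Affine;
/* Axis-aligned bounds of the transformed frame. */
typedef struct { double minX, minY, maxX, maxY; } Rect;

Affine make_translation(double tx, double ty) { return (Affine){1, 0, 0, 1, tx, ty}; }
Affine make_scale(double sx, double sy) { return (Affine){sx, 0, 0, sy, 0, 0}; }
/* Exact +90 degree rotation, i.e. CGAffineTransformMakeRotation(M_PI_2) without rounding. */
Affine make_rotation_90(void) { return (Affine){0, 1, -1, 0, 0, 0}; }

/* concat(t1, t2): apply t1 first, then t2 (the CGAffineTransformConcat order). */
Affine concat(Affine t1, Affine t2) {
    return (Affine){
        t1.a * t2.a + t1.b * t2.c,
        t1.a * t2.b + t1.b * t2.d,
        t1.c * t2.a + t1.d * t2.c,
        t1.c * t2.b + t1.d * t2.d,
        t1.tx * t2.a + t1.ty * t2.c + t2.tx,
        t1.tx * t2.b + t1.ty * t2.d + t2.ty,
    };
}

/* Map the four corners of a w x h frame and return their bounding box. */
Rect transformed_bounds(Affine t, double w, double h) {
    const double xs[4] = {0, w, 0, w};
    const double ys[4] = {0, 0, h, h};
    Rect r = {0, 0, 0, 0};
    for (int i = 0; i < 4; i++) {
        double x = t.a * xs[i] + t.c * ys[i] + t.tx;
        double y = t.b * xs[i] + t.d * ys[i] + t.ty;
        if (i == 0 || x < r.minX) r.minX = x;
        if (i == 0 || x > r.maxX) r.maxX = x;
        if (i == 0 || y < r.minY) r.minY = y;
        if (i == 0 || y > r.maxY) r.maxY = y;
    }
    return r;
}

/* Square branch: CGAffineTransformRotate(CGAffineTransformMakeTranslation(h, -(w - h) / 2), M_PI_2). */
Affine square_mode_transform(double w, double h) {
    return concat(make_rotation_90(), make_translation(h, -(w - h) / 2));
}

/* Front-camera chain: scale(-1, 1), translate(-w, 0), rotate 90, translate(0, -w). */
Affine front_camera_transform(double w) {
    Affine t = make_scale(-1.0, 1.0);
    t = concat(make_translation(-w, 0), t);
    t = concat(make_rotation_90(), t);
    t = concat(make_translation(0, -w), t);
    return t;
}
```

With an assumed 1280×720 natural size, the square-mode transform puts the frame at x from 0 to 720 and y from -280 to 1000, a vertically centred 720×720 crop, while the front-camera chain puts it at x from 0 to 720 and y from 0 to 1280, with no centring offset. Comparing numbers like these with the region the preview layer actually displays is one way to find where the two diverge.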