I don't understand why there would be problems without the release sequence rule when there are two consumer threads, as in the example below. There are only two operations on the atomic variable count (the store and the fetch_sub), and count is decremented sequentially, as the output shows.

From C++ Concurrency in Action by Anthony Williams:

I mentioned that you could get a synchronizes-with relationship between a store to an atomic variable and a load of that atomic variable from another thread, even when there’s a sequence of read-modify-write operations between the store and the load, provided all the operations are suitably tagged. If the store is tagged with memory_order_release, memory_order_acq_rel, or memory_order_seq_cst, and the load is tagged with memory_order_consume, memory_order_acquire, or memory_order_seq_cst, and each operation in the chain loads the value written by the previous operation, then the chain of operations constitutes a release sequence and the initial store synchronizes-with (for memory_order_acquire or memory_order_seq_cst) or is dependency-ordered-before (for memory_order_consume) the final load. Any atomic read-modify-write operations in the chain can have any memory ordering (even memory_order_relaxed).

To see what this means (release sequence) and why it’s important, consider an atomic<int> being used as a count of the number of items in a shared queue, as in the following listing.

One way to handle things would be to have the thread that’s producing the data store the items in a shared buffer and then do count.store(number_of_items, memory_order_release) #1 to let the other threads know that data is available. The threads consuming the queue items might then do count.fetch_sub(1, memory_order_acquire) #2 to claim an item from the queue, prior to actually reading the shared buffer #4. Once the count becomes zero, there are no more items, and the thread must wait #3.

    #include <atomic>
    #include <chrono>   // std::chrono::milliseconds, used by sleep_for below
    #include <thread>
    #include <vector>
    #include <iostream>
    #include <mutex>

    std::vector<int> queue_data;
    std::atomic<int> count;
    std::mutex m;

    // Prints one item; the mutex only keeps the output lines from interleaving.
    void process(int i)
    {
        std::lock_guard<std::mutex> lock(m);
        std::cout << "id " << std::this_thread::get_id() << ": " << i << std::endl;
    }

    void populate_queue()
    {
        unsigned const number_of_items = 20;
        queue_data.clear();
        for (unsigned i = 0; i < number_of_items; ++i)
        {
            queue_data.push_back(i);
        }
        count.store(number_of_items, std::memory_order_release); //#1 The initial store
    }

    void consume_queue_items()
    {
        while (true)
        {
            int item_index;
            if ((item_index = count.fetch_sub(1, std::memory_order_acquire)) <= 0) //#2 An RMW operation
            {
                std::this_thread::sleep_for(std::chrono::milliseconds(500)); //#3
                continue;
            }
            process(queue_data[item_index - 1]); //#4 Reading queue_data is safe
        }
    }

    int main()
    {
        std::thread a(populate_queue);
        std::thread b(consume_queue_items);
        std::thread c(consume_queue_items);
        a.join();
        b.join();  // the consumers loop forever, so these joins never return
        c.join();
    }

Output (VS2015):

    id 6836: 19
    id 6836: 18
    id 6836: 17
    id 6836: 16
    id 6836: 14
    id 6836: 13
    id 6836: 12
    id 6836: 11
    id 6836: 10
    id 6836: 9
    id 6836: 8
    id 13740: 15
    id 13740: 6
    id 13740: 5
    id 13740: 4
    id 13740: 3
    id 13740: 2
    id 13740: 1
    id 13740: 0
    id 6836: 7

If there’s one consumer thread, this is fine; the fetch_sub() is a read, with memory_order_acquire semantics, and the store had memory_order_release semantics, so the store synchronizes-with the load and the thread can read the item from the buffer.

If there are two threads reading, the second fetch_sub() will see the value written by the first and not the value written by the store. Without the rule about the release sequence, this second thread wouldn’t have a happens-before relationship with the first thread, and it wouldn’t be safe to read the shared buffer unless the first fetch_sub() also had memory_order_release semantics, which would introduce unnecessary synchronization between the two consumer threads. Without the release sequence rule or memory_order_release on the fetch_sub operations, there would be nothing to require that the stores to the queue_data were visible to the second consumer, and you would have a data race.
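The chain described in the passage can be sketched in isolation. This is a minimal illustration, not code from the book: run_chain, payload, and flag are hypothetical names. A release store is followed by a relaxed read-modify-write in a second thread, and a third thread's acquire load reads the value written by that read-modify-write. Under the release sequence rule, the store still synchronizes-with the final load, so the plain read of payload is safe.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// Hypothetical helper for illustration; names are not from the book.
int run_chain()
{
    int payload = 0;             // plain, non-atomic data
    std::atomic<int> flag{0};
    int seen = -1;

    std::thread writer([&] {
        payload = 42;                               // plain write
        flag.store(1, std::memory_order_release);   // head of the release sequence
    });

    std::thread middle([&] {
        int expected = 1;
        // Relaxed RMW: it reads the value written by the store (1) and
        // replaces it with 2, continuing the release sequence.
        while (!flag.compare_exchange_weak(expected, 2, std::memory_order_relaxed)) {
            expected = 1;
            std::this_thread::yield();
        }
    });

    std::thread reader([&] {
        // The acquire load only proceeds once it reads the RMW's value (2),
        // never the value written directly by the store.
        while (flag.load(std::memory_order_acquire) != 2)
            std::this_thread::yield();
        seen = payload;   // safe: the release store synchronizes-with this load
    });

    writer.join();
    middle.join();
    reader.join();
    return seen;
}
```

Calling run_chain() from main should return 42 on every run. The same program is correct only because of the release sequence rule; the relaxed compare-exchange by itself provides no ordering between middle and reader.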

What does he mean? That both threads should see count as 20? But in my output, count is decremented sequentially across the threads.
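One possible reading, offered as a sketch rather than a definitive answer: the sequential decrement in the output comes from the atomicity of read-modify-write operations, which holds for every memory ordering, while the quoted passage is about whether the writes to queue_data are visible to the thread that claims an index. The hypothetical function below (each_index_claimed_once is not from the book) uses memory_order_relaxed on fetch_sub and still hands out every index exactly once. It deliberately does not touch any shared buffer, since relaxed ordering alone would not make such reads safe.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical check for illustration: each worker claims indices with a
// relaxed fetch_sub and records them in its own private list.
bool each_index_claimed_once(int items, int workers)
{
    std::atomic<int> counter{items};
    std::vector<std::vector<int>> claimed(workers);   // one list per worker
    std::vector<std::thread> pool;

    for (int w = 0; w < workers; ++w) {
        pool.emplace_back([&counter, &claimed, w] {
            for (;;) {
                // Atomic RMW: no two threads can read the same previous value,
                // regardless of the memory ordering used.
                int previous = counter.fetch_sub(1, std::memory_order_relaxed);
                if (previous <= 0)
                    break;
                claimed[w].push_back(previous - 1);
            }
        });
    }
    for (auto& t : pool)
        t.join();

    // Tally after all workers have finished: every index must appear once.
    std::vector<int> hits(items, 0);
    for (const auto& list : claimed)
        for (int index : list)
            ++hits[index];
    for (int h : hits)
        if (h != 1)
            return false;
    return true;
}
```

So a counter that decrements one step at a time does not by itself show that the reads of the buffer are ordered after the producer's writes; that ordering is what the release sequence rule supplies.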

Thankfully, the first

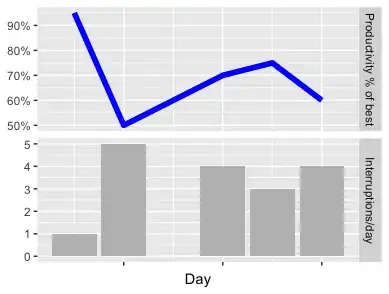

fetch_sub()does participate in the release sequence, and so thestore()synchronizes-with the secondfetch_sub(). There’s still no synchronizes-with relationship between the two consumer threads. This is shown in figure 5.7. The dotted lines in figure 5.7 show the release sequence, and the solid lines show thehappens-before relationships
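The two-consumer situation can also be checked with a terminating variant of the listing. This is a sketch under assumptions: consume_all, consumed, and total are hypothetical additions that let the consumers stop and let the result be verified; the store and fetch_sub keep the orderings from the book's listing.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical terminating variant of the listing; returns the sum of all
// items read by the two consumers.
long consume_all(unsigned number_of_items)
{
    std::vector<int> queue_data;
    std::atomic<int> count{0};
    std::atomic<unsigned> consumed{0};   // assumption: added so consumers can stop
    std::atomic<long> total{0};          // assumption: added to verify the reads

    auto consumer = [&] {
        while (consumed.load(std::memory_order_relaxed) < number_of_items) {
            int item_index = count.fetch_sub(1, std::memory_order_acquire);
            if (item_index <= 0) {       // nothing to claim yet (or any more)
                std::this_thread::yield();
                continue;
            }
            // Safe even when this fetch_sub read a value written by the other
            // consumer's fetch_sub: both belong to the release sequence
            // headed by the producer's store.
            total.fetch_add(queue_data[item_index - 1], std::memory_order_relaxed);
            consumed.fetch_add(1, std::memory_order_relaxed);
        }
    };

    std::thread b(consumer);
    std::thread c(consumer);
    std::thread a([&] {
        for (unsigned i = 0; i < number_of_items; ++i)
            queue_data.push_back(static_cast<int>(i));
        count.store(static_cast<int>(number_of_items), std::memory_order_release);
    });

    a.join();
    b.join();
    c.join();
    return total.load();
}
```

For 20 items holding the values 0 through 19, consume_all(20) should return 190 no matter how the items are split between the two consumers.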