Given a set of N bounding boxes with vertex coordinates:

"vertices": [

{

"y": 486,

"x": 336

},

{

"y": 486,

"x": 2235

},

{

"y": 3393,

"x": 2235

},

{

"y": 3393,

"x": 336

}

]

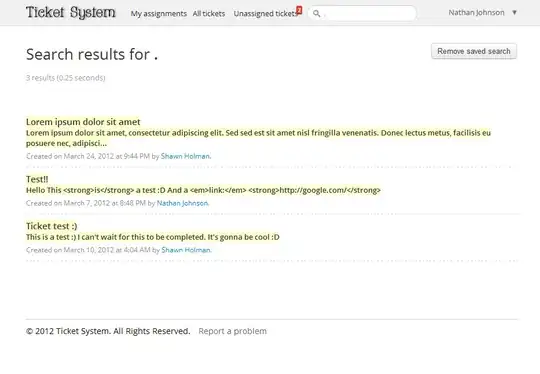

I would like to group the bounding boxes into rows. In other words, given the pictorial representation of bounding boxes in this image:

I would like an algorithm that returns:

[1,2,3]

[4,5,6]

[7,8]

[Edit: Clarification] The grouping decisions (e.g. [4,5,6] and [7,8]) should be based on some kind of error minimisation such as least squares.

Is there an algorithm or library (preferably in python) that does this?