I have many data and I have experimented with partitions of cardinality [20k, 200k+].

I call it like that:

from pyspark.mllib.clustering import KMeans, KMeansModel

C0 = KMeans.train(first, 8192, initializationMode='random', maxIterations=10, seed=None)

C0 = KMeans.train(second, 8192, initializationMode='random', maxIterations=10, seed=None)

and I see that initRandom() calls takeSample() once.

Then the takeSample() implementation doesn't seem to call itself or something like that, so I would expect KMeans() to call takeSample() once. So why the monitor shows two takeSample()s per KMeans()?

Note: I execute more KMeans() and they all invoke two takeSample()s, regardless of the data being .cache()'d or not.

Moreover, the number of partitions doesn't affect the number takeSample() is called, it's constant to 2.

I am using Spark 1.6.2 (and I cannot upgrade) and my application is in Python, if that matters!

I brought this to the mailing list of the Spark devs, so I am updating:

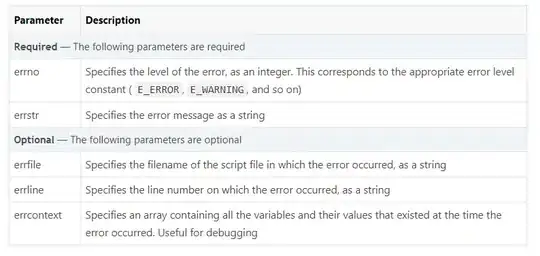

Details of 1st takeSample():

Details of 2nd takeSample():

where one can see that the same code is executed.