KMeans has several parameters for its training, with the initialization mode defaulting to k-means||. The problem is that it marches quickly (in less than 10 minutes) through the first 13 stages, but then hangs completely, without yielding an error!

Minimal example that reproduces the issue (it succeeds if I use 1000 points or random initialization):

    from pyspark.context import SparkContext
    from pyspark.mllib.clustering import KMeans
    from pyspark.mllib.random import RandomRDDs

    if __name__ == "__main__":
        sc = SparkContext(appName='kmeansMinimalExample')
        # same with 10000 points
        data = RandomRDDs.uniformVectorRDD(sc, 10000000, 64)
        C = KMeans.train(data, 8192, maxIterations=10)
        sc.stop()

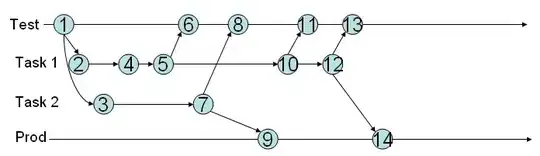

The job does nothing (it doesn't succeed, fail, or progress), as shown below. There are no active/failed tasks in the Executors tab. The stdout and stderr logs don't contain anything particularly interesting.

If I use k=81 instead of 8192, it succeeds.

Note that the two calls to takeSample() should not be an issue, since it was also called twice in the random initialization case.
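
For reference, the random initialization run differs from the minimal example only in one keyword argument. A minimal sketch, assuming the `initializationMode` parameter of `pyspark.mllib.clustering.KMeans.train`; the wrapper name `train_random_init` is hypothetical and only there to keep the call in one place:

```python
def train_random_init(data, k, max_iterations=10):
    """Train KMeans with random initialization instead of the default k-means||.

    `data` is expected to be an RDD of vectors, e.g. the result of
    RandomRDDs.uniformVectorRDD(sc, 10000000, 64) from the minimal example.
    """
    # Imported here so the helper can be defined without a running Spark setup.
    from pyspark.mllib.clustering import KMeans

    return KMeans.train(
        data,
        k,
        maxIterations=max_iterations,
        initializationMode="random",  # the default is "k-means||"
    )
```

In the minimal example this would replace the `KMeans.train(data, 8192, maxIterations=10)` line with `train_random_init(data, 8192)`.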

So, what is happening? Is Spark's KMeans unable to scale? Does anybody know? Can you reproduce it?

If it were a memory issue, I would get warnings and errors, as I did before.
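
To put rough numbers on the sizes involved, here is a back-of-envelope sketch using only the figures from the minimal example. It assumes 8 bytes per vector component and counts raw payload only (no JVM object overhead, no serialization), and it counts point-to-center distances for a naive assignment step, which a real implementation may partly skip. It is not a diagnosis of the hang, just the scale of the problem:

```python
# Figures taken from the minimal example in the question.
N_POINTS = 10_000_000
DIM = 64
BYTES_PER_DOUBLE = 8  # assumption: each component is stored as a 64-bit float


def raw_bytes(rows, dim):
    """Raw payload of `rows` dense vectors of length `dim`, ignoring overhead."""
    return rows * dim * BYTES_PER_DOUBLE


def distances_per_iteration(n_points, k):
    """Point-to-center distances in one naive assignment step."""
    return n_points * k


print("data:", raw_bytes(N_POINTS, DIM) / 1e9, "GB")
for k in (81, 8192):
    print(
        "k =", k,
        "| centers:", raw_bytes(k, DIM), "bytes",
        "| distances per iteration:", distances_per_iteration(N_POINTS, k),
    )
```

The data itself is the same in both runs; what changes between k=81 and k=8192 is the number of centers, and with it the per-iteration work, by a factor of about 100.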

Note: placeybordeaux's comments are based on running the job in client mode, where the driver's configuration is invalidated, causing exit code 143 and the like (see edit history). They do not apply to cluster mode, where no error is reported at all; the application just hangs.

From zero323: "Why is Spark Mllib KMeans algorithm extremely slow?" is related, but I think he sees some progress, while mine hangs. I did leave a comment...