Enhancing dynamic range and normalizing illumination

The point is to first normalize the background to a uniform color. There are many ways to do this; here is what I tried for your image:

1. Create a paper/ink cell table for the image (in the same manner as in the linked answer). Select a grid cell size big enough to distinguish character features from the background; for your image I chose 8x8 pixels. Divide the image into squares and compute, for each of them, the average color and the average absolute difference from that color. Then mark the saturated ones (small absolute difference) and classify them as paper or ink cells by comparing their average color with the average color of the whole image.

2. Now process each line of the image, and for each pixel find the nearest paper cells to its left and right and linearly interpolate between their colors. That gives the actual background color at that pixel, so just subtract it from the image.
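The two steps above can be sketched on a plain grayscale buffer. This is a simplified, standalone illustration, not the `picture::normalize` implementation: the `Gray` struct, `paperCells` and `subtractBackground` are hypothetical names, a cell counts as paper simply when its average absolute deviation is at most the mean deviation over all cells (ink-cell cleanup and multi-channel colors are omitted), and pixels left of the first or right of the last paper cell in a row reuse that cell's average.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <vector>

// Hypothetical grayscale image: row-major int pixels (e.g. 0..765).
struct Gray {
    int w = 0, h = 0;
    std::vector<int> px;
    int& at(int x, int y) { return px[y * w + x]; }
};

// Step 1: per-cell average and average absolute deviation from it.
// A cell is "paper" when its deviation is <= the mean deviation of all cells.
// Fills `mean` and returns the paper flags (both row-major, tys x txs).
std::vector<bool> paperCells(Gray& g, int sz, std::vector<int>& mean) {
    assert(sz > 0);
    const int txs = g.w / sz, tys = g.h / sz, n = sz * sz;
    mean.assign(txs * tys, 0);
    std::vector<int> dev(txs * tys, 0);
    std::vector<bool> paper(txs * tys, false);
    if (txs * tys == 0) return paper;
    long long devSum = 0;
    for (int ty = 0; ty < tys; ++ty)
        for (int tx = 0; tx < txs; ++tx) {
            long long s = 0;
            for (int y = ty * sz; y < (ty + 1) * sz; ++y)
                for (int x = tx * sz; x < (tx + 1) * sz; ++x) s += g.at(x, y);
            const int m = static_cast<int>(s / n);
            long long d = 0;
            for (int y = ty * sz; y < (ty + 1) * sz; ++y)
                for (int x = tx * sz; x < (tx + 1) * sz; ++x) d += std::abs(g.at(x, y) - m);
            mean[ty * txs + tx] = m;
            dev[ty * txs + tx] = static_cast<int>(d / n);
            devSum += d / n;
        }
    const long long dmax = devSum / (txs * tys);   // average deviation = "safe" limit
    for (int i = 0; i < txs * tys; ++i) paper[i] = dev[i] <= dmax;
    return paper;
}

// Step 2: for each pixel row, linearly interpolate the background between the
// nearest paper cells on the left and right and subtract it from the pixel.
void subtractBackground(Gray& g, int sz) {
    std::vector<int> mean;
    const std::vector<bool> paper = paperCells(g, sz, mean);
    const int txs = g.w / sz, tys = g.h / sz;
    if (txs == 0 || tys == 0) return;
    for (int y = 0; y < g.h; ++y) {
        const int ty = std::min(y / sz, tys - 1);
        std::vector<int> cells;                     // paper cell columns in this cell row
        for (int tx = 0; tx < txs; ++tx)
            if (paper[ty * txs + tx]) cells.push_back(tx);
        if (cells.empty()) continue;                // no paper reference: leave row as is
        const int* bg = &mean[ty * txs];            // cell averages of this cell row
        std::size_t k = 0;                          // first paper cell whose left edge is > x
        for (int x = 0; x < g.w; ++x) {
            while (k < cells.size() && cells[k] * sz <= x) ++k;
            int b;
            if (k == 0) b = bg[cells.front()];                  // left of first paper cell
            else if (k == cells.size()) b = bg[cells.back()];   // right of last paper cell
            else {                                               // between two paper cells
                const int x0 = cells[k - 1] * sz, x1 = cells[k] * sz;
                const int b0 = bg[cells[k - 1]], b1 = bg[cells[k]];
                b = b0 + (b1 - b0) * (x - x0) / (x1 - x0);
            }
            g.at(x, y) -= b;   // paper -> about 0, ink darker than paper -> negative
        }
    }
}
```

Like the C++ code in this answer, the sketch anchors each cell's average at the cell's left edge; anchoring at cell centers is an equally reasonable choice. The result is signed, so clamp or offset it before storing it back into an 8-bit image.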

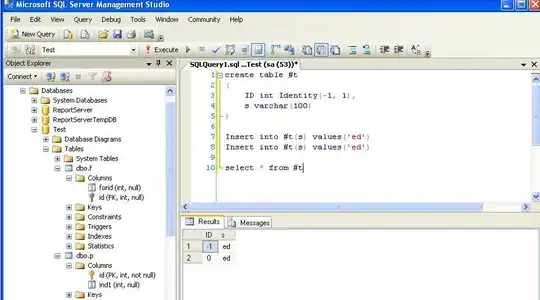

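My C++ implementation looks like this (it is a member of my `picture` class, which provides the pixel array `p[ys][xs]`, the pixel format `pf` and the `dec_color`/`enc_color` helpers):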

```cpp
color picture::normalize(int sz,bool _recolor,bool _sbstract)
    {
    // one grid cell: avg color, avg channels .a[], avg abs deviation .da, paper flag
    struct _cell { color col; int a[4],da,_paper; };
    int i,x,y,tx,ty,txs,tys,a0[4],a1[4],n,dmax;
    int x0,x1,y0,y1;
    color c;
    _cell **tab;
    // allocate grid table
    txs=xs/sz; tys=ys/sz; n=sz*sz; c.dd=0;
    if ((txs<2)||(tys<2)) return c;
    tab=new _cell*[tys]; for (ty=0;ty<tys;ty++) tab[ty]=new _cell[txs];
    // compute grid table
    for (y0=0,y1=sz,ty=0;ty<tys;ty++,y0=y1,y1+=sz)
     for (x0=0,x1=sz,tx=0;tx<txs;tx++,x0=x1,x1+=sz)
        {
        for (i=0;i<4;i++) a0[i]=0;
        for (y=y0;y<y1;y++)
         for (x=x0;x<x1;x++)
            {
            dec_color(a1,p[y][x],pf);
            for (i=0;i<4;i++) a0[i]+=a1[i];
            }
        for (i=0;i<4;i++) tab[ty][tx].a[i]=a0[i]/n;
        enc_color(tab[ty][tx].a,tab[ty][tx].col,pf);
        tab[ty][tx].da=0;
        for (i=0;i<4;i++) a0[i]=tab[ty][tx].a[i];
        for (y=y0;y<y1;y++)
         for (x=x0;x<x1;x++)
            {
            dec_color(a1,p[y][x],pf);
            for (i=0;i<4;i++) tab[ty][tx].da+=abs(a1[i]-a0[i]);
            }
        tab[ty][tx].da/=n;
        }
    // compute max safe delta dmax = avg(delta)
    for (dmax=0,ty=0;ty<tys;ty++)
     for (tx=0;tx<txs;tx++)
      dmax+=tab[ty][tx].da;
    dmax/=(txs*tys);
    // select paper cells and compute avg paper color
    for (i=0;i<4;i++) a0[i]=0; x0=0;
    for (ty=0;ty<tys;ty++)
     for (tx=0;tx<txs;tx++)
      if (tab[ty][tx].da<=dmax)
        {
        tab[ty][tx]._paper=1;
        for (i=0;i<4;i++) a0[i]+=tab[ty][tx].a[i];
        x0++;
        }
      else tab[ty][tx]._paper=0;
    if (x0) for (i=0;i<4;i++) a0[i]/=x0;
    enc_color(a0,c,pf);
    // remove saturated ink cells from paper (small .da but wrong .a[])
    for (ty=1;ty<tys-1;ty++)
     for (tx=1;tx<txs-1;tx++)
      if (tab[ty][tx]._paper==1)
       if ((tab[ty][tx-1]._paper==0)
         ||(tab[ty][tx+1]._paper==0)
         ||(tab[ty-1][tx]._paper==0)
         ||(tab[ty+1][tx]._paper==0))
        {
        x=0; for (i=0;i<4;i++) x+=abs(tab[ty][tx].a[i]-a0[i]);
        if (x>dmax) tab[ty][tx]._paper=2;
        }
    for (ty=0;ty<tys;ty++)
     for (tx=0;tx<txs;tx++)
      if (tab[ty][tx]._paper==2)
       tab[ty][tx]._paper=0;
    // piecewise linear interpolation H-lines
    int tx0,tx1,d;
    if (_sbstract) for (i=0;i<4;i++) a0[i]=0; // background -> 0, else -> avg paper color
    for (y=0;y<ys;y++)
        {
        ty=y/sz; if (ty>=tys) ty=tys-1;
        // first paper cell
        for (tx=0;(tx<txs)&&(!tab[ty][tx]._paper);tx++);
        if (tx>=txs) continue; // no paper cell found
        // pixels left of the first paper cell use its color (tx0==tx1 -> constant)
        tx0=tx1=tx; x0=0; x1=tx*sz; d=1;
        for (;;)
            {
            // subtract background interpolated between cells tx0,tx1 over <x0,x1)
            for (x=x0;x<x1;x++)
                {
                dec_color(a1,p[y][x],pf);
                for (i=0;i<4;i++) a1[i]-=tab[ty][tx0].a[i]+(((tab[ty][tx1].a[i]-tab[ty][tx0].a[i])*(x-x0))/d)-a0[i];
                if (pf==_pf_s ) for (i=0;i<1;i++) clamp_s32(a1[i]);
                if (pf==_pf_u ) for (i=0;i<1;i++) clamp_u32(a1[i]);
                if (pf==_pf_ss ) for (i=0;i<2;i++) clamp_s16(a1[i]);
                if (pf==_pf_uu ) for (i=0;i<2;i++) clamp_u16(a1[i]);
                if (pf==_pf_rgba) for (i=0;i<4;i++) clamp_u8 (a1[i]);
                enc_color(a1,p[y][x],pf);
                }
            if (x1>=xs) break;
            // next paper cell; each pixel range is processed exactly once
            for (tx=tx1+1;(tx<txs)&&(!tab[ty][tx]._paper);tx++);
            tx0=tx1; x0=x1;
            if (tx<txs) { tx1=tx; x1=tx1*sz; d=x1-x0; }
            else        { x1=xs; d=1; } // no more paper cells: hold the last one up to the right edge
            }
        }
    // recolor paper cells with avg color (remove noise)
    if (_recolor)
     for (y0=0,y1=sz,ty=0;ty<tys;ty++,y0=y1,y1+=sz)
      for (x0=0,x1=sz,tx=0;tx<txs;tx++,x0=x1,x1+=sz)
       if (tab[ty][tx]._paper)
        for (y=y0;y<y1;y++)
         for (x=x0;x<x1;x++)
          p[y][x]=c;
    // free grid table
    for (ty=0;ty<tys;ty++) delete[] tab[ty]; delete[] tab;
    return c;
    }
```

See the linked answer for more details. Here is the result for your input image after switching to gray-scale <0,765> and using `pic1.normalize(8,false,true);`
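The answer does not spell out how the image is switched to gray-scale <0,765>. One plausible reading, and only an assumption here, is the plain channel sum R+G+B, since 3 × 255 = 765. The hypothetical helpers `graySum` and `grayToArgb` below show that reading for an assumed 0xAARRGGBB pixel layout; under it, the output levels 0 and 765 used in the thresholding call are pure black and pure white.

```cpp
#include <cstdint>

// Assumption: gray <0,765> = R + G + B of a packed 0xAARRGGBB pixel.
constexpr int graySum(std::uint32_t argb) {
    return static_cast<int>(((argb >> 16) & 0xFFu) + ((argb >> 8) & 0xFFu) + (argb & 0xFFu));
}

// Back to opaque ARGB: clamp to <0,765>, then give each channel v/3.
constexpr std::uint32_t grayToArgb(int v) {
    return 0xFF000000u
         | (static_cast<std::uint32_t>((v < 0 ? 0 : v > 765 ? 765 : v) / 3) * 0x010101u);
}
```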

Binarize

I first tried naive range thresholding: if all color channel values (R,G,B) are within the range <min,max>, the pixel is recolored to c1, otherwise to c0:

```cpp
void picture::treshold_AND(int min,int max,int c0,int c1) // all-channel thresholding: c1 inside <min,max>, c0 in (-inf,min)+(max,+inf)
    {
    int x,y,i,a[4],e;
    for (y=0;y<ys;y++)
     for (x=0;x<xs;x++)
        {
        dec_color(a,p[y][x],pf);
        // e stays 1 only if all R,G,B channels lie inside <min,max>
        for (e=1,i=0;i<3;i++) if ((a[i]<min)||(a[i]>max)){ e=0; break; }
        if (e) for (i=0;i<4;i++) a[i]=c1;
        else   for (i=0;i<4;i++) a[i]=c0;
        enc_color(a,p[y][x],pf);
        }
    }
```

After applying `pic1.treshold_AND(0,127,765,0);` and converting back to RGBA, I got this result:

The gray noise is due to JPEG compression (PNG would be too big). As you can see, the result is more or less acceptable.

If this is not enough, you can divide your image into segments. Compute a histogram for each segment (it should be bimodal), then find the color between the two maxima; that is your threshold value. The problem is that the background covers a much larger area, so the ink peak is relatively small and sometimes hard to spot on a linear scale; see the full image histogram:

When you do this per segment it will be much better (there is much less background/text color bleeding around the thresholds), so the gap will be more visible. Also, do not forget to ignore the small gaps (missing vertical lines in the histogram): they are just quantization/encoding/rounding artifacts (not all gray shades are present in the image), so filter out gaps narrower than a few intensities by replacing them with the average of the last and next valid histogram entries.
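The per-segment histogram approach described in the last two paragraphs can be sketched as follows. The names `segmentHistogram`, `fillSmallGaps`, `valleyThreshold` and `segmentThreshold` are hypothetical, and two choices are assumptions rather than something stated in the answer: the second peak is found with a common two-peaks heuristic (the bin maximizing h[i]·(i - p1)², which favors a peak far from the dominant background peak p1), and "the color between the two maximums" is taken as the lowest histogram bin between the peaks.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Histogram of the rectangle <x0,x1) x <y0,y1) of a row-major grayscale image
// with values in <0,maxv>; out-of-range values are ignored.
std::vector<long long> segmentHistogram(const std::vector<int>& px, int w,
                                        int x0, int y0, int x1, int y1, int maxv) {
    assert(w > 0 && 0 <= x0 && x0 <= x1 && x1 <= w && 0 <= y0 && y0 <= y1);
    std::vector<long long> h(static_cast<std::size_t>(maxv) + 1, 0);
    for (int y = y0; y < y1; ++y)
        for (int x = x0; x < x1; ++x) {
            const int v = px[static_cast<std::size_t>(y) * w + x];
            if (v >= 0 && v <= maxv) ++h[v];
        }
    return h;
}

// Replace runs of empty bins no longer than maxGap (quantization gaps) with the
// average of the last valid entry before the run and the next one after it.
// Runs touching either end of the histogram are left alone.
void fillSmallGaps(std::vector<long long>& h, std::size_t maxGap) {
    const std::size_t n = h.size();
    std::size_t i = 0;
    while (i < n) {
        if (h[i] != 0) { ++i; continue; }
        const std::size_t start = i;
        while (i < n && h[i] == 0) ++i;          // i: first non-empty bin after the run (or n)
        if (start > 0 && i < n && i - start <= maxGap) {
            const long long fill = (h[start - 1] + h[i]) / 2;
            for (std::size_t k = start; k < i; ++k) h[k] = fill;
        }
    }
}

// p1 = global maximum, p2 = argmax h[i]*(i-p1)^2, threshold = lowest bin in <p1,p2>.
int valleyThreshold(const std::vector<long long>& h) {
    if (h.empty()) return 0;
    std::size_t p1 = 0;
    for (std::size_t i = 1; i < h.size(); ++i)
        if (h[i] > h[p1]) p1 = i;
    std::size_t p2 = p1;
    double best = 0.0;
    for (std::size_t i = 0; i < h.size(); ++i) {
        const double d = double(i) - double(p1);
        const double score = double(h[i]) * d * d;
        if (score > best) { best = score; p2 = i; }
    }
    const std::size_t lo = std::min(p1, p2), hi = std::max(p1, p2);
    std::size_t t = lo;
    for (std::size_t i = lo; i <= hi; ++i)
        if (h[i] < h[t]) t = i;
    return static_cast<int>(t);
}

// Whole pipeline for one segment: histogram -> fill small gaps -> valley.
int segmentThreshold(const std::vector<int>& px, int w, int x0, int y0, int x1, int y1,
                     int maxv, std::size_t maxGap) {
    std::vector<long long> h = segmentHistogram(px, w, x0, y0, x1, y1, maxv);
    fillSmallGaps(h, maxGap);
    return valleyThreshold(h);
}
```

When the valley is a flat run of empty bins, `valleyThreshold` returns its first bin; the midpoint between the two peaks is an equally simple alternative. The segment size and `maxGap` ("a few intensities") would need tuning per image.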