In this case, outer would be a more natural choice:

outer(1:6, 1:6)
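As a quick check (a small sketch of my own, not part of the original answer), outer(1:6, 1:6) is simply the 6 x 6 multiplication table, and base R's %o% operator is shorthand for the same call:

```r
m <- outer(1:6, 1:6)

# Entry [i, j] is the product i * j, so m is the 6 x 6 multiplication table
print(m)

# %o% is base R's operator form of outer()
stopifnot(identical(m, 1:6 %o% 1:6))
```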

In general, for two numeric vectors x and y, the rank-1 matrix operation (the outer product, whose [i, j] entry is x[i] * y[j]) can be computed as

outer(x, y)
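A minimal sketch (with small made-up vectors) that rebuilds the outer product directly from its definition and confirms the result has rank 1:

```r
x <- c(1, 2, 3)
y <- c(4, 5)
m <- outer(x, y)  # 3 x 2 matrix

# Rebuild the same matrix from the definition m[i, j] = x[i] * y[j]
ref <- matrix(0, nrow = length(x), ncol = length(y))
for (i in seq_along(x)) {
  for (j in seq_along(y)) {
    ref[i, j] <- x[i] * y[j]
  }
}
stopifnot(isTRUE(all.equal(m, ref)))

# Every column is a multiple of x, so the result has rank 1
qr(m)$rank
```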

If you want to use a genuine matrix multiplication routine instead, use tcrossprod, which computes x %*% t(y):

tcrossprod(x, y)
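A short sketch (random inputs, seeded for reproducibility) showing that tcrossprod treats plain vectors as one-column matrices and returns the same matrix as outer:

```r
set.seed(1)
x <- runif(4)
y <- runif(3)

a <- outer(x, y)       # elementwise products x[i] * y[j]
b <- tcrossprod(x, y)  # x %*% t(y), with x and y treated as one-column matrices

stopifnot(identical(dim(b), c(4L, 3L)))
stopifnot(isTRUE(all.equal(a, b)))
```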

If x or y is a matrix (that is, it has a dim attribute), use as.numeric to convert it to a plain vector first.
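To illustrate why the conversion matters (a small sketch with a made-up 2 x 2 matrix), outer keeps the dimensions of its arguments, so a matrix input produces a higher-dimensional array rather than a rank-1 matrix:

```r
xm <- matrix(1:4, nrow = 2)  # a 2 x 2 matrix: it carries a dim attribute
y <- 1:3

# outer() keeps the dims of its arguments, so this is a 2 x 2 x 3 array
dim(outer(xm, y))

# Dropping the dim attribute first gives the intended 4 x 3 rank-1 matrix
m <- outer(as.numeric(xm), y)
dim(m)
```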

Using the general matrix multiplication operator %*% for this is not recommended. If you do use it, make sure the dimensions are conformable: x must be a one-column matrix and y a one-row matrix, so that x %*% y gives the full outer product.
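The shape requirement matters because, with two plain vectors of the same length, %*% silently computes the inner product instead. A small sketch with made-up values:

```r
x <- c(1, 2, 3)
y <- c(4, 5, 6)

# Two plain vectors of equal length: %*% returns the inner product as a 1 x 1 matrix
x %*% y                     # 1*4 + 2*5 + 3*6 = 32

# Conformable shapes give the outer product instead
xx <- matrix(x, ncol = 1)   # 3 x 1
yy <- matrix(y, nrow = 1)   # 1 x 3
xx %*% yy                   # 3 x 3, same values as outer(x, y)
```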

Can you say anything about efficiency?

The rank-1 matrix operation is known to be memory-bound, so the benchmarks below call gc() after every replicate to make R release heap memory (otherwise your system may stall):

x <- runif(500)
y <- runif(500)
xx <- matrix(x, ncol = 1)
yy <- matrix(y, nrow = 1)

system.time(replicate(200, {outer(x, y); gc();}))
#  user  system elapsed
# 4.484   0.324   4.837

system.time(replicate(200, {tcrossprod(x, y); gc();}))
#  user  system elapsed
# 4.320   0.324   4.653

system.time(replicate(200, {xx %*% yy; gc();}))
#  user  system elapsed
# 4.372   0.324   4.708

In terms of performance, all three are very similar.
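A rough back-of-the-envelope sketch of why the rank-1 operation is memory-bound (my own illustration, assuming 8-byte doubles): for n = 500 the inputs are tiny, but the result has n^2 entries and each entry needs only one multiplication, so the cost is dominated by writing memory rather than by arithmetic.

```r
n <- 500
x <- runif(n)
y <- runif(n)
m <- outer(x, y)

input_bytes  <- 2 * n * 8     # two length-n double vectors
output_bytes <- n^2 * 8       # n x n double result
mults        <- n^2           # one multiplication per output entry

c(input_bytes = input_bytes, output_bytes = output_bytes)
mults / output_bytes          # 0.125 multiplications per byte written

# The actual object is slightly larger than n^2 * 8 bytes because of the header and dim attribute
object.size(m)
```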

Follow-up

When I came back, I saw another answer with a different benchmark. The thing is, the result depends on the problem size. With a small example, function interpretation and calling overhead dominate for all three functions, so the benchmark cannot isolate the actual computation. If you run

x <- y <- runif(500)

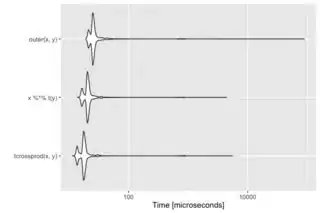

microbenchmark(tcrossprod(x,y), x %*% t(y), outer(x,y), times = 200)

you will see roughly identical performance again.

# Unit: milliseconds
#              expr     min      lq     mean  median      uq      max neval cld
#  tcrossprod(x, y) 2.09644 2.42466 3.402483 2.60424 3.94238 35.52176   200   a
#        x %*% t(y) 2.22520 2.55678 3.707261 2.66722 4.05046 37.11660   200   a
#       outer(x, y) 2.08496 2.55424 3.695660 2.69512 4.08938 35.41044   200   a
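To see the problem-size effect without extra packages, here is a rough base-R sketch (the helper time_per_call is hypothetical, and system.time has only millisecond resolution, so the small case uses many more repetitions). At n = 5 the per-call time mostly reflects call overhead, while at n = 500 it reflects the n^2 work; actual timings will vary with your machine and BLAS.

```r
# Hypothetical helper: average elapsed seconds per call of f(x, y)
time_per_call <- function(f, n, reps) {
  x <- runif(n)
  y <- runif(n)
  elapsed <- system.time(for (r in seq_len(reps)) f(x, y))[["elapsed"]]
  elapsed / reps
}

for (n in c(5, 500)) {
  reps <- if (n < 50) 20000 else 100
  t_outer <- time_per_call(outer, n, reps)
  t_tcp   <- time_per_call(tcrossprod, n, reps)
  t_mm    <- time_per_call(function(x, y) x %*% t(y), n, reps)
  cat(sprintf("n = %3d: outer %.2e, tcrossprod %.2e, %%*%% %.2e (s/call)\n",
              n, t_outer, t_tcp, t_mm))
}
```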