I am trying to perform a linear regression on experimental data consisting of replicate measurements of the same condition (for several conditions) to check the reliability of the experimental data. For each condition I have ~5k-10k observations, stored in a data frame `df`:

```
[1]     cond1 repA     cond1 repB     cond2 repA     cond2 repB ...
[2]     4.158660e+06   4454400.703    ...
[3]     1.458585e+06   4454400.703    ...
[4]     NA             887776.392     ...
...
[5024]  9571785.382    9.679092e+06   ...
```
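Since the real measurements are not included here, the sketch below builds a stand-in `df` with the same layout so the plotting code can be run by others. All values are simulated (hypothetical, not the actual data), and the layout is an assumption based on the print above: 7 conditions, each with rep A and rep B in adjacent columns, positive values, and some `NA`s.

```r
# Hypothetical stand-in for df: simulated values only, not the real measurements.
# Assumed layout: 7 conditions x 2 replicates = 14 columns, one row per observation.
set.seed(1)
n_obs  <- 5000
n_cond <- 7

# shared "true" level per observation and condition, on the log10 scale
truth <- matrix(rnorm(n_obs * n_cond, mean = 6, sd = 0.5), nrow = n_obs)

m <- matrix(NA_real_, nrow = n_obs, ncol = 2 * n_cond)
for (k in seq_len(n_cond)) {
  # each replicate = true level + independent measurement noise (log10 scale)
  m[, 2 * k - 1] <- 10^(truth[, k] + rnorm(n_obs, sd = 0.2))  # rep A
  m[, 2 * k]     <- 10^(truth[, k] + rnorm(n_obs, sd = 0.2))  # rep B
}
m[sample(length(m), 2000)] <- NA  # some missing values, as in the real data

df <- as.data.frame(m)
names(df) <- paste0("cond", rep(seq_len(n_cond), each = 2), c(".repA", ".repB"))
```

With this `df` the loop below should run unchanged, because it only relies on column positions.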

I use the following code to draw, for each condition, a scatterplot of log10(rep B) against log10(rep A), the `lm` fit and the adjusted R² value (stored in `rdata`):

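```r
# one adjusted R^2 per condition (columns 1-14 = 7 pairs of replicates)
rdata <- matrix(NA_real_, nrow = 1, ncol = 7)

for (i in seq(1, 13, 2)) {
  # rep A and rep B of one condition; drop rows with NA in either column
  vec <- na.exclude(cbind(df[, i], df[, i + 1]))
  x <- log10(vec[, 1])
  y <- log10(vec[, 2])

  plot(x, y, xlab = "rep A", ylab = "rep B", col = "#00000033")
  fit <- lm(y ~ x)  # ordinary least squares of rep B on rep A
  abline(fit, col = "red")

  # store the numeric value; format it only for the legend
  rdata[1, (i + 1) / 2] <- summary(fit)$adj.r.squared
  legend("topleft", bty = "n",
         legend = paste("R2 is", format(rdata[1, (i + 1) / 2], digits = 4)))
}
```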
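To check the R² values independently of the plots, they can also be collected with `vapply`. This is a minimal sketch assuming, as the loop does, that rep A and rep B of each condition sit in adjacent columns (1-2, 3-4, ...); `adj_r2_per_condition` is a hypothetical helper name, not part of any package.

```r
# Hypothetical helper: adjusted R^2 of log10(repB) ~ log10(repA) for each
# condition, assuming replicate pairs occupy adjacent columns of df.
adj_r2_per_condition <- function(df) {
  first_cols <- seq(1, ncol(df) - 1, by = 2)
  vapply(first_cols, function(i) {
    vec <- na.omit(cbind(df[, i], df[, i + 1]))  # drop incomplete rows
    x <- log10(vec[, 1])
    y <- log10(vec[, 2])
    summary(lm(y ~ x))$adj.r.squared
  }, numeric(1))
}
```

Calling `adj_r2_per_condition(df)` should give the same values the loop stores in `rdata`. With a single predictor, the plain R² equals the squared Pearson correlation of the two columns, so it does not depend on which replicate is put on which axis, even though the fitted line does.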

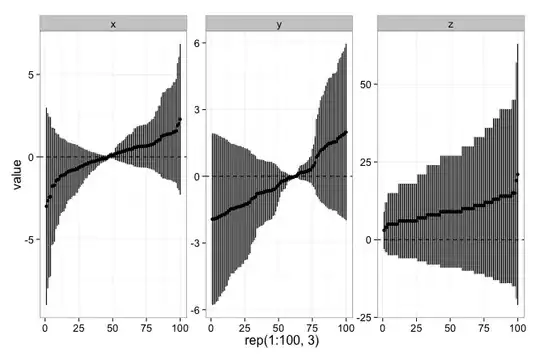

However, the lm seems to be shifted so that it does not fit the trend I see in the experimental data:

This happens consistently for every condition. I have tried, without success, to find an explanation by looking at the source code and browsing different forums and posts (this or here).
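One possibility worth ruling out (a hypothesis, not a confirmed diagnosis) is that the line is not shifted but flattened: `lm(y ~ x)` minimises only vertical distances, so measurement noise in rep A pulls the slope towards zero (regression dilution), while the eye tends to follow the major axis of the point cloud. The sketch below uses simulated log10 replicates with equal noise in both columns (all values and the helper name `compare_slopes` are hypothetical). It compares the slope of B on A, the slope implied by regressing A on B, and the major-axis slope taken from the first principal component.

```r
# Hypothetical helper: three slope estimates for a replicate-vs-replicate plot
compare_slopes <- function(x, y) {
  ols_y_on_x <- unname(coef(lm(y ~ x))[2])      # what abline(lm(y ~ x)) draws
  ols_x_on_y <- 1 / unname(coef(lm(x ~ y))[2])  # A regressed on B, drawn in the same axes
  pc1 <- prcomp(cbind(x, y))$rotation[, 1]      # direction of largest spread
  major_axis <- unname(pc1[2] / pc1[1])
  c(ols_y_on_x = ols_y_on_x, major_axis = major_axis, ols_x_on_y = ols_x_on_y)
}

# Simulated log10 replicates: same true level plus independent noise in each
set.seed(3)
truth <- rnorm(5000, mean = 6, sd = 0.5)
x <- truth + rnorm(5000, sd = 0.3)  # "rep A"
y <- truth + rnorm(5000, sd = 0.3)  # "rep B"

slopes <- compare_slopes(x, y)
print(round(slopes, 3))

plot(x, y, xlab = "rep A (log10)", ylab = "rep B (log10)", col = "#00000033")
abline(lm(y ~ x), col = "red")                                # OLS of B on A
abline(a = mean(y) - slopes["major_axis"] * mean(x),
       b = slopes["major_axis"], col = "blue")                # major axis
```

In this simulation the `lm` slope comes out clearly below 1 while the major axis stays close to 1, so the red line crosses the cloud at a shallower angle and looks offset at both ends. If the real data show the same pattern, an errors-in-variables fit (major axis or Deming regression) may be closer to what the eye expects.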