Yes, there is a `coef_` attribute for the SVM classifier, but it only works for an SVM with a linear kernel. For other kernels it is not available, because the kernel method maps the data into another space that is not directly related to the input space, so there is no single weight per input feature; check the explanation.
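To make the kernel restriction concrete, here is a minimal sketch on a made-up toy dataset (`X_toy` and `y_toy` are assumptions for illustration, not data from the question). With a linear kernel, `coef_` is available; with an RBF kernel, accessing it raises `AttributeError`:

```python
import numpy as np
from sklearn import svm

# Toy data, illustrative only: two features, binary labels
X_toy = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 0.5],
                  [3.0, 3.0], [4.0, 2.5], [5.0, 4.0]])
y_toy = np.array([0, 0, 0, 1, 1, 1])

# Linear kernel: coef_ holds one row of weights for the binary problem
linear_clf = svm.SVC(kernel='linear').fit(X_toy, y_toy)
linear_shape = linear_clf.coef_.shape

# RBF kernel: coef_ is not defined, so accessing it raises AttributeError
rbf_clf = svm.SVC(kernel='rbf').fit(X_toy, y_toy)
try:
    rbf_clf.coef_
    rbf_has_coef = True
except AttributeError:
    rbf_has_coef = False

print(linear_shape, rbf_has_coef)
```

For the linear-kernel case, the coefficients can then be plotted as feature importances: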

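    from matplotlib import pyplot as plt
    from sklearn import svm

    def f_importances(coef, names):
        # Sort the features by coefficient value and draw a horizontal bar chart
        imp, names = zip(*sorted(zip(coef, names)))
        plt.barh(range(len(names)), imp, align='center')
        plt.yticks(range(len(names)), names)
        plt.show()

    features_names = ['input1', 'input2']

    # X, Y are your training data and labels
    clf = svm.SVC(kernel='linear')  # named clf so the svm module is not shadowed
    clf.fit(X, Y)

    # coef_ is 2-D; for a binary problem it has shape (1, n_features), so take the first row
    f_importances(clf.coef_[0], features_names)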

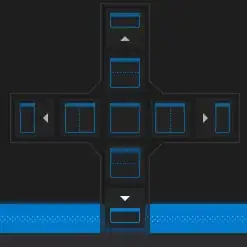

And the output of the function looks like this: