I have done some research on the subject, but I think my question is significantly different from what has been asked before.

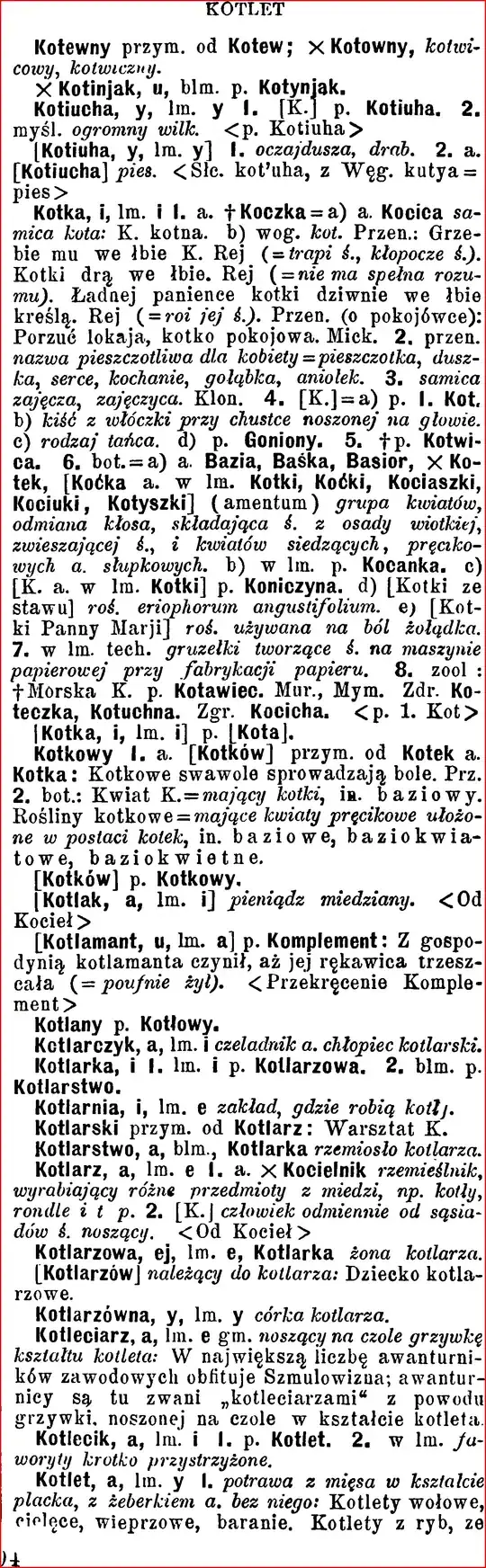

My PhD thesis deals with OCR-ing an old dictionary and automatically converting the result into an XML-like database. This part I have figured out. However, I'd like to enrich the final result by displaying, for each entry/headword, the fragment of the scan it comes from. As the dictionary is almost 9000 pages long, doing this manually is out of the question.

This is how a random page looks: http://i.imgur.com/X2mPZr0.png

As each entry always corresponds to exactly one paragraph, I would like to find a way to split every page image into the rectangles that contain text (no OCR needed) and save each one as a separate file, like this (but without drawing the rectangles): https://i.stack.imgur.com/21ggN.png
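A common first step for this kind of split is a horizontal projection profile: count the dark pixels in every row, and treat each run of rows that contains ink as one text line. The sketch below assumes the scan has already been binarized into a 2D list of 0/1 values (1 = ink); `find_text_lines`, `min_ink` and `min_height` are hypothetical names chosen for illustration, and their defaults would need tuning on the real scans.

```python
def find_text_lines(ink, min_ink=1, min_height=2):
    """Return (top, bottom) row ranges of text lines; bottom is exclusive.

    ink: 2D list of 0/1 values, 1 meaning a dark (ink) pixel.
    A row counts as text if it has at least min_ink dark pixels (min_ink >= 1).
    Runs of text rows shorter than min_height are dropped as specks or noise.
    """
    # Horizontal projection profile: number of ink pixels in each row.
    profile = [sum(row) for row in ink]
    lines = []
    start = None
    # The trailing 0 acts as a sentinel so a line touching the bottom edge is closed.
    for y, count in enumerate(profile + [0]):
        if count >= min_ink and start is None:
            start = y  # first row of a new text line
        elif count < min_ink and start is not None:
            if y - start >= min_height:
                lines.append((start, y))
            start = None
    return lines


page = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 1, 0, 0],  # a one-row speck, ignored with min_height=2
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [1, 0, 0, 1],
]
print(find_text_lines(page))  # [(1, 3), (6, 8)]
```

A grayscale scan loaded with Pillow (`Image.open(path).convert("L")`) can be turned into such a grid by marking pixels darker than some threshold as ink; the threshold (for example 128) is an assumption to tune. On full-size pages, NumPy's `ink.sum(axis=1)` computes the same profile much faster than the pure-Python `sum` used here.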

The good thing is that the scans I have are identical in shape and size and similar in terms of margins and text alignment. Every paragraph also always has an indentation.
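Because every paragraph starts with an indented line, detected text lines can be grouped into paragraphs by comparing each line's left edge with the text margin: an indented line opens a new paragraph, and any other line extends the current one. A paragraph that is only one line long is simply an indented line followed by another indented line, so it needs no special case. This is a sketch under several assumptions: a single column of text (a two-column page would be processed one column at a time), lines given in reading order as hypothetical `(top, bottom, left)` tuples where `left` is the x-coordinate of the first ink pixel in that line, and an `indent_threshold` in pixels that must be measured on the real scans.

```python
def group_into_paragraphs(lines, indent_threshold=10, margin=None):
    """Group text lines into paragraphs using first-line indentation.

    lines: list of (top, bottom, left) tuples in reading order.
    margin: x-coordinate of the unindented text edge. Since the scans share
    their margins, a fixed value measured once can be passed; if omitted,
    the smallest left edge on the page is used instead.
    Returns one (top, bottom) row range per paragraph.
    """
    if not lines:
        return []
    if margin is None:
        margin = min(left for _, _, left in lines)
    paragraphs = []
    for top, bottom, left in lines:
        # The first line on a page always opens a block, even if it actually
        # continues a paragraph from the previous page.
        if not paragraphs or left - margin >= indent_threshold:
            paragraphs.append([top, bottom])
        else:
            paragraphs[-1][1] = bottom  # extend the current paragraph downward
    return [tuple(p) for p in paragraphs]


lines = [
    (10, 20, 50), (22, 32, 30), (34, 44, 30),  # three-line paragraph
    (46, 56, 50),                              # one-line paragraph
    (58, 68, 50), (70, 80, 30),                # two-line paragraph
]
print(group_into_paragraphs(lines))  # [(10, 44), (46, 56), (58, 80)]
```

Passing a fixed `margin` matters on a page where every line happens to be indented (only one-line paragraphs): with the default, the smallest left edge would itself be the indented position and all lines would be merged into one block.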

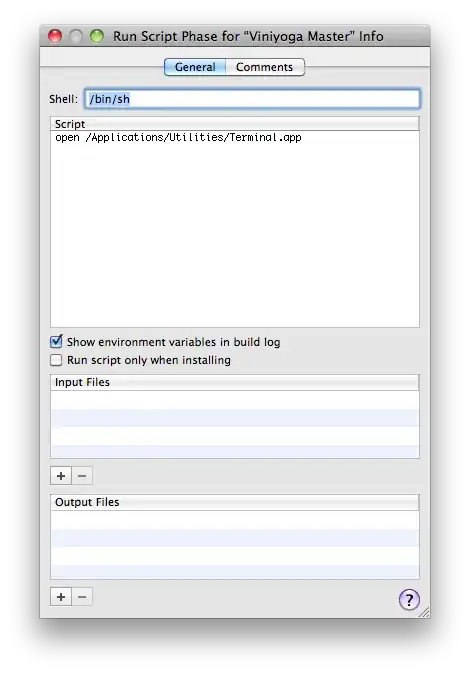

The bad things are that I am mostly a linguist and not much of a programmer (most of my experience is with Ruby, XML and CSS), and that some paragraphs are only one line long.

I am aware of some ways to do a similar thing:

- Algorithm to detect presence of text on image

- http://www.danvk.org/2015/01/07/finding-blocks-of-text-in-an-image-using-python-opencv-and-numpy.html

- http://answers.opencv.org/question/27411/use-opencv-to-detect-text-blocks-send-to-tesseract-ios/

- https://github.com/kanaadp/iReader

but they require a significant amount of time for me to learn (especially since I have zero knowledge of Python), and I don't know whether they allow for paragraph detection and not just text detection.
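Whichever tool ends up detecting the paragraphs, writing each one out as a separate file only needs a crop box per paragraph. The sketch below turns `(top, bottom)` row ranges into `(left, upper, right, lower)` boxes, the 4-tuple order that Pillow's `Image.crop` expects, padding each box vertically and clamping it to the page. The function name `crop_boxes`, the full-width default and the 5-pixel `pad` are illustrative assumptions, not values taken from the scans.

```python
def crop_boxes(paragraphs, page_width, page_height, left=0, right=None, pad=5):
    """Convert (top, bottom) paragraph ranges into (left, upper, right, lower) boxes.

    By default each box spans the full page width; pass left/right to crop
    to the text column instead. Boxes are padded vertically by pad pixels
    and clamped so they never extend past the page edges.
    """
    if right is None:
        right = page_width
    boxes = []
    for top, bottom in paragraphs:
        upper = max(0, top - pad)
        lower = min(page_height, bottom + pad)
        boxes.append((left, upper, right, lower))
    return boxes


print(crop_boxes([(10, 44), (46, 56)], page_width=200, page_height=60))
# [(0, 5, 200, 49), (0, 41, 200, 60)]
```

With Pillow, each box could then be saved with something like `page_image.crop(box).save(f"entry_{i}.png")`, where the file naming scheme is again hypothetical and would ideally be tied to the entry IDs in the XML.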

Any input or suggestions on the matter would be greatly appreciated, especially newbie-friendly ones.