This is a typical use case for FEM/FVM equation systems, so is perhaps of broader interest. From a triangular mesh à la

I would like to create a scipy.sparse.csr_matrix. The matrix rows/columns represent values at the nodes of the mesh. The matrix has entries on the main diagonal and wherever two nodes are connected by an edge.

Here's an MWE that first builds a node->edge->cells relationship and then builds the matrix:

import numpy

import meshzoo

from scipy import sparse

nx = 1600

ny = 1000

verts, cells = meshzoo.rectangle(0.0, 1.61, 0.0, 1.0, nx, ny)

n = len(verts)

nds = cells.T

nodes_edge_cells = numpy.stack([nds[[1, 2]], nds[[2, 0]],nds[[0, 1]]], axis=1)

# assign values to each edge (per cell)

alpha = numpy.random.rand(3, len(cells))

vals = numpy.array([

[alpha**2, -alpha],

[-alpha, alpha**2],

])

# Build I, J, V entries for COO matrix

I = []

J = []

V = []

#

V.append(vals[0][0])

V.append(vals[0][1])

V.append(vals[1][0])

V.append(vals[1][1])

#

I.append(nodes_edge_cells[0])

I.append(nodes_edge_cells[0])

I.append(nodes_edge_cells[1])

I.append(nodes_edge_cells[1])

#

J.append(nodes_edge_cells[0])

J.append(nodes_edge_cells[1])

J.append(nodes_edge_cells[0])

J.append(nodes_edge_cells[1])

# Create suitable data for coo_matrix

I = numpy.concatenate(I).flat

J = numpy.concatenate(J).flat

V = numpy.concatenate(V).flat

matrix = sparse.coo_matrix((V, (I, J)), shape=(n, n))

matrix = matrix.tocsr()

With

python -m cProfile -o profile.prof main.py

snakeviz profile.prof

one can create and view a profile of the above:

The method tocsr() takes the lion share of the runtime here, but this is also true when building alpha is more complex. Consequently, I'm looking for ways to speed this up.

What I've already found:

Due to the structure of the data, the values on the diagonal of the matrix can be summed up in advance, i.e.,

V.append(vals[0, 0, 0] + vals[1, 1, 2]) I.append(nodes_edge_cells[0, 0]) # == nodes_edge_cells[1, 2] J.append(nodes_edge_cells[0, 0]) # == nodes_edge_cells[1, 2]This makes

I,J,Vshorter and thus speeds uptocsr.Right now, edges are "per cell". I could identify equal edges with each other using

numpy.unique, effectively saving about half ofI,J,V. However, I found that this too takes some time. (Not surprising.)

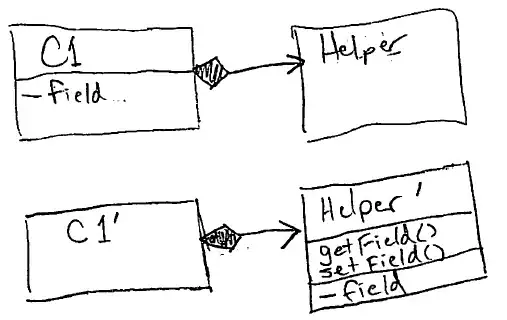

One other thought that I had was that that I could replace the diagonal V, I, J by a simple numpy.add.at if there was a csr_matrix-like data structure where the main diagonal is kept separately. I know that this exists in some other software packages, but couldn't find it in scipy. Correct?

Perhaps there's a sensible way to construct CSR directly?