I recently watched the OSCON Austin 2016 talk on "Detecting outliers and anomalies in realtime at Datadog" by Homin Lee and found proper motivation to ask the following question.

Basically what I am trying to do is find anomalies in graphs that do not necessarily start at the same time (t) but are quite similar (in family) in shape.

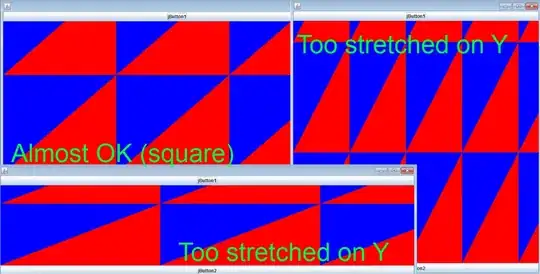

Separated:

Combined:

As depicted in my (rough) concept drawing, given two similar frequency (f) functions of time, I want to line them up on top of each other on the basis of where each of their inflection points are. One of the frequency graphs starts at t=-2 and the other t=5. They both have inflection points around t_1=8.5 and t_2=1.5. This is where I want to line them up. Essentially, the image drawn should be the final output from my algorithm and listing any anomalies that trigger like say for the green curve, if f=0.2 at t_1=12, then that should be an anomaly because it is not in family. And as Homin Lee says it, the graph would not be "within the trained envelope."

Now I want to lay out what my particular approach would be and see if you think the same or have a better approach to develop this algorithm. Before we choose which anomaly detection algorithm to use, we need to discuss how to prepare this data. We will continue to use frequency-versus-time data for example purposes. To prepare the data, we need to (1) find the inflection points, (2) scale the data so that the data all have the same time domain duration (i.e., 12-5=7=7=5-(-2)), and (3) find a way to match (line up) the times of each graph (i.e., 5 to -2, 6 to -1, and so on).

- Finding the inflection points would not be too hard as all we have to do is detect where changes in concavity occur on each of the graphs. In our example that would be at t_1=7.5 and t_2=1.5. This algorithm written here seems to have potential.

- In order to scale the data, we would want to put the inflection point towards the middle of the graph so |t_min-t_I|=|t_max-t_I| where t_I is when the inflection point occurs. The frequency would be scaled to some decently big range. I am assuming that this scaling would be something like this approach.

- Finding a way to match (line up) the times of each graph is going to be the most difficult objective out of the three and I am not exactly sure how to do this however I will make my proposal. I was thinking maybe we could use what was discussed here or define discrete Fourier Transforms for the data set in order to determine a common data domain for both graphs. This section is very much in the unknown and I would like to open this up for suggestions.

Once the data is prepared, now it is onto the algorithm. For (robust) anomaly detection, I was thinking about using one-class/multi-class Support Vector Machines (SVM) because we are going to be training a huge set of graphs to form the "envelope." This section is also open for suggestions.

As a moonshot thought, I would like to eventually be able to put all of the graphs onto one huge plot and point out the anomalies from there. The problem is that there would be so many different time intervals. So one solution would be to create a singular universal (u) time interval so that you don't have to deal with the differing intervals (e.g., t_1=5,9 would become t_u=1,5 and same goes for t_2).

So to recap, I am looking to analyze similar graphs on different time intervals for anomalies. Find critical/key points (not necessarily inflection points), scale, plot graphs, and check for anomalies.

I have rambled on for long enough but if something does not make sense and you would like me to clarify or elaborate, let me know and I will. Feel free to make suggestions, submit to me some code, and/or any other ideas or approaches that I did not necessary think of before.

Thank you.

P.S., sorry about the drawings; I tried my best. :P