TL;DR

The discrepancy you are observing between RDTSC and REFTSC is due to TurboBoost P-state transitions. During these transitions, most of the core, including the fixed-function performance counter REF_TSC, is halted for approximately 20000-21000 cycles (8.5us), but rdtsc continues at its invariant frequency. rdtsc is probably in an isolated power and clock domain because it is so important and because of its documented wallclock-like behaviour.

The RDTSC-REFTSC Discrepancy

The discrepancy manifests itself as a tendency for RDTSC to overcount REFTSC. The longer the program runs, the more positive the difference RDTSC-REFTSC tends to be. Over very long stretches it can mount as high as 1%-2% or even higher.

As you have already observed, the overcounting disappears when TurboBoost is disabled, which can be done as follows when using intel_pstate:

echo 1 > /sys/devices/system/cpu/intel_pstate/no_turbo

But that does not tell us for sure that TurboBoost itself is at fault for the discrepancy; it could be that the higher P-states enabled by TurboBoost eat up the available headroom, causing thermal throttling and halts.

Possible Throttling?

TurboBoost is a dynamic frequency and voltage scaling solution to opportunistically take advantage of headroom in the operating envelope (thermal or electrical). When possible, TurboBoost will then scale up the core frequency and voltage of the processor beyond their nominal value, thus improving performance at the expense of higher power consumption.

The higher power consumption of course raises the core temperature. Eventually, some sort of limit will be hit, and TurboBoost will have to crank down performance.

TM1 Thermal Throttling?

I began by investigating whether the Thermal Control Circuitry (TCC) for Thermal Monitor 1 (TM1) or 2 (TM2) was causing thermal throttling. TM1 reduces power consumption by inserting TM stop-clock cycles, and these are one of the conditions documented to lead to a halt of REFTSC. TM2, on the other hand, does not gate the clock; it only scales the frequency.

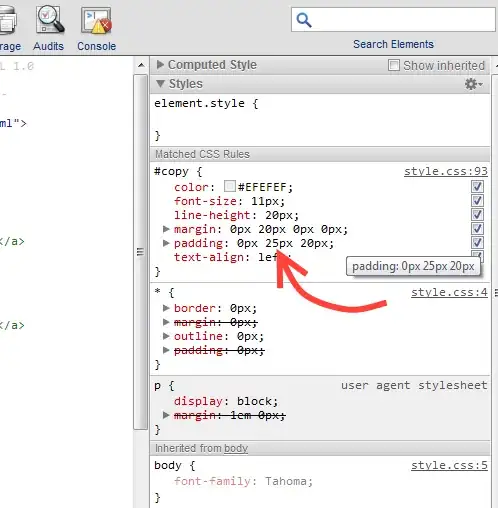

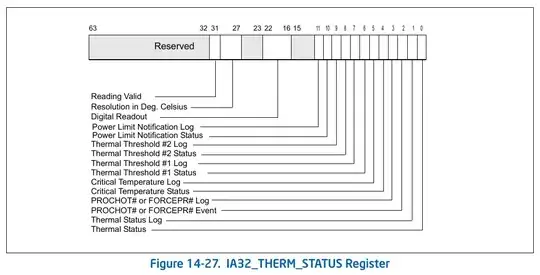

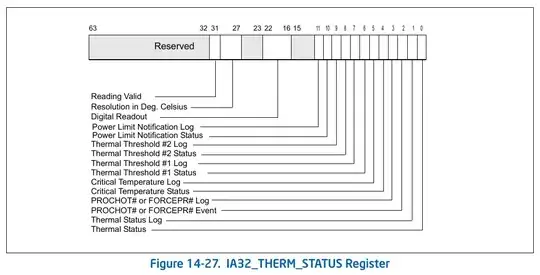

I modified libpfc() to enable me to read select MSRs, specifically the IA32_PACKAGE_THERM_STATUS and IA32_THERM_STATUS MSRs. Both contain a read-only Status and a read-write, hardware-sticky Log flag for various thermal conditions:

(The IA32_PACKAGE_THERM_STATUS register is substantially the same)

While some of these bits were on occasion set (especially when blocking laptop air vents!), they did not seem to correlate with RDTSC overcounting, which would reliably occur regardless of thermal status.

Hardware Duty Cycling? C-State Residency?

Digging elsewhere in the SDM for stop-clock-like hardware I happened upon HDC (Hardware Duty Cycle), a mechanism by which the OS can manually request the CPU to operate only a fixed proportion of the time; HDC hardware implements this by running the processor for 1-15 clock cycles per 16-clock period, and force-idling it for the remaining 15-1 clock cycles of that period.

HDC offers very useful registers, in particular the MSRs:

- IA32_THREAD_STALL: Counts the number of cycles stalled due to forced idling on this logical processor.
- MSR_CORE_HDC_RESIDENCY: Same as above but for the physical processor, counts cycles when one or more logical processors of this core are force-idling.
- MSR_PKG_HDC_SHALLOW_RESIDENCY: Counts cycles that the package was in C2 state and at least one logical processor was force-idling.
- MSR_PKG_HDC_DEEP_RESIDENCY: Counts cycles that the package was in a deeper (which precisely is configurable) C-state and at least one logical processor was force-idling.

For further details refer to the Intel SDM Volume 3, Chapter 14, §14.5.1 Hardware Duty Cycling Programming Interface.

But my i7-4700MQ 2.4 GHz CPU doesn't support HDC, and so that was that for HDC.

Other Sources of Throttling?

Digging some more still in the Intel SDM I found a very, very juicy MSR: MSR_CORE_PERF_LIMIT_REASONS. This register reports a large number of very useful Status and sticky Log bits:

690H MSR_CORE_PERF_LIMIT_REASONS - Package - Indicator of Frequency Clipping in Processor Cores

- Bit 0: PROCHOT Status
- Bit 1: Thermal Status
- Bit 4: Graphics Driver Status. When set, frequency is reduced below the operating system request due to Processor Graphics driver override.
- Bit 5: Autonomous Utilization-Based Frequency Control Status. When set, frequency is reduced below the operating system request because the processor has detected that utilization is low.
- Bit 6: Voltage Regulator Thermal Alert Status. When set, frequency is reduced below the operating system request due to a thermal alert from the Voltage Regulator.
- Bit 8: Electrical Design Point Status. When set, frequency is reduced below the operating system request due to electrical design point constraints (e.g. maximum electrical current consumption).
- Bit 9: Core Power Limiting Status. When set, frequency is reduced below the operating system request due to domain-level power limiting.
- Bit 10: Package-Level Power Limiting PL1 Status. When set, frequency is reduced below the operating system request due to package-level power limiting PL1.
- Bit 11: Package-Level Power Limiting PL2 Status. When set, frequency is reduced below the operating system request due to package-level power limiting PL2.
- Bit 12: Max Turbo Limit Status. When set, frequency is reduced below the operating system request due to multi-core turbo limits.
- Bit 13: Turbo Transition Attenuation Status. When set, frequency is reduced below the operating system request due to Turbo transition attenuation. This prevents performance degradation due to frequent operating ratio changes.
- Bit 16: PROCHOT Log
- Bit 17: Thermal Log
- Bit 20: Graphics Driver Log
- Bit 21: Autonomous Utilization-Based Frequency Control Log
- Bit 22: Voltage Regulator Thermal Alert Log
- Bit 24: Electrical Design Point Log
- Bit 25: Core Power Limiting Log
- Bit 26: Package-Level Power Limiting PL1 Log
- Bit 27: Package-Level Power Limiting PL2 Log
- Bit 28: Max Turbo Limit Log
- Bit 29: Turbo Transition Attenuation Log

pfc.ko now supports this MSR, and a demo prints which of these log bits is active. The pfc.ko driver clears the sticky bits on every read.
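To make the mapping from a raw register value to these flags concrete, here is a minimal Python sketch that decodes a value of MSR_CORE_PERF_LIMIT_REASONS. The helper name `decode_perf_limit_reasons` and the lookup table are hypothetical and are not part of libpfc or pfc.ko; the bit positions are the ones listed above, with each Log bit 16 positions above its Status bit. The value 0x18001000 is one that my CPU reports.

```python
# Bit positions of the Status flags, as listed above.
# Each sticky Log flag sits 16 bits above its Status flag.
STATUS_BITS = {
    0: "PROCHOT",
    1: "Thermal",
    4: "Graphics Driver",
    5: "Autonomous Utilization-Based Frequency Control",
    6: "Voltage Regulator Thermal Alert",
    8: "Electrical Design Point",
    9: "Core Power Limiting",
    10: "Package-Level Power Limiting PL1",
    11: "Package-Level Power Limiting PL2",
    12: "Max Turbo Limit",
    13: "Turbo Transition Attenuation",
}


def decode_perf_limit_reasons(value):
    """Return (status_names, log_names) for a raw MSR value (hypothetical helper)."""
    status = [name for bit, name in STATUS_BITS.items() if (value >> bit) & 1]
    log = [name for bit, name in STATUS_BITS.items() if (value >> (bit + 16)) & 1]
    return status, log


if __name__ == "__main__":
    status, log = decode_perf_limit_reasons(0x18001000)
    print("Status:", status)
    print("Log:   ", log)
```

For 0x18001000 the only Status bit set is Max Turbo Limit, and the Log bits set are Package-Level PL2 and Max Turbo Limit.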

I reran your experiments while printing the bits, and my CPU reports under very heavy load (all 4 cores/8 threads active) several limiting factors, including Electrical Design Point and Core Power Limiting. The Package-Level PL2 and Max Turbo Limit bits are always set on my CPU for reasons unknown to me. I also saw on occasion Turbo Transition Attenuation.

While none of these bits exactly correlated with the presence of the RDTSC-REFTSC discrepancy, the last bit gave me food for thought. The mere existence of Turbo Transition Attenuation implies that switching P-states has a substantial enough cost that it must be rate-limited with some hysteresis mechanism. When I could not find an MSR that counted these transitions, I decided to do the next best thing: use the magnitude of the RDTSC-REFTSC overcount to characterize the performance implications of a TurboBoost transition.

Experiment

The experimental setup is as follows. On my i7-4700MQ CPU, with a nominal speed of 2.4 GHz and a max Turbo speed of 3.4 GHz, I offline all cores except 0 (the boot processor) and 3 (a convenient victim core not numbered 0 and not a logical sibling of 0). I then ask the intel_pstate driver to give us a package performance of no less than 98% and no higher than 100%; this constrains the processor to oscillate between the second-highest and highest P-states (3.3 GHz and 3.4 GHz). I do this as follows:

echo 0 > /sys/devices/system/cpu/cpu1/online

echo 0 > /sys/devices/system/cpu/cpu2/online

echo 0 > /sys/devices/system/cpu/cpu4/online

echo 0 > /sys/devices/system/cpu/cpu5/online

echo 0 > /sys/devices/system/cpu/cpu6/online

echo 0 > /sys/devices/system/cpu/cpu7/online

echo 98 > /sys/devices/system/cpu/intel_pstate/min_perf_pct

echo 100 > /sys/devices/system/cpu/intel_pstate/max_perf_pct

I ran the demo application for 10000 samples at

1000, 1500, 2500, 4000, 6300,

10000, 15000, 25000, 40000, 63000,

100000, 150000, 250000, 400000, 630000,

1000000, 1500000, 2500000, 4000000, 6300000,

10000000, 15000000, 25000000, 40000000, 63000000

nanoseconds per add_calibration() executed at nominal CPU frequency (multiply the numbers above by 2.4 to get the actual argument to add_calibration()).
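The sweep above is five mantissas (1, 1.5, 2.5, 4, 6.3) repeated over five decades. A small Python sketch that regenerates it and converts each duration to the add_calibration() argument follows; the function names are hypothetical, and the conversion assumes nothing more than the factor of 2.4 stated above.

```python
def sample_durations_ns():
    """Durations in nanoseconds: mantissas 1, 1.5, 2.5, 4, 6.3 over five decades."""
    mantissas_x10 = [10, 15, 25, 40, 63]  # scaled by 10 to stay in integers
    return [m * 10 ** (decade - 1) for decade in range(3, 8) for m in mantissas_x10]


def calibration_argument(nanos, nominal_ghz_x10=24):
    """Argument for a given duration at the nominal 2.4 GHz (24 = 2.4 * 10)."""
    return nanos * nominal_ghz_x10 // 10


if __name__ == "__main__":
    for nanos in sample_durations_ns():
        print(nanos, calibration_argument(nanos))
```

For example, the 250000 ns case corresponds to an argument of 600000.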

Results

This produces logs that look like this (case of 250000 nanos):

CPU 0, measured CLK_REF_TSC MHz : 2392.56

CPU 0, measured rdtsc MHz : 2392.46

CPU 0, measured add MHz : 3286.30

CPU 0, measured XREF_CLK time (s) : 0.00018200

CPU 0, measured delta time (s) : 0.00018258

CPU 0, measured tsc_delta time (s) : 0.00018200

CPU 0, ratio ref_tsc :ref_xclk : 24.00131868

CPU 0, ratio ref_core:ref_xclk : 33.00071429

CPU 0, ratio rdtsc :ref_xclk : 24.00032967

CPU 0, core CLK cycles in OS : 0

CPU 0, User-OS transitions : 0

CPU 0, rdtsc-reftsc overcount : -18

CPU 0, MSR_IA32_PACKAGE_THERM_STATUS : 000000008819080a

CPU 0, MSR_IA32_PACKAGE_THERM_INTERRUPT: 0000000000000003

CPU 0, MSR_CORE_PERF_LIMIT_REASONS : 0000000018001000

PROCHOT

Thermal

Graphics Driver

Autonomous Utilization-Based Frequency Control

Voltage Regulator Thermal Alert

Electrical Design Point (e.g. Current)

Core Power Limiting

Package-Level PL1 Power Limiting

* Package-Level PL2 Power Limiting

* Max Turbo Limit (Multi-Core Turbo)

Turbo Transition Attenuation

CPU 0, measured CLK_REF_TSC MHz : 2392.63

CPU 0, measured rdtsc MHz : 2392.62

CPU 0, measured add MHz : 3288.03

CPU 0, measured XREF_CLK time (s) : 0.00018192

CPU 0, measured delta time (s) : 0.00018248

CPU 0, measured tsc_delta time (s) : 0.00018192

CPU 0, ratio ref_tsc :ref_xclk : 24.00000000

CPU 0, ratio ref_core:ref_xclk : 32.99983509

CPU 0, ratio rdtsc :ref_xclk : 23.99989006

CPU 0, core CLK cycles in OS : 0

CPU 0, User-OS transitions : 0

CPU 0, rdtsc-reftsc overcount : -2

CPU 0, MSR_IA32_PACKAGE_THERM_STATUS : 000000008819080a

CPU 0, MSR_IA32_PACKAGE_THERM_INTERRUPT: 0000000000000003

CPU 0, MSR_CORE_PERF_LIMIT_REASONS : 0000000018001000

PROCHOT

Thermal

Graphics Driver

Autonomous Utilization-Based Frequency Control

Voltage Regulator Thermal Alert

Electrical Design Point (e.g. Current)

Core Power Limiting

Package-Level PL1 Power Limiting

* Package-Level PL2 Power Limiting

* Max Turbo Limit (Multi-Core Turbo)

Turbo Transition Attenuation

CPU 0, measured CLK_REF_TSC MHz : 2284.69

CPU 0, measured rdtsc MHz : 2392.63

CPU 0, measured add MHz : 3151.99

CPU 0, measured XREF_CLK time (s) : 0.00018121

CPU 0, measured delta time (s) : 0.00019036

CPU 0, measured tsc_delta time (s) : 0.00018977

CPU 0, ratio ref_tsc :ref_xclk : 24.00000000

CPU 0, ratio ref_core:ref_xclk : 33.38540919

CPU 0, ratio rdtsc :ref_xclk : 25.13393301

CPU 0, core CLK cycles in OS : 0

CPU 0, User-OS transitions : 0

CPU 0, rdtsc-reftsc overcount : 20548

CPU 0, MSR_IA32_PACKAGE_THERM_STATUS : 000000008819080a

CPU 0, MSR_IA32_PACKAGE_THERM_INTERRUPT: 0000000000000003

CPU 0, MSR_CORE_PERF_LIMIT_REASONS : 0000000018000000

PROCHOT

Thermal

Graphics Driver

Autonomous Utilization-Based Frequency Control

Voltage Regulator Thermal Alert

Electrical Design Point (e.g. Current)

Core Power Limiting

Package-Level PL1 Power Limiting

* Package-Level PL2 Power Limiting

* Max Turbo Limit (Multi-Core Turbo)

Turbo Transition Attenuation

CPU 0, measured CLK_REF_TSC MHz : 2392.46

CPU 0, measured rdtsc MHz : 2392.45

CPU 0, measured add MHz : 3287.80

CPU 0, measured XREF_CLK time (s) : 0.00018192

CPU 0, measured delta time (s) : 0.00018249

CPU 0, measured tsc_delta time (s) : 0.00018192

CPU 0, ratio ref_tsc :ref_xclk : 24.00000000

CPU 0, ratio ref_core:ref_xclk : 32.99978012

CPU 0, ratio rdtsc :ref_xclk : 23.99989006

CPU 0, core CLK cycles in OS : 0

CPU 0, User-OS transitions : 0

CPU 0, rdtsc-reftsc overcount : -2

CPU 0, MSR_IA32_PACKAGE_THERM_STATUS : 000000008819080a

CPU 0, MSR_IA32_PACKAGE_THERM_INTERRUPT: 0000000000000003

CPU 0, MSR_CORE_PERF_LIMIT_REASONS : 0000000018001000

PROCHOT

Thermal

Graphics Driver

Autonomous Utilization-Based Frequency Control

Voltage Regulator Thermal Alert

Electrical Design Point (e.g. Current)

Core Power Limiting

Package-Level PL1 Power Limiting

* Package-Level PL2 Power Limiting

* Max Turbo Limit (Multi-Core Turbo)

Turbo Transition Attenuation

I made several observations about the logs, but one stood out:

For nanos < ~250000, there is negligible RDTSC overcounting. For nanos > ~250000, one may reliably observe overcounting clock cycle quanta of just over 20000 clock cycles. But they are not due to User-OS transitions.
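As a consistency check on these records, the overcount should equal the difference between the rdtsc and ref_tsc ratios multiplied by the number of elapsed REF_XCLK ticks. The Python sketch below assumes that REF_XCLK runs at exactly 100 MHz, so that the "measured XREF_CLK time" field converts to ticks by multiplying by 1e8; the function name is hypothetical.

```python
REF_XCLK_HZ = 100e6  # assumption: REF_XCLK is exactly 100 MHz on this CPU


def overcount_from_ratios(xclk_seconds, ratio_rdtsc, ratio_ref_tsc):
    """Estimate rdtsc - reftsc (in TSC cycles) from the fields of one log record."""
    xclk_ticks = round(xclk_seconds * REF_XCLK_HZ)
    return round((ratio_rdtsc - ratio_ref_tsc) * xclk_ticks)


if __name__ == "__main__":
    # Third record above (the one containing a halt), then the first record.
    print(overcount_from_ratios(0.00018121, 25.13393301, 24.00000000))
    print(overcount_from_ratios(0.00018200, 24.00032967, 24.00131868))
```

Fed the values from the third and first records, this reproduces the reported overcounts of 20548 and -18, so the ratios and the overcount column are telling the same story.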

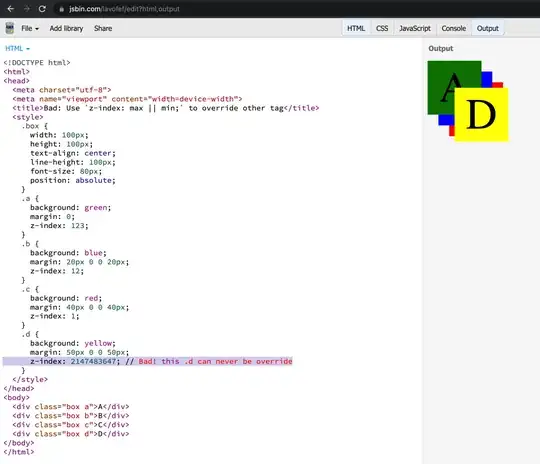

Here is a visual plot:

Saturated Blue Dots: 0 standard deviations (close to mean)

Saturated Red Dots: +3 standard deviations (above mean)

Saturated Green Dots: -3 standard deviations (below mean)

There is a marked difference before, during and after roughly 250000 nanoseconds of sustained decrementing.

Nanos < 250000

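Before the threshold, the CSV logs look like this (the columns are the ref_tsc:ref_xclk ratio, the ref_core:ref_xclk ratio, the rdtsc:ref_xclk ratio, the rdtsc-reftsc overcount, the core clock cycles spent in the OS, and the number of User-OS transitions):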

24.00,33.00,24.00,-14,0,0

24.00,33.00,24.00,-20,0,0

24.00,33.00,24.00,-4,3639,1

24.00,33.00,24.00,-20,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,-14,0,0

24.00,33.00,24.00,-14,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,-44,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,-14,0,0

24.00,33.00,24.00,-20,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,-20,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,12,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,10,0,0

24.00,33.00,24.00,-20,0,0

24.00,33.00,24.00,32,3171,1

24.00,33.00,24.00,-20,0,0

24.00,33.00,24.00,10,0,0

These logs indicate a TurboBoost ratio perfectly stable at 33x, an RDTSC that counts in synchrony with REFTSC at 24x the rate of REF_XCLK (100 MHz), negligible overcounting, and typically 0 cycles spent in the kernel and thus 0 transitions into the kernel. Kernel interrupts take approximately 3000 reference cycles to service.

Nanos == 250000

At the critical threshold, the log contains clumps of 20000 cycle overcounts, and the overcounts correlate very well with non-integer estimated multiplier values between 33x and 34x:

24.00,33.00,24.00,-2,0,0

24.00,33.00,24.00,-2,0,0

24.00,33.00,24.00,2,0,0

24.00,33.00,24.00,22,0,0

24.00,33.00,24.00,-2,0,0

24.00,33.00,24.00,-2,0,0

24.00,33.00,24.00,-2,0,0

24.00,33.05,25.11,20396,0,0

24.00,33.38,25.12,20212,0,0

24.00,33.39,25.12,20308,0,0

24.00,33.42,25.12,20296,0,0

24.00,33.43,25.11,20158,0,0

24.00,33.43,25.11,20178,0,0

24.00,33.00,24.00,-4,0,0

24.00,33.00,24.00,20,3920,1

24.00,33.00,24.00,-2,0,0

24.00,33.00,24.00,-4,0,0

24.00,33.44,25.13,20396,0,0

24.00,33.46,25.11,20156,0,0

24.00,33.46,25.12,20268,0,0

24.00,33.41,25.12,20322,0,0

24.00,33.40,25.11,20216,0,0

24.00,33.46,25.12,20168,0,0

24.00,33.00,24.00,-2,0,0

24.00,33.00,24.00,-2,0,0

24.00,33.00,24.00,-2,0,0

24.00,33.00,24.00,22,0,0

Nanos > 250000

The TurboBoost transition from 3.3 GHz to 3.4 GHz now happens reliably. As the nanos increase, the logs are filled with roughly integer multiples of 20000-cycle quanta. Eventually there are so many nanos that the Linux scheduler interrupts become permanent fixtures, but preemption is easily detected with the performance counters, and its effect is not at all similar to the TurboBoost halts.

24.00,33.75,24.45,20166,0,0

24.00,33.78,24.45,20302,0,0

24.00,33.78,24.45,20202,0,0

24.00,33.68,24.91,41082,0,0

24.00,33.31,24.90,40998,0,0

24.00,33.70,25.30,58986,3668,1

24.00,33.74,24.42,18798,0,0

24.00,33.74,24.45,20172,0,0

24.00,33.77,24.45,20156,0,0

24.00,33.78,24.45,20258,0,0

24.00,33.78,24.45,20240,0,0

24.00,33.77,24.42,18826,0,0

24.00,33.75,24.45,20372,0,0

24.00,33.76,24.42,18798,4081,1

24.00,33.74,24.41,18460,0,0

24.00,33.75,24.45,20234,0,0

24.00,33.77,24.45,20284,0,0

24.00,33.78,24.45,20150,0,0

24.00,33.78,24.45,20314,0,0

24.00,33.78,24.42,18766,0,0

24.00,33.71,25.36,61608,0,0

24.00,33.76,24.45,20336,0,0

24.00,33.78,24.45,20234,0,0

24.00,33.78,24.45,20210,0,0

24.00,33.78,24.45,20210,0,0

24.00,33.00,24.00,-10,0,0

24.00,33.00,24.00,4,0,0

24.00,33.00,24.00,18,0,0

24.00,33.00,24.00,2,4132,1

24.00,33.00,24.00,44,0,0
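The "integer multiples of a quantum" pattern can be checked mechanically. The Python sketch below parses rows in the CSV format above and estimates how many halts each sample contains, assuming a quantum of about 20500 cycles; the function name and the constant are illustrative choices, not part of the demo application.

```python
import csv
import io

QUANTUM_CYCLES = 20500  # assumed approximate cost of one transition


def transitions_per_sample(csv_text):
    """Estimate how many ~20500-cycle halts each CSV row contains."""
    counts = []
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) != 6:
            continue  # skip blank or malformed lines
        overcount = int(row[3])  # fourth column: rdtsc-reftsc overcount
        counts.append(round(overcount / QUANTUM_CYCLES))
    return counts


SAMPLE = """\
24.00,33.75,24.45,20166,0,0
24.00,33.68,24.91,41082,0,0
24.00,33.70,25.30,58986,3668,1
24.00,33.74,24.42,18798,0,0
24.00,33.71,25.36,61608,0,0
24.00,33.00,24.00,-10,0,0
"""

if __name__ == "__main__":
    print(transitions_per_sample(SAMPLE))
```

On these six rows, taken from the log above, the estimate is one, two, three, one, three and zero halts respectively.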

Conclusions

The TurboBoost machinery is responsible for the discrepancy in RDTSC-REFTSC. This discrepancy can be used to determine that a TurboBoost state transition from 3.3 GHz to 3.4 GHz takes approximately 20500 reference clock cycles (8.5us), and is triggered no later than about 250000 ns (250us; 600000 reference clock cycles) after entry into add_calibration(), when the processor decides that the workload is sufficiently intense to deserve a frequency-voltage scaling.
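The unit conversions behind these figures are simple enough to verify in a few lines of Python. The sketch assumes that reference cycles tick at the nominal 2.4 GHz (24 x 100 MHz, as the logs show); the helper names are hypothetical.

```python
NOMINAL_HZ = 2.4e9  # nominal (TSC) frequency of the i7-4700MQ


def cycles_to_us(cycles, hz=NOMINAL_HZ):
    """Convert reference cycles to microseconds."""
    return cycles / hz * 1e6


def us_to_cycles(us, hz=NOMINAL_HZ):
    """Convert microseconds to reference cycles."""
    return us * 1e-6 * hz


if __name__ == "__main__":
    print(f"{cycles_to_us(20500):.2f} us per transition")
    print(f"{us_to_cycles(250):.0f} reference cycles before the transition")
    print(f"deciding:transitioning ratio of about {us_to_cycles(250) / 20500:.1f}")
```

This gives roughly 8.54us per transition, 600000 reference cycles of lead time, and a ratio of a little under 30 between the two.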

Future Work

More research needs to be done to determine how the transition cost varies with frequency, and whether the hardware selecting the power state can be tuned. Of particular interest to me are "Turbo Attenuation Units", hints of which I've seen in the far reaches of the web. Perhaps the Turbo hardware has a configurable time window? Currently the ratio of time spent deciding to time spent transitioning is about 30:1 (600000 cycles to 20000 cycles, or roughly 250us to 8.5us). Can it be tuned?