I'm looking to perform optical character recognition (OCR) on a display, and I want the program to work under different lighting conditions. To do this, I need to process and threshold the image so that there is no noise surrounding each digit. That lets me detect the contour of each digit and perform OCR from there. The threshold value therefore has to adapt to the lighting conditions. I've tried adaptive thresholding, but I haven't been able to get it to work.

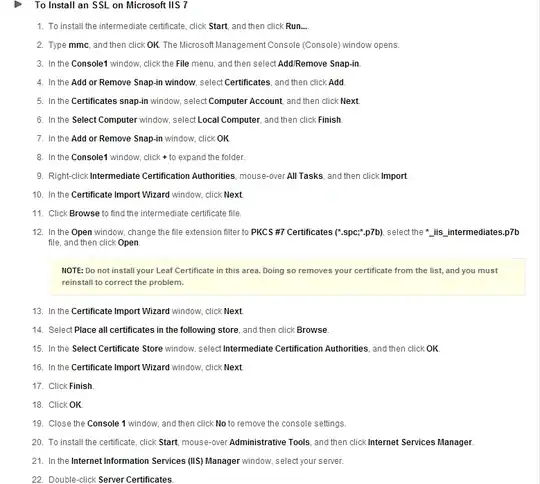

My image processing is simple: load the image (i), convert i to grayscale (g), apply histogram equalization to g (h), and apply a binary threshold to h with threshold value t. I've worked with a couple of different datasets and found that the threshold values that make the OCR work consistently lie within the densest range of the histogram of (h), which is the only part of the plot without gaps.

![A histogram of (h). The values t=[190,220] are optimal for OCR](../../images/3819048084.webp)

A histogram of (h). The values t=[190,220] are optimal for OCR.

A more complete set of images describing my problem is available here: https://i.stack.imgur.com/qsFp0.jpg

My current solution, which works but is clunky and slow, checks the following conditions:

1. There must be 3 digits

2. The first digit must be reasonably small in size

3. There must be at least one contour recognized as a digit

4. The digit must be recognized in the digit dictionary

If any of these checks fails, the threshold is increased by 10 (starting from a low value) and the whole process is repeated.
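The retry strategy above amounts to a linear search over t. A minimal sketch, assuming a hypothetical callback `is_valid` that stands in for the four checks (digit count, first-digit size, contour found, dictionary match) and an assumed starting value of 100:

```python
from typing import Callable, Optional

import numpy as np


def find_working_threshold(
    h: np.ndarray,
    is_valid: Callable[[np.ndarray], bool],  # hypothetical: wraps the four checks
    start: int = 100,                        # assumed "low" starting value
    step: int = 10,
    stop: int = 255,
) -> Optional[tuple[int, np.ndarray]]:
    """Raise a global threshold in fixed steps until is_valid accepts the result."""
    for t in range(start, stop, step):
        # Same rule as cv2.THRESH_BINARY: values > t become 255, others 0.
        binary = np.where(h > t, 255, 0).astype(np.uint8)
        if is_valid(binary):
            return t, binary
    return None  # no threshold in the searched range passed the checks
```

Each iteration runs the full contour and digit validation, so the run time grows with the number of steps needed; this is why a direct estimate of t from the histogram would be attractive.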

The fact that I can recognize the optimal threshold value on the histogram of (h) may just be confirmation bias, but I'd like to know whether there's a way to extract that value programmatically. This is different from how I've used histograms before, which has mostly involved finding peaks and valleys.
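One way to turn "the only part of the plot without gaps" into a number is to build the 256-bin histogram of (h) and find the longest run of consecutive non-empty bins. The sketch below does that with NumPy. Picking the midpoint of the run as t is an assumption for illustration, and whether this run really tracks the working threshold across lighting conditions is exactly the open question above.

```python
import numpy as np


def densest_gap_free_range(h: np.ndarray) -> tuple[int, int]:
    """Return (lo, hi), the longest run of consecutive occupied gray levels in h.

    A level is "occupied" if its count in the 256-bin histogram is > 0.
    After equalization, empty bins appear between levels that were
    stretched apart, so occupied runs show up as the gap-free part of the plot.
    """
    counts = np.bincount(h.ravel(), minlength=256)
    occupied = counts > 0
    best_lo, best_hi = 0, -1
    run_start = None
    for level, occ in enumerate(occupied):
        if occ and run_start is None:
            run_start = level
        # Close the current run at the first empty bin or at the last level.
        if run_start is not None and (not occ or level == len(occupied) - 1):
            run_end = level if occ else level - 1
            if run_end - run_start > best_hi - best_lo:
                best_lo, best_hi = run_start, run_end
            run_start = None
    return best_lo, best_hi
```

For a histogram like the one shown, this would be used as `lo, hi = densest_gap_free_range(h)` and then, under the midpoint assumption, `t = (lo + hi) // 2`.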

I'm using cv2 for image processing and matplotlib.pyplot for the histogram plots.