I was doing a test that required an algorithm for Binary Tomography. A set of 38 test values are supplied that test correctness, but there is also a time limit of 1 CPU sec to complete all the tests. The problem is as follows:

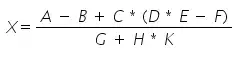

Output “Yes” if there exists an m-by-n matrix A, with each element either being 0 or 1, such that

Otherwise output “No”.

For each test, 2 arrays are provided:

- r (the sum of each row in the matrix)

- c (the sum of each column in the matrix)

In the equation:

- m is the length of the r array, where 1 <= m

- n is the length of the c array, where n <= 1000

- ri is an element of r, where 0 <= ri <= n

- cj is an element of c, where 0 <= cj <= m

A "Yes" example

m = 3; n = 4; r = [2, 3, 2]; c = [1, 1, 3, 2];

A "No" example

m = 3; n = 3; r = [0, 0, 3]; c = [0, 0, 3];

I have a solution that appears to give correct answers, however it only manages 12 / 38 tests before the 1 second of CPU time is exceeded.

I originally wrote the code in ES5 and then went back and converted to to ES3 to try and get more performance out of it. (originally managed 9 tests as ES5). There doesn't seem a great deal left that I can do to the current algorithm to improve the performance (unless I am mistaken). This leads me to believe that my algorithm is at fault an that there must be a faster algorithm for doing this. I did a ton of reading trying to find one and ended up with a headache :)

So I'm turning to the community to see if anyone can suggest a faster algorithm than I am currently using.

'use strict';

const ZEROS = (function (seed) {

let string = seed;

for (let i = 0; i < 19; i += 1) {

string += seed;

}

return string;

}('00000000000000000000000000000000000000000000000000'));

const ZEROSLEN = ZEROS.length;

const permutate = function (n, ri) {

const result = [];

const memoize = {};

let count = 0;

do {

const bin = count.toString(2);

if (ZEROSLEN + bin.length > ZEROSLEN + n) {

break;

}

if (!memoize[bin] && (bin.split('1').length - 1) === ri) {

const string = (ZEROS + bin).slice(-n);

const sLen = string.length;

const perm = new Array(sLen);

for (let i = sLen - 1; i >= 0; i -= 1) {

perm[i] = +string[i];

}

memoize[bin] = result.push(perm);

}

count += 1;

} while (count);

return result;

};

const getMatrixSum = function (n, matrix) {

const mLength = matrix.length;

const rows = new Array(mLength);

const a = new Array(n);

const last = mLength - 1;

for (let x = n - 1; x >= 0; x -= 1) {

for (let y = last; y >= 0; y -= 1) {

rows[y] = matrix[y][x];

}

let sum = 0;

for (let i = rows.length - 1; i >= 0; i -= 1) {

sum += rows[i];

}

a[x] = sum;

}

return a;

};

const isEqual = function (a, b) {

const length = a.length;

if (length !== b.length) {

return false;

}

for (let i = length - 1; i >= 0; i -= 1) {

if (a[i] !== b[i]) {

return false;

}

}

return true;

};

const addRow = function (i, prev, r, c, result) {

if (result) {

return result;

}

const n = c.length;

const ri = r[i];

if (ri < 0 || ri > n) {

throw new RangeError('ri out of range');

}

const p = permutate(n, ri);

const m = r.length;

const rsLast = m - 1;

const nextI = i + 1;

for (let x = p.length - 1; x >= 0; x -= 1) {

const permutation = p[x];

const next = prev.slice();

next.push(permutation);

const sums = getMatrixSum(n, next);

if (i < rsLast) {

let memo = 0;

for (let j = sums.length - 1; j >= 0; j -= 1) {

if (sums[j] > c[j]) {

memo += 1;

}

}

if (!memo && addRow(nextI, next, r, c, result)) {

return true;

}

} else if (isEqual(sums, c)) {

return true;

}

}

return false;

};

const isSolvable = function (r, c) {

const m = r.length;

const n = c.length;

if (m < 1 || n > 1000) {

throw new Error('Bad data');

}

for (let j = n; j >= 0; j -= 1) {

const cj = c[j];

if (cj < 0 || cj > m) {

throw new RangeError('cj out of range');

}

}

return addRow(0, [], r, c, false) ? 'Yes' : 'No';

};

console.log(isSolvable([2, 3, 2], [1, 1, 3, 2]));

console.log(isSolvable([0, 0, 3], [0, 0, 3]));It may be worth noting that the tests are being run on SpiderMonkey version JavaScript-C24.2.0

Refs: