After I got the feedback that my first answer did not match the expectations of the questioner, I modified my sources to draw the DXT1 raw data into an OpenGL texture.

So, this answer addresses specifically this part of the question:

> However, I don't understand how to construct a usable texture object out of the raw data and draw that to the frame buffer.

The modifications are strongly "inspired" by the Qt docs Cube OpenGL ES 2.0 example.

The essential part is how the QOpenGLTexture is constructed out of the DXT1 raw data:

_pQGLTex = new QOpenGLTexture(QOpenGLTexture::Target2D);

_pQGLTex->setFormat(QOpenGLTexture::RGB_DXT1);

_pQGLTex->setSize(_img.w, _img.h);

_pQGLTex->allocateStorage(QOpenGLTexture::RGBA, QOpenGLTexture::UInt8);

_pQGLTex->setCompressedData((int)_img.data.size(), _img.data.data());

_pQGLTex->setMinificationFilter(QOpenGLTexture::Nearest);

_pQGLTex->setMagnificationFilter(QOpenGLTexture::Linear);

_pQGLTex->setWrapMode(QOpenGLTexture::ClampToEdge);
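The byte count passed to setCompressedData() follows from the DXT1 layout: every 4x4 pixel block is stored in 8 bytes, and partial blocks at the right and bottom edges still take a full block. A minimal sketch of that computation for mip level 0 only, where `dxt1DataSize` is a hypothetical helper name which does not appear in the sample code:

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper (not part of the sample code): number of bytes of
// DXT1 data for a w x h image, mip level 0 only.
std::size_t dxt1DataSize(int w, int h)
{
    assert(w >= 0 && h >= 0);
    // Round up to whole 4x4 blocks in each direction.
    const std::size_t blocksX = (static_cast<std::size_t>(w) + 3) / 4;
    const std::size_t blocksY = (static_cast<std::size_t>(h) + 3) / 4;
    return blocksX * blocksY * 8; // 8 bytes per DXT1 block
}
```

This is the same arithmetic the `Image` constructor in the complete sample uses to size its `data` vector.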

And this is the complete sample code DXT1-QTexture-QImage.cc:

#include <cstdint>

#include <cstring>

#include <fstream>

#include <utility>

#include <vector>

#include <QtWidgets>

#include <QOpenGLFunctions_4_0_Core>

#ifndef _WIN32

typedef quint32 DWORD;

#endif // _WIN32

/* borrowed from:

* https://msdn.microsoft.com/en-us/library/windows/desktop/bb943984(v=vs.85).aspx

*/

struct DDS_PIXELFORMAT {

DWORD dwSize;

DWORD dwFlags;

DWORD dwFourCC;

DWORD dwRGBBitCount;

DWORD dwRBitMask;

DWORD dwGBitMask;

DWORD dwBBitMask;

DWORD dwABitMask;

};

/* borrowed from:

* https://msdn.microsoft.com/en-us/library/windows/desktop/bb943982(v=vs.85).aspx

*/

struct DDS_HEADER {

DWORD dwSize;

DWORD dwFlags;

DWORD dwHeight;

DWORD dwWidth;

DWORD dwPitchOrLinearSize;

DWORD dwDepth;

DWORD dwMipMapCount;

DWORD dwReserved1[11];

DDS_PIXELFORMAT ddspf;

DWORD dwCaps;

DWORD dwCaps2;

DWORD dwCaps3;

DWORD dwCaps4;

DWORD dwReserved2;

};

struct Image {

int w, h;

std::vector<std::uint8_t> data;

explicit Image(int w = 0, int h = 0):

w(w), h(h), data(((w + 3) / 4) * ((h + 3) / 4) * 8)

{ }

~Image() = default;

Image(const Image&) = delete;

Image& operator=(const Image&) = delete;

Image(Image &&img): w(img.w), h(img.h), data(std::move(img.data)) { }

};

Image loadDXT1(const char *file)

{

std::ifstream fIn(file, std::ios::in | std::ios::binary);

// read magic code

enum { sizeMagic = 4 }; char magic[sizeMagic];

if (!fIn.read(magic, sizeMagic)) {

return Image(); // ERROR: read failed

}

if (strncmp(magic, "DDS ", sizeMagic) != 0) {

return Image(); // ERROR: wrong format (wrong magic code)

}

// read header

DDS_HEADER header;

if (!fIn.read((char*)&header, sizeof header)) {

return Image(); // ERROR: read failed

}

qDebug() << "header size:" << sizeof header;

// get raw data (DXT1 stores each 4x4 pixel block in 8 bytes, see Image)

Image img(header.dwWidth, header.dwHeight);

qDebug() << "data size:" << img.data.size();

if (!fIn.read((char*)img.data.data(), img.data.size())) {

return Image(); // ERROR: read failed

}

qDebug() << "processed image size:" << fIn.tellg();

// done

return img;

}

const char *vertexShader =

"#ifdef GL_ES\n"

"// Set default precision to medium\n"

"precision mediump int;\n"

"precision mediump float;\n"

"#endif\n"

"\n"

"uniform mat4 mvp_matrix;\n"

"\n"

"attribute vec4 a_position;\n"

"attribute vec2 a_texcoord;\n"

"\n"

"varying vec2 v_texcoord;\n"

"\n"

"void main()\n"

"{\n"

" // Calculate vertex position in screen space\n"

" gl_Position = mvp_matrix * a_position;\n"

"\n"

" // Pass texture coordinate to fragment shader\n"

" // Value will be automatically interpolated to fragments inside polygon faces\n"

" v_texcoord = a_texcoord;\n"

"}\n";

const char *fragmentShader =

"#ifdef GL_ES\n"

"// Set default precision to medium\n"

"precision mediump int;\n"

"precision mediump float;\n"

"#endif\n"

"\n"

"uniform sampler2D texture;\n"

"\n"

"varying vec2 v_texcoord;\n"

"\n"

"void main()\n"

"{\n"

" // Set fragment color from texture\n"

"#if 0 // test check tex coords\n"

" gl_FragColor = vec4(1, v_texcoord.x, v_texcoord.y, 1);\n"

"#else // (not) 0;\n"

" gl_FragColor = texture2D(texture, v_texcoord);\n"

"#endif // 0\n"

"}\n";

struct Vertex {

QVector3D coord;

QVector2D texCoord;

Vertex(qreal x, qreal y, qreal z, qreal s, qreal t):

coord(x, y, z), texCoord(s, t)

{ }

};

class OpenGLWidget: public QOpenGLWidget, public QOpenGLFunctions_4_0_Core {

private:

const Image &_img;

QOpenGLShaderProgram _qGLSProg;

QOpenGLBuffer _qGLBufArray;

QOpenGLBuffer _qGLBufIndex;

QOpenGLTexture *_pQGLTex;

public:

explicit OpenGLWidget(const Image &img):

QOpenGLWidget(nullptr),

_img(img),

_qGLBufArray(QOpenGLBuffer::VertexBuffer),

_qGLBufIndex(QOpenGLBuffer::IndexBuffer),

_pQGLTex(nullptr)

{ }

virtual ~OpenGLWidget()

{

makeCurrent();

delete _pQGLTex;

_qGLBufArray.destroy();

_qGLBufIndex.destroy();

doneCurrent();

}

// disabled: (to prevent accidental usage)

OpenGLWidget(const OpenGLWidget&) = delete;

OpenGLWidget& operator=(const OpenGLWidget&) = delete;

protected:

virtual void initializeGL() override

{

initializeOpenGLFunctions();

glClearColor(0, 0, 0, 1);

initShaders();

initGeometry();

initTexture();

}

virtual void paintGL() override

{

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

_pQGLTex->bind();

QMatrix4x4 mat; mat.setToIdentity();

_qGLSProg.setUniformValue("mvp_matrix", mat);

_qGLSProg.setUniformValue("texture", 0);

// draw geometry

_qGLBufArray.bind();

_qGLBufIndex.bind();

quintptr offset = 0;

int coordLocation = _qGLSProg.attributeLocation("a_position");

_qGLSProg.enableAttributeArray(coordLocation);

_qGLSProg.setAttributeBuffer(coordLocation, GL_FLOAT, offset, 3, sizeof(Vertex));

offset += sizeof(QVector3D);

int texCoordLocation = _qGLSProg.attributeLocation("a_texcoord");

_qGLSProg.enableAttributeArray(texCoordLocation);

_qGLSProg.setAttributeBuffer(texCoordLocation, GL_FLOAT, offset, 2, sizeof(Vertex));

glDrawElements(GL_TRIANGLE_STRIP, 4, GL_UNSIGNED_SHORT, 0);

//glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_SHORT, 0);

}

private:

void initShaders()

{

if (!_qGLSProg.addShaderFromSourceCode(QOpenGLShader::Vertex,

QString::fromLatin1(vertexShader))) close();

if (!_qGLSProg.addShaderFromSourceCode(QOpenGLShader::Fragment,

QString::fromLatin1(fragmentShader))) close();

if (!_qGLSProg.link()) close();

if (!_qGLSProg.bind()) close();

}

void initGeometry()

{

Vertex vertices[] = {

// x y z s t

{ -1.0f, -1.0f, 0.0f, 0.0f, 0.0f },

{ +1.0f, -1.0f, 0.0f, 1.0f, 0.0f },

{ +1.0f, +1.0f, 0.0f, 1.0f, 1.0f },

{ -1.0f, +1.0f, 0.0f, 0.0f, 1.0f }

};

enum { nVtcs = sizeof vertices / sizeof *vertices };

// OpenGL ES doesn't have QUADS. A TRIANGLE_STRIP works as well.

GLushort indices[] = { 3, 0, 2, 1 };

//GLushort indices[] = { 0, 1, 2, 0, 2, 3 };

enum { nIdcs = sizeof indices / sizeof *indices };

_qGLBufArray.create();

_qGLBufArray.bind();

_qGLBufArray.allocate(vertices, nVtcs * sizeof (Vertex));

_qGLBufIndex.create();

_qGLBufIndex.bind();

_qGLBufIndex.allocate(indices, nIdcs * sizeof (GLushort));

}

void initTexture()

{

#if 0 // test whether texturing works at all

//_pQGLTex = new QOpenGLTexture(QImage("test.png").mirrored());

_pQGLTex = new QOpenGLTexture(QImage("test-dxt1.dds").mirrored());

#else // (not) 0

_pQGLTex = new QOpenGLTexture(QOpenGLTexture::Target2D);

_pQGLTex->setFormat(QOpenGLTexture::RGB_DXT1);

_pQGLTex->setSize(_img.w, _img.h);

_pQGLTex->allocateStorage(QOpenGLTexture::RGBA, QOpenGLTexture::UInt8);

_pQGLTex->setCompressedData((int)_img.data.size(), _img.data.data());

#endif // 0

_pQGLTex->setMinificationFilter(QOpenGLTexture::Nearest);

_pQGLTex->setMagnificationFilter(QOpenGLTexture::Nearest);

_pQGLTex->setWrapMode(QOpenGLTexture::ClampToEdge);

}

};

int main(int argc, char **argv)

{

qDebug() << "Qt Version:" << QT_VERSION_STR;

QApplication app(argc, argv);

// load a DDS image to get DXT1 raw data

Image img = loadDXT1("test-dxt1.dds");

// setup GUI

QMainWindow qWin;

OpenGLWidget qGLView(img);

/* I apply brute force to get the main window to a sufficient size

* -> not appropriate for a production solution...

*/

qGLView.setMinimumSize(img.w, img.h);

qWin.setCentralWidget(&qGLView);

qWin.show();

// exec. application

return app.exec();

}

For the test, I used (again) the sample file test-dxt1.dds.
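Note that the loader above takes for granted that the file really holds DXT1 data. A small guard could verify this from the two header fields `ddspf.dwFlags` and `ddspf.dwFourCC` before the raw data is read. The following is only a sketch: `makeFourCC`, `isDXT1`, and `kDdpfFourCC` are hypothetical names which are not part of the sample, and the value 0x4 is the DDPF_FOURCC flag from the DDS_PIXELFORMAT documentation.

```cpp
#include <cassert>
#include <cstdint>

// DDPF_FOURCC: set in DDS_PIXELFORMAT::dwFlags when dwFourCC is valid.
constexpr std::uint32_t kDdpfFourCC = 0x4;

// Packs four characters into a FourCC code (first character in the low byte).
constexpr std::uint32_t makeFourCC(char a, char b, char c, char d)
{
    return  static_cast<std::uint32_t>(static_cast<unsigned char>(a))
         | (static_cast<std::uint32_t>(static_cast<unsigned char>(b)) << 8)
         | (static_cast<std::uint32_t>(static_cast<unsigned char>(c)) << 16)
         | (static_cast<std::uint32_t>(static_cast<unsigned char>(d)) << 24);
}

// Hypothetical helper: true if the pixel format describes DXT1 data.
bool isDXT1(std::uint32_t pixelFormatFlags, std::uint32_t fourCC)
{
    return (pixelFormatFlags & kDdpfFourCC) != 0
        && fourCC == makeFourCC('D', 'X', 'T', '1');
}
```

In loadDXT1(), this could be called as `isDXT1(header.ddspf.dwFlags, header.ddspf.dwFourCC)` right after the header has been read, returning an empty Image if it fails.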

And this is, how it looks (sample compiled with VS2013 and Qt 5.9.2):

Notes:

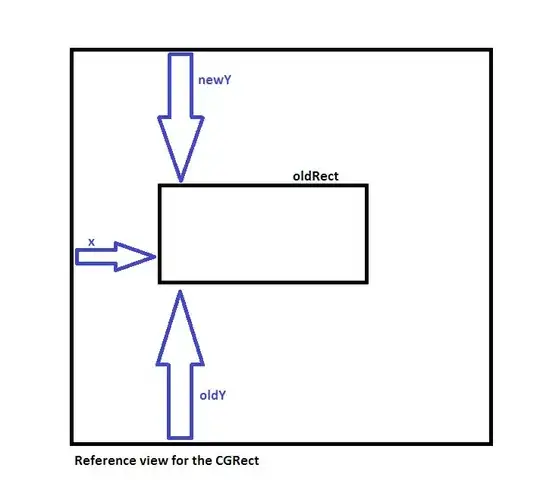

The texture is displayed upside-down. Please consider that the original sample, as well as my (disabled) code for loading the texture from a QImage, applies QImage::mirrored(). It seems that QImage stores the data from top to bottom whereas OpenGL textures expect the opposite: from bottom to top. I guess the easiest fix would be to mirror the image after the texture has been converted back to a QImage.
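For uncompressed pixel data, such a vertical flip is just a swap of rows. The following sketch shows the idea in plain C++ as a stand-in for what QImage::mirrored() does with its default arguments. `flipRows` is a hypothetical helper, and it assumes tightly packed rows without padding. It is not applicable to the DXT1 data itself, which is organized in 4x4 blocks.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: flips an uncompressed image vertically, in place.
// Assumes tightly packed rows of w * bytesPerPixel bytes each.
void flipRows(std::vector<std::uint8_t> &pixels, int w, int h, int bytesPerPixel)
{
    assert(w >= 0 && h >= 0 && bytesPerPixel > 0);
    const std::ptrdiff_t rowSize = static_cast<std::ptrdiff_t>(w) * bytesPerPixel;
    assert(pixels.size() == static_cast<std::size_t>(rowSize * h));
    // Swap the top row with the bottom row and move towards the middle.
    for (std::ptrdiff_t top = 0, bottom = h - 1; top < bottom; ++top, --bottom) {
        std::swap_ranges(pixels.begin() + top * rowSize,
                         pixels.begin() + (top + 1) * rowSize,
                         pixels.begin() + bottom * rowSize);
    }
}
```

An alternative which avoids touching the pixel data at all would be to use `1 - t` as the texture coordinate in the vertex data.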

My original intention was to also implement the part which reads the texture back into a QImage (as described/sketched in the question). In general, I have already done something like this in OpenGL (but without Qt). (I recently posted another answer, OpenGL offscreen render, about this.) I have to admit that I had to cancel this plan due to a "time-out" issue. This was caused by some problems which took me quite long to fix. I will share these experiences in the following as I think they could be helpful for others.

To find sample code for the initialization of a QOpenGLTexture with DXT1 data, I did a long Google search, without success. Hence, I scanned the Qt doc. of QOpenGLTexture by eye for methods which looked promising/necessary to get it working. (I have to admit that I had already done OpenGL texturing successfully, but in pure OpenGL.) Finally, I got the actual set of necessary functions. It compiled and started but all I got was a black window. (Every time I start something new with OpenGL, it first ends up in a black or blue window, depending on which clear color I used.) So, I had a look into qopengltexture.cpp on woboq.org (specifically into the implementation of QOpenGLTexture::QOpenGLTexture(QImage&, ...)). This didn't help much; they do it very similarly to what I tried.

The most essential problem I could fix by discussing this program with a colleague, who contributed the final hint: I had tried to get this running using QOpenGLFunctions. The last steps (toward the final fix) were trying this out with

_pQGLTex = new QOpenGLTexture(QImage("test.png").mirrored());

(worked) and

_pQGLTex = new QOpenGLTexture(QImage("test-dxt1.dds").mirrored());

(did not work).

This brought us to the idea that QOpenGLFunctions (which is claimed to be compatible with OpenGL ES 2.0) just seems not to enable S3 texture loading. Hence, we replaced QOpenGLFunctions by QOpenGLFunctions_4_0_Core and, suddenly, it worked.

I did not override the QOpenGLWidget::resizeGL() method as I use an identity matrix for the model-view-projection transformation of the OpenGL rendering. This is intended to make model space and clip space identical. Instead, I built a rectangle (-1, -1, 0) - (+1, +1, 0) which should exactly fill the (visible part of the) clip space x-y plane (and it does).

This can be checked visually by enabling the left-in debug code in the shader

gl_FragColor = vec4(1, v_texcoord.x, v_texcoord.y, 1);

which uses the texture coordinates themselves as green and blue color components. This makes a nicely colored rectangle with red in the lower-left corner, magenta (red and blue) in the upper-left, yellow (red and green) in the lower-right, and white (red, green, and blue) in the upper-right corner. It shows that the rectangle fits perfectly.
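The corner colors can be reproduced on the CPU by mirroring the debug line of the fragment shader. This is only an illustration: `debugColor` is a hypothetical function which does not exist in the sample, and it returns the color as (red, green, blue, alpha).

```cpp
#include <array>
#include <cassert>

// Hypothetical CPU-side mirror of the debug line in the fragment shader:
//   gl_FragColor = vec4(1, v_texcoord.x, v_texcoord.y, 1);
std::array<float, 4> debugColor(float s, float t)
{
    assert(0.0f <= s && s <= 1.0f && 0.0f <= t && t <= 1.0f);
    return { 1.0f, s, t, 1.0f }; // red is constant, green = s, blue = t
}
```

With the texture coordinates from initGeometry(), `debugColor(0, 0)` yields red for the lower-left vertex and `debugColor(1, 1)` yields white for the upper-right one.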

As I forced the minimum size of the OpenGLWidget to the exact size of the texture image, the texture-to-pixel mapping should be 1:1. I checked what happens if magnification is set to Nearest: there was no visual difference.

I have to admit that the DXT1 data rendered as a texture looks much better than the decompression I've shown in my other answer. Considering that these are the exact same data (read by my nearly identical loader), this makes me think that my own decompression algorithm does not yet consider something (in other words: it still seems to be buggy). Hmm... (It seems that needs additional fixing.)
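For comparison, this is how I understand the decoding of a single DXT1 block. It is a sketch under assumptions, not the code from my other answer: all names (`Rgba`, `fromRgb565`, `mix`, `decodeDXT1Block`) are made up for illustration, the expansion from 5/6 bits to 8 bits uses bit replication, and the interpolation uses plain integer division, so a GPU driver may round slightly differently.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

struct Rgba { std::uint8_t r, g, b, a; };

// Expands a 16-bit RGB565 value to 8 bits per channel by bit replication.
Rgba fromRgb565(std::uint16_t c)
{
    const unsigned r = (c >> 11) & 0x1F, g = (c >> 5) & 0x3F, b = c & 0x1F;
    return { static_cast<std::uint8_t>((r << 3) | (r >> 2)),
             static_cast<std::uint8_t>((g << 2) | (g >> 4)),
             static_cast<std::uint8_t>((b << 3) | (b >> 2)),
             255 };
}

// Per-channel weighted mix (wa * a + wb * b) / (wa + wb), always opaque.
Rgba mix(Rgba a, Rgba b, unsigned wa, unsigned wb)
{
    const unsigned d = wa + wb;
    return { static_cast<std::uint8_t>((wa * a.r + wb * b.r) / d),
             static_cast<std::uint8_t>((wa * a.g + wb * b.g) / d),
             static_cast<std::uint8_t>((wa * a.b + wb * b.b) / d),
             255 };
}

// Decodes one 8-byte DXT1 block into 16 pixels (4 rows of 4 pixels).
std::array<Rgba, 16> decodeDXT1Block(const std::uint8_t *block)
{
    assert(block != nullptr);
    // Two little-endian RGB565 reference colors.
    const std::uint16_t c0 = static_cast<std::uint16_t>(block[0] | (block[1] << 8));
    const std::uint16_t c1 = static_cast<std::uint16_t>(block[2] | (block[3] << 8));
    std::array<Rgba, 4> palette;
    palette[0] = fromRgb565(c0);
    palette[1] = fromRgb565(c1);
    if (c0 > c1) { // four-color mode: two interpolated colors
        palette[2] = mix(palette[0], palette[1], 2, 1);
        palette[3] = mix(palette[0], palette[1], 1, 2);
    } else {       // three-color mode: index 3 is transparent black
        palette[2] = mix(palette[0], palette[1], 1, 1);
        palette[3] = Rgba{ 0, 0, 0, 0 };
    }
    std::array<Rgba, 16> out;
    for (int y = 0; y < 4; ++y) {
        const unsigned rowBits = block[4 + y]; // one byte per row
        for (int x = 0; x < 4; ++x) {
            // 2 bits per pixel, the leftmost pixel in the lowest bits
            out[4 * y + x] = palette[(rowBits >> (2 * x)) & 0x3];
        }
    }
    return out;
}
```

Typical places where a hand-written decoder goes wrong are the comparison of `c0` and `c1` (it has to be done on the raw 16-bit values), the bit order of the indices within a row, and the expansion from 5/6 bits to 8 bits.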