I'm struggling to come up with a non-obfuscated, efficient way of using numpy to compute a self-correlation function for a set of 3D vectors.

I have a set of vectors in 3D space, stored in an array:

    a = array([[ 0.24463039,  0.58350592,  0.77438803],
               [ 0.30475903,  0.73007075,  0.61165238],
               [ 0.17605543,  0.70955876,  0.68229821],
               [ 0.32425896,  0.57572195,  0.7506    ],
               [ 0.24341381,  0.50183697,  0.83000565],
               [ 0.38364726,  0.62338687,  0.68132488]])

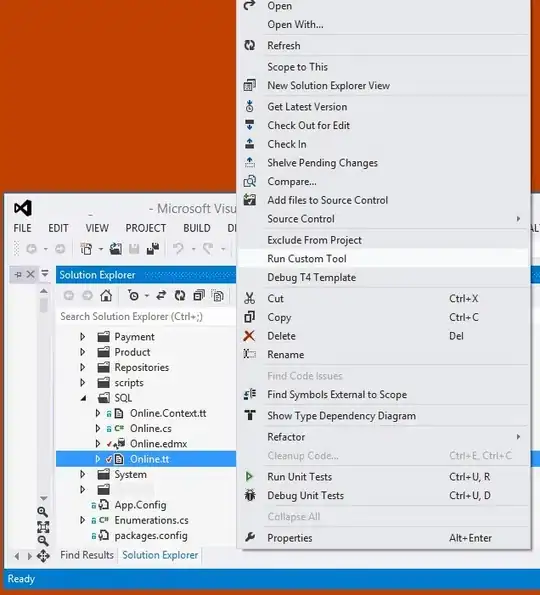

Their self-correlation function is defined as shown in the image above. In case the image doesn't stay available, the formula is also written out here:

$$C(t, \{v\}_n) = \frac{1}{n-t}\sum_{i=0}^{n-1-t}\vec v_i\cdot\vec v_{i+t}$$
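To make the definition concrete, here is a minimal sketch of a direct transcription of the formula into two nested loops. The function name `self_correlation_loops` is only illustrative (it is not from numpy or any other library), and the sketch assumes the vectors are the rows of an `(n, 3)` array. It is meant as a slow reference to check faster versions against, not as a recommended implementation.

```python
import numpy as np

def self_correlation_loops(vectors):
    """Reference version of C(t): average of v_i . v_{i+t} over all valid i.

    Hypothetical helper for illustration; O(n^2) dot products.
    """
    vectors = np.asarray(vectors, dtype=float)
    n = len(vectors)
    corr = np.zeros(n)
    for t in range(n):                  # lag t = 0 .. n-1
        total = 0.0
        for i in range(n - t):          # i = 0 .. n-1-t
            total += np.dot(vectors[i], vectors[i + t])
        corr[t] = total / (n - t)       # normalise by the number of pairs
    return corr

a = np.array([[0.24463039, 0.58350592, 0.77438803],
              [0.30475903, 0.73007075, 0.61165238],
              [0.17605543, 0.70955876, 0.68229821],
              [0.32425896, 0.57572195, 0.7506],
              [0.24341381, 0.50183697, 0.83000565],
              [0.38364726, 0.62338687, 0.68132488]])

c = self_correlation_loops(a)   # one value per lag t
```

With this definition, `c[0]` is the mean squared norm of the vectors, and `c[n-1]` is the single dot product of the first and last vector.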

I'm struggling to code this up in an efficient, non-obfuscated way. I can compute it explicitly with two nested for loops, but that's slow. There should be a fast way of doing it with one of numpy's built-in functions, but they seem to use an entirely different definition of the correlation function. A similar problem has been solved here, How can I use numpy.correlate to do autocorrelation? but that doesn't handle vectors. Does anyone know how I can solve this?