I have a sentence such as:

25 August 2003 League of Extraordinary Gentlemen: Sean Connery is one of the all time greats I have been a fan of his since the 1950's. 25 August 2003 League of Extraordinary Gentlemen

I pass it through the OpenAI sentiment code, which gives me a list of neuron weights whose length is equal to or a little greater than the number of characters in the text.
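A quick check of what the weights line up with, assuming the list below was produced from exactly this sentence: it has 192 values (38 rows of five plus two), which is close to the character count and far from the word count.

```python
sentence = ("25 August 2003 League of Extraordinary Gentlemen: Sean Connery is one of "
            "the all time greats I have been a fan of his since the 1950's. "
            "25 August 2003 League of Extraordinary Gentlemen")
n_weights = 192  # length of the weight list below: 38 rows of 5 values plus 2

print(len(sentence))              # 184 characters
print(len(sentence.split()))      # 33 whitespace-separated words
print(n_weights - len(sentence))  # 8 more weights than characters
```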

The neuron weights are:

```
[0.01258736, 0.03544582, 0.05184804, 0.05354257, 0.07339437,
 0.07021661, 0.06993681, 0.06021424, 0.0601177 , 0.04100083,
 0.03557627, 0.02574683, 0.02565657, 0.03435502, 0.04881989,
 0.08868718, 0.06816255, 0.05957553, 0.06767794, 0.06561323,
 0.06339648, 0.06271613, 0.06312297, 0.07370538, 0.08369936,
 0.09008111, 0.09059132, 0.08732472, 0.08742133, 0.08792272,
 0.08504769, 0.08541565, 0.09255819, 0.09240738, 0.09245031,
 0.09080137, 0.08733468, 0.08705935, 0.09201239, 0.113047  ,
 0.14285286, 0.15205048, 0.15249513, 0.14051639, 0.14070784,
 0.14526351, 0.14548902, 0.12730363, 0.11916814, 0.11097522,
 0.11390981, 0.12734678, 0.13625301, 0.13386811, 0.13413942,
 0.13782364, 0.14033082, 0.14971626, 0.14988877, 0.14171578,
 0.13999145, 0.1408006 , 0.1410009 , 0.13423227, 0.16819029,
 0.18822579, 0.18462598, 0.18283379, 0.16304792, 0.1634682 ,
 0.18733767, 0.22205424, 0.22615907, 0.22679318, 0.2353312 ,
 0.24562076, 0.24771859, 0.24478345, 0.25780812, 0.25183237,
 0.24660441, 0.2522405 , 0.26310056, 0.26156184, 0.26127928,
 0.26154354, 0.2380443 , 0.2447366 , 0.24580643, 0.22959644,
 0.23065038, 0.228564  , 0.23980206, 0.23410076, 0.40933537,
 0.436683  , 0.5319608 , 0.5273239 , 0.54030097, 0.55781454,
 0.5665511 , 0.58764166, 0.58651507, 0.5870301 , 0.5893866 ,
 0.58905166, 0.58955604, 0.5872186 , 0.58744675, 0.58569545,
 0.58279306, 0.58205146, 0.6251827 , 0.6278348 , 0.63121724,
 0.7156403 , 0.715524  , 0.714875  , 0.71317464, 0.7630029 ,
 0.75933087, 0.7571995 , 0.7563375 , 0.7583521 , 0.75923103,
 0.8155783 , 0.8082132 , 0.8096348 , 0.8114364 , 0.82923543,
 0.8229595 , 0.8196689 , 0.8070393 , 0.808637  , 0.82305557,
 0.82719535, 0.8210828 , 0.8697561 , 0.8547278 , 0.85224617,
 0.8521625 , 0.84694564, 0.8472206 , 0.8432255 , 0.8431826 ,
 0.8394848 , 0.83804935, 0.83134645, 0.8234757 , 0.82382894,
 0.82562804, 0.80014366, 0.7866942 , 0.78344023, 0.78955245,
 0.7862923 , 0.7851586 , 0.7805863 , 0.780684  , 0.79073226,
 0.79341674, 0.7970072 , 0.7966449 , 0.79455364, 0.7945448 ,
 0.79476243, 0.7928985 , 0.79307675, 0.79677683, 0.79655904,
 0.79619783, 0.7947823 , 0.7915144 , 0.7912799 , 0.795091  ,
 0.8032384 , 0.810835  , 0.8084989 , 0.8094493 , 0.8045582 ,
 0.80466574, 0.8074054 , 0.8075554 , 0.80178404, 0.7978776 ,
 0.78742194, 0.8119776 , 0.8119776 , 0.8119776 , 0.8119776 ,
 0.8119776 , 0.8119776 ]
```

The idea is that each character's background color should be shaded according to its neuron weight: green for positive weights, red for negative weights, and yellow when the weight is near 0.
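That rule is what a diverging colormap gives when its range is centred on 0. A minimal sketch with Matplotlib's `RdYlGn` (the colormap the function below uses); `weight_to_rgb` is a hypothetical helper, and the default ±1 range is an assumption chosen to fit the spread of the weights above.

```python
import matplotlib
matplotlib.use("Agg")  # no display needed for colour lookups
import matplotlib.pyplot as plt
from matplotlib.colors import Normalize

cmap = plt.get_cmap("RdYlGn")  # red -> yellow -> green


def weight_to_rgb(w, vmin=-1.0, vmax=1.0):
    """RGB background for one weight; with vmin == -vmax, 0 maps to the yellow midpoint."""
    r, g, b, _ = cmap(Normalize(vmin=vmin, vmax=vmax)(w))
    return r, g, b


for w in (-0.8, 0.0, 0.8):
    print(w, weight_to_rgb(w))

# The same 0.8 with vmin=-5, vmax=5 lands close to the midpoint: a pale yellow-green
print(weight_to_rgb(0.8, vmin=-5, vmax=5))
```

The wider the range relative to the data, the closer every weight is pushed toward the yellow centre.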

So for the text above, the shading should be green for positive weights and red for negative ones; since every weight here is positive, I expect no red at all.

But what it actually plots is different (see the image after the function below).

The function that shades the text according to the neuron weights is:

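```python
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns


def plot_neuron_heatmap(text, values, n_limit=80, savename='fig1.png',
                        cell_height=0.325, cell_width=0.15, dpi=100):
    # Show newlines as a literal "\n"
    text = text.replace('\n', '\\n')
    # One array element per character, padded with spaces to a multiple of n_limit
    text = np.array(list(text + ' ' * (-len(text) % n_limit)))

    if len(values) > text.size:
        # More values than cells: keep only the first text.size values
        values = np.array(values[:text.size])
    else:
        # Fewer values than cells: copy them into a zero-filled integer array
        t = values
        values = np.zeros(text.shape, dtype=int)
        values[:len(t)] = t

    # One heatmap row per n_limit characters
    text = text.reshape(-1, n_limit)
    values = values.reshape(-1, n_limit)

    # Boolean mask for the heatmap cells (True = hidden)
    mask = np.zeros(values.shape, dtype=bool)
    mask.ravel()[values.size:] = True
    mask = mask.reshape(-1, n_limit)

    plt.figure(figsize=(cell_width * n_limit, cell_height * len(text)))
    hmap = sns.heatmap(values, annot=text, mask=mask, fmt='', vmin=-5, vmax=5,
                       cmap='RdYlGn', xticklabels=False, yticklabels=False,
                       cbar=False)
    plt.subplots_adjust()
    plt.savefig(savename if savename else 'fig1.png', dpi=dpi)
```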

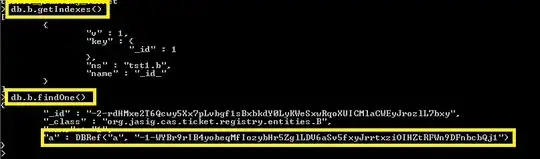

Where am I going wrong?

The function definition above was refined by @Mad Physicist (link).
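A minimal check of two lines in the function, assuming the 192 weights above are passed in unchanged for the 184-character sentence. The text is padded to 240 cells (the next multiple of `n_limit=80`), so `len(values) > text.size` is false and the `else` branch copies the float weights into an array created with an integer dtype; that cast truncates every weight between 0 and 1 to 0. With every value at 0 and `vmin=-5, vmax=5`, each cell lands on the midpoint of `RdYlGn`, a pale yellow. Separately, `mask.ravel()[values.size:]` starts one past the last index, so it selects nothing and the padding cells are never hidden. The sketch reproduces both effects, then shows one way to build the three grids with a float array and a mask over the padding. `prepare_heatmap_arrays` is a hypothetical helper, and trimming surplus values from the end is an assumption about where the extra values come from.

```python
import numpy as np

# --- Reproduce the two issues with a few weights from the list above ---
weights = np.array([0.01258736, 0.40933537, 0.8697561])

as_int = np.zeros(6, dtype=int)       # same pattern as the else branch
as_int[:len(weights)] = weights       # float -> int cast truncates 0.x to 0

demo_mask = np.zeros(6, dtype=bool)
demo_mask.ravel()[as_int.size:] = True  # slice starts past the end: nothing is set

print(as_int)            # [0 0 0 0 0 0]
print(demo_mask.any())   # False


# --- Hypothetical helper: float values, one cell per character, padding masked ---
def prepare_heatmap_arrays(text, values, n_limit=80):
    """Return (chars, vals, mask) grids of shape (rows, n_limit)."""
    n_chars = len(text)
    n_pad = -n_chars % n_limit  # spaces needed to fill the last row
    # Label newlines as "\n" but keep each one in a single cell,
    # so cells stay aligned with the per-character values
    chars = np.array([('\\n' if c == '\n' else c) for c in text] + [' '] * n_pad)
    vals = np.zeros(n_chars + n_pad, dtype=float)  # float keeps 0.0126, 0.87, ...
    k = min(len(values), n_chars)  # assumption: surplus values are at the end
    vals[:k] = values[:k]
    mask = np.zeros(n_chars + n_pad, dtype=bool)
    mask[n_chars:] = True  # hide the padding cells
    return (chars.reshape(-1, n_limit),
            vals.reshape(-1, n_limit),
            mask.reshape(-1, n_limit))
```

The three grids can then go to `sns.heatmap(vals, annot=chars, mask=mask, fmt='', cmap='RdYlGn', vmin=-1, vmax=1, xticklabels=False, yticklabels=False, cbar=False)`. A symmetric range keeps 0 at the yellow midpoint; one close to the spread of the weights (all between about 0.01 and 0.87 here) gives clearly different shades, whereas ±5 squeezes them into the pale centre of the colormap.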