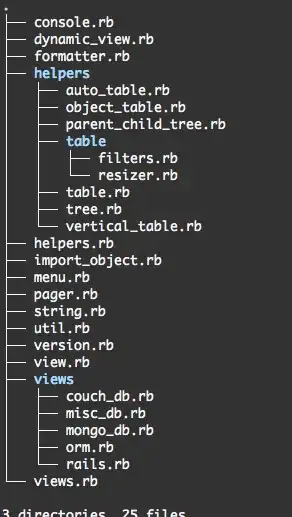

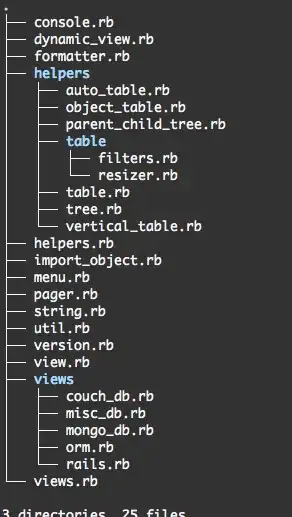

The fastest option depends not only on the length of the DataFrame (in this case, around 13M rows) but also on the number of groups. The perfplots above compare several ways of finding the maximum in each group.

If there are only a few (large) groups, using_idxmax may be the fastest option.

If there are many (small) groups and the DataFrame is not too large, using_sort_drop may be the fastest option.

Keep in mind, however, that while using_sort_drop, using_sort and using_rank start out looking very fast, their speed advantage over the other options disappears quickly as N = len(df) increases. For large enough N, using_idxmax becomes the fastest option, even if there are many groups.

using_sort_drop, using_sort and using_rank sort the DataFrame (or groups within the DataFrame). Sorting is O(N * log(N)) on average, while the other methods use O(N) operations. This is why methods like using_idxmax beat using_sort_drop for very large DataFrames.
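To make the complexity argument concrete, here is a pure-Python sketch. It is not what pandas does internally, and the helper names max_per_group_scan and max_per_group_sort are made up for illustration. The first helper makes a single O(N) pass over the rows; the second sorts first, which costs O(N * log(N)), and then keeps the first row seen per group, which mirrors the idea behind using_sort_drop.

```python
def max_per_group_scan(rows):
    """Single pass: remember the row with the largest delta per Id. O(N)."""
    best = {}
    for row in rows:
        key, delta = row
        if key not in best or delta > best[key][1]:
            best[key] = row
    return best


def max_per_group_sort(rows):
    """Sort by delta (descending), then keep the first row per Id. O(N log N)."""
    best = {}
    # sorted() is stable, so ties keep their original relative order
    for row in sorted(rows, key=lambda r: r[1], reverse=True):
        best.setdefault(row[0], row)
    return best


# Toy (Id, delta) rows, chosen only for illustration
rows = [(1, 5), (2, 3), (1, 9), (3, 7), (2, 8), (3, 7)]
scan_result = max_per_group_scan(rows)
sort_result = max_per_group_sort(rows)
print(scan_result == sort_result)
```

Both helpers select the same rows; only the amount of work differs, and that difference grows with N.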

Be aware that benchmark results may vary for a number of reasons, including machine specs, OS, and software versions. So it is important to run benchmarks on your own machine, and with test data tailored to your situation.

Based on the perfplots above, using_sort_drop may be an option worth considering for your DataFrame of 13M rows, especially if it has many (small) groups. Otherwise, I would suspect using_idxmax to be the fastest option -- but again, it's important that you check benchmarks on your machine.

Here is the setup I used to make the perfplots:

    import numpy as np
    import pandas as pd
    import perfplot

    def make_df(N):
        # lots of small groups
        df = pd.DataFrame(np.random.randint(N//10+1, size=(N, 2)), columns=['Id','delta'])
        # few large groups
        # df = pd.DataFrame(np.random.randint(10, size=(N, 2)), columns=['Id','delta'])
        return df

    def using_idxmax(df):
        return df.loc[df.groupby("Id")['delta'].idxmax()]

    def max_mask(s):
        # boolean mask that is True only at the position of the group's maximum
        i = np.asarray(s).argmax()
        result = [False]*len(s)
        result[i] = True
        return result

    def using_custom_mask(df):
        mask = df.groupby("Id")['delta'].transform(max_mask)
        return df.loc[mask]

    def using_isin(df):
        idx = df.groupby("Id")['delta'].idxmax()
        mask = df.index.isin(idx)
        return df.loc[mask]

    def using_sort(df):
        df = df.sort_values(by=['delta'], ascending=False, kind='mergesort')
        return df.groupby('Id', as_index=False).first()

    def using_rank(df):
        mask = (df.groupby('Id')['delta'].rank(method='first', ascending=False) == 1)
        return df.loc[mask]

    def using_sort_drop(df):
        # Thanks to jezrael
        # https://stackoverflow.com/questions/50381064/select-the-max-row-per-group-pandas-performance-issue/50389889?noredirect=1#comment87795818_50389889
        return df.sort_values(by=['delta'], ascending=False, kind='mergesort').drop_duplicates('Id')

    def using_apply(df):
        # idxmax returns the index label that df.loc needs; in recent pandas
        # versions Series.argmax returns an integer position instead
        selected_idx = df.groupby("Id").apply(lambda g: g['delta'].idxmax())
        return df.loc[selected_idx]

    def check(df1, df2):
        # compare results regardless of row order or index
        df1 = df1.sort_values(by=['Id','delta'], kind='mergesort').reset_index(drop=True)
        df2 = df2.sort_values(by=['Id','delta'], kind='mergesort').reset_index(drop=True)
        return df1.equals(df2)

    perfplot.show(
        setup=make_df,
        kernels=[using_idxmax, using_custom_mask, using_isin, using_sort,
                 using_rank, using_apply, using_sort_drop],
        n_range=[2**k for k in range(2, 20)],
        logx=True,
        logy=True,
        xlabel='len(df)',
        repeat=75,
        equality_check=check)

Another way to benchmark is to use IPython %timeit:

    In [55]: df = make_df(2**20)

    In [56]: %timeit using_sort_drop(df)
    1 loop, best of 3: 403 ms per loop

    In [57]: %timeit using_rank(df)
    1 loop, best of 3: 1.04 s per loop

    In [58]: %timeit using_idxmax(df)
    1 loop, best of 3: 15.8 s per loop
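Outside IPython, the standard-library timeit module gives comparable measurements. Here is a minimal sketch, assuming a hypothetical best_of helper and a stand-in workload (sorting a plain list) in place of the pandas functions from the setup:

```python
import timeit


def best_of(func, arg, repeat=3, number=1):
    """Return the smallest per-call time in seconds over `repeat` runs."""
    times = timeit.repeat(lambda: func(arg), repeat=repeat, number=number)
    return min(times) / number


# Stand-in workload (an assumption for illustration only); with the setup
# from this answer you would pass e.g. using_sort_drop and make_df(2**20).
data = list(range(100_000, 0, -1))
elapsed = best_of(sorted, data)
print(f"best of 3: {elapsed * 1e3:.3f} ms per loop")
```

Taking the minimum over several repeats, as %timeit's "best of 3" does, reduces the influence of other processes running on the machine.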