I have a large training dataset stored in TFRecord format (about 333), so I sharded the data into multiple files, about 1024 TFRecord files, instead of a single one. I use the `tf.data.Dataset` API for the input pipeline, like this:

```python
import tensorflow as tf

ds = tf.data.TFRecordDataset(files).shuffle(buffer_size).repeat()  # files, buffer_size defined elsewhere
ds = ds.prefetch(1)
```

I also have my own generator that yields `(batch_x, batch_y)` batches.
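The original generator code is not shown. The following is a minimal, hypothetical stand-in for a generator of that shape, assuming the data sits in in-memory Python sequences rather than coming from the `tf.data` pipeline. `batch_generator` and `ThreadSafeIter` are illustrative names, not Keras or TensorFlow APIs. The lock wrapper is relevant once more than one thread may call `next()` on the same generator: CPython raises `ValueError: generator already executing` when two threads resume one generator at the same time.

```python
import threading
from typing import Iterator, List, Sequence, Tuple, TypeVar

X = TypeVar("X")
Y = TypeVar("Y")


def batch_generator(xs: Sequence[X], ys: Sequence[Y],
                    batch_size: int) -> Iterator[Tuple[List[X], List[Y]]]:
    """Hypothetical stand-in: yield (batch_x, batch_y) forever, wrapping around the data."""
    n = len(xs)
    start = 0
    while True:
        idx = [(start + i) % n for i in range(batch_size)]
        yield [xs[i] for i in idx], [ys[i] for i in idx]
        start = (start + batch_size) % n


class ThreadSafeIter:
    """Serialize next() calls so several threads can share one generator."""

    def __init__(self, it: Iterator) -> None:
        self._it = it
        self._lock = threading.Lock()

    def __iter__(self) -> "ThreadSafeIter":
        return self

    def __next__(self):
        # Only one thread at a time may resume the wrapped generator.
        with self._lock:
            return next(self._it)
```

Wrapping a generator as `ThreadSafeIter(batch_generator(...))` before handing it to a multi-threaded consumer is a common pattern. It prevents concurrent `next()` calls, but by itself it would not change which TensorFlow graph any ops created inside the generator belong to.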

My problem is that the code only works when I set `workers=0` in `fit_generator()`.

Whenever I set it to anything greater than 0, I get the following error:

```
ValueError: Tensor("PrefetchDataset:0", shape=(), dtype=variant) must be from the same graph as Tensor("Iterator:0", shape=(), dtype=resource).
```

The documentation does not explain much about what `workers=0` means. It only says:

> If 0, will execute the generator on the main thread.

I found a similar problem on GitHub here, but it has no solution yet.

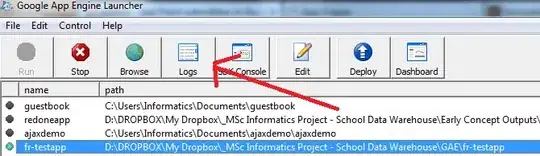

There is another similar question posted here, but my case is different: I use Keras rather than plain TensorFlow, and I never use `with tf.Graph().as_default()`. The answer there suggested that two graphs were being created instead of one, and the fix was to remove `tf.Graph().as_default()`. When I inspected my graph, I noticed that all the map functions related to my input pipeline are in a different graph (a subgraph) and are not attached to the main graph, as shown below:

I should also mention that this is a two-stage training. In the first stage, I build a network on an image dataset. The network is pre-trained on ImageNet, and I only train my classifier. That dataset is stored in an HDF5 file and fits in memory. In the second stage, I take the network trained in the first stage and attach some new blocks to it. The dataset for this stage is stored as TFRecord files, which is why I use the `tf.data` API for the input pipeline. So this new input pipeline does not exist in the graph from the first stage. I don't think that should matter, though: I only use the pre-trained network as a base model and add different blocks to it, so it is a completely new model.

The main reason I want to change `workers` is that my GPU utilization is always zero, which means the CPU is the bottleneck: the CPU takes a long time to extract the data while the GPU sits waiting. That is why training takes so long, about 9 hours per epoch.
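Roughly, both `workers > 0` in `fit_generator()` and `Dataset.prefetch` aim to overlap data loading with training. The sketch below shows that overlap in plain Python, assuming a single background producer thread and a bounded queue. `prefetch` here is a hypothetical helper, not the `tf.data` method of the same name.

```python
import queue
import threading
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")
_DONE = object()  # sentinel marking the end of the source


class _Failure:
    """Carries an exception from the producer thread to the consumer."""

    def __init__(self, exc: BaseException) -> None:
        self.exc = exc


def prefetch(source: Iterable[T], buffer_size: int = 2) -> Iterator[T]:
    """Yield items from `source`, produced ahead of time on a background thread.

    Up to `buffer_size` items are kept ready, so the consumer (e.g. a training
    step) can run while the next items are being loaded.
    """
    q: "queue.Queue[object]" = queue.Queue(maxsize=buffer_size)

    def producer() -> None:
        try:
            for item in source:
                q.put(item)  # blocks while the buffer is full
        except BaseException as exc:  # forward errors instead of hanging the consumer
            q.put(_Failure(exc))
            return
        q.put(_DONE)

    # Daemon thread: it will not keep the interpreter alive if the consumer stops early.
    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = q.get()
        if item is _DONE:
            return
        if isinstance(item, _Failure):
            raise item.exc
        yield item  # type: ignore[misc]
```

With a slow `source` and a slow consumer, the two run concurrently instead of taking turns. A single extra Python thread only helps when the loading work releases the GIL (I/O, or native code such as TensorFlow ops). For parsing TFRecords inside `tf.data` itself, `Dataset.map(..., num_parallel_calls=...)` is the documented way to parallelize the per-record work.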

Can anyone explain what this error means?