This is a sort-of follow-up to the question "Convert image to specific palette using PIL without dithering".

I, too, want to create a script that can convert an image to a specific set of colors without dithering.

I have implemented the work-around "custom quantization" function given as the answer to that question. Most of the script works well, except for one big problem.

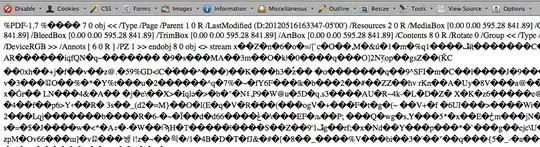

The light green color RGB(130,190,40) is replaced by a light brown color RGB(166,141,95) (see the light green at the top left of the mane).

    from PIL import Image

    def customConvert(silf, palette, dither=False):
        ''' Convert an RGB or L mode image to use a given P image's palette.
            PIL.Image.quantize() forces dither = 1.
            This custom quantize function will force it to 0.
            https://stackoverflow.com/questions/29433243/convert-image-to-specific-palette-using-pil-without-dithering
        '''
        silf.load()
        # use palette from reference image made below
        palette.load()
        im = silf.im.convert("P", 0, palette.im)
        # the 0 above means turn OFF dithering making solid colors
        return silf._new(im)

    palette = [
        0,0,0,
        0,0,255,
        15,29,15,
        26,141,52,
        41,41,41,
        65,105,225,
        85,11,18,
        128,0,128,
        135,206,236,
        144,238,144,
        159,30,81,
        165,42,42,
        166,141,95,
        169,169,169,
        173,216,230,
        211,211,211,
        230,208,122,
        245,245,220,
        247,214,193,
        255,0,0,
        255,165,0,
        255,192,203,
        255,255,0,
        255,255,255
    ] + [0,] * 232 * 3

    # a palette image to use for quant
    paletteImage = Image.new('P', (1, 1), 0)
    paletteImage.putpalette(palette)

    # open the source image
    imageOrginal = Image.open('lion.png').convert('RGB')

    # convert it using our palette image
    imageCustomConvert = customConvert(imageOrginal, paletteImage, dither=False).convert('RGB')
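To narrow down where the mismatch comes from, it may help to check which palette entry a plain nearest-neighbour search in RGB space would pick for the problem pixel. The following is only a diagnostic sketch: `nearest_rgb` and `PALETTE_COLORS` are hypothetical names introduced here, and the code does not claim to reproduce whatever matching PIL does internally.

```python
# Hypothetical diagnostic helper; not part of PIL.
# The 24 palette colors from the script, grouped as (R, G, B) tuples.
PALETTE_COLORS = [
    (0, 0, 0), (0, 0, 255), (15, 29, 15), (26, 141, 52),
    (41, 41, 41), (65, 105, 225), (85, 11, 18), (128, 0, 128),
    (135, 206, 236), (144, 238, 144), (159, 30, 81), (165, 42, 42),
    (166, 141, 95), (169, 169, 169), (173, 216, 230), (211, 211, 211),
    (230, 208, 122), (245, 245, 220), (247, 214, 193), (255, 0, 0),
    (255, 165, 0), (255, 192, 203), (255, 255, 0), (255, 255, 255),
]


def nearest_rgb(color, colors):
    """Return the entry of `colors` with the smallest squared
    Euclidean distance to `color` in RGB space."""
    return min(
        colors,
        key=lambda c: sum((a - b) ** 2 for a, b in zip(color, c)),
    )


print(nearest_rgb((130, 190, 40), PALETTE_COLORS))
```

For the light green pixel this picks RGB(166,141,95), the same light brown that shows up in the converted image, so the result is at least consistent with matching by RGB distance rather than by Delta-E.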

CIE76 Delta-E:

Currently: RGB(130,190,40) --> RGB(166, 141, 95) = 57.5522

Expected: RGB(130,190,40) --> RGB(144,238,144) = 31.5623
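For reference, the Delta-E figures above can be reproduced with a small pure-Python sketch. It assumes the standard sRGB to CIE L\*a\*b\* conversion with a D65 white point; `srgb_to_lab` and `delta_e_cie76` are hypothetical helper names, not PIL functions.

```python
import math


def srgb_to_lab(rgb):
    """Convert an 8-bit sRGB triple to CIE L*a*b* (assumes D65 white)."""
    def to_linear(c):
        # Undo the sRGB gamma encoding.
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (to_linear(c) for c in rgb)

    # Linear RGB -> XYZ, normalised by the D65 reference white.
    x = (0.4124564 * r + 0.3575761 * g + 0.1804375 * b) / 0.95047
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = (0.0193339 * r + 0.1191920 * g + 0.9503041 * b) / 1.08883

    def f(t):
        # Piecewise function from the CIE L*a*b* definition.
        if t > 216 / 24389:
            return t ** (1 / 3)
        return (24389 / 27 * t + 16) / 116

    fx, fy, fz = f(x), f(y), f(z)
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def delta_e_cie76(rgb1, rgb2):
    """CIE76 Delta-E: Euclidean distance between two colors in L*a*b*."""
    lab1, lab2 = srgb_to_lab(rgb1), srgb_to_lab(rgb2)
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(lab1, lab2)))


print(delta_e_cie76((130, 190, 40), (166, 141, 95)))   # light brown
print(delta_e_cie76((130, 190, 40), (144, 238, 144)))  # light green
```

The two printed values should land close to the 57.55 and 31.56 quoted above; small differences are possible depending on the exact conversion constants used.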

Can someone explain whether I wrote the code incorrectly, or suggest how to get it to work?