I'm working on a project that loads 100+ GB text files, and one of the steps is to count the rows in a specified file. I have to do it the following way to avoid an out-of-memory exception. Is there a faster way, or what is the most efficient way to multitask this? (I know you could do something like split the work across 4 threads and combine the results, but I don't know the most efficient way.)

    // long rather than uint: a 100+ GB file with short lines could exceed uint.MaxValue rows
    long loadCount2 = 0;
    foreach (var line in File.ReadLines(currentPath))
    {
        loadCount2++;
    }

I plan to run the program on a server with four dual-core CPUs and 40 GB of RAM once its location has been fixed. Currently it runs on a small temporary server with 4 cores and 8 GB of RAM. (I don't know how threading behaves across multiple CPUs.)
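A minimal sketch of the multi-threaded idea, assuming the file is ASCII or UTF-8 so that every line ends at a `'\n'` byte: split the file into byte ranges, count newline bytes in each range on its own task, and sum the partial counts. The names `ParallelLineCounter`, `CountNewlinesParallel`, `CountRange` and the `parts` parameter are hypothetical, chosen only for illustration. A final line without a trailing newline is not counted here.

```csharp
using System;
using System.IO;
using System.Threading;
using System.Threading.Tasks;

public static class ParallelLineCounter
{
    // Counts '\n' bytes in the byte range [start, end) of the file.
    private static long CountRange(string path, long start, long end)
    {
        var buffer = new byte[1024 * 1024];
        long count = 0;
        // Each range opens its own stream so the tasks do not share a file position.
        using (var fs = new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.Read))
        {
            fs.Seek(start, SeekOrigin.Begin);
            long remaining = end - start;
            while (remaining > 0)
            {
                int toRead = (int)Math.Min(buffer.Length, remaining);
                int read = fs.Read(buffer, 0, toRead);
                if (read == 0) break; // unexpected end of file
                for (int i = 0; i < read; i++)
                {
                    if (buffer[i] == (byte)'\n') count++;
                }
                remaining -= read;
            }
        }
        return count;
    }

    // Splits the file into `parts` byte ranges and sums the per-range counts.
    public static long CountNewlinesParallel(string path, int parts)
    {
        long length = new FileInfo(path).Length;
        long chunk = (length + parts - 1) / parts; // ceiling division
        long total = 0;
        Parallel.For(0, parts, p =>
        {
            long start = Math.Min(p * chunk, length);
            long end = Math.Min(start + chunk, length);
            long partial = CountRange(path, start, end);
            Interlocked.Add(ref total, partial); // thread-safe accumulation
        });
        return total;
    }
}
```

It could be called as `ParallelLineCounter.CountNewlinesParallel(currentPath, 4)`. Whether this is any faster depends on the storage: if the disk is the bottleneck, extra threads may not help and could even slow things down.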

I tested a lot of your suggestions.

    Stopwatch sw2 = Stopwatch.StartNew();
    {
        using (FileStream fs = File.Open(json, FileMode.Open))
            CountLinesMaybe(fs);
    }
    TimeSpan t = TimeSpan.FromMilliseconds(sw2.ElapsedMilliseconds);
    string answer = string.Format("{0:D2}h:{1:D2}m:{2:D2}s:{3:D3}ms", t.Hours, t.Minutes, t.Seconds, t.Milliseconds);
    Console.WriteLine(answer);

    sw2.Restart();
    loadCount2 = 0;

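    // loadCount2++ is not atomic, so concurrent increments inside Parallel.ForEach can be lost.
    // Interlocked.Increment (System.Threading) on a separate long counter keeps the count exact.
    long parallelCount = 0;
    Parallel.ForEach(File.ReadLines(json), (line) =>
    {
        Interlocked.Increment(ref parallelCount);
    });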

    t = TimeSpan.FromMilliseconds(sw2.ElapsedMilliseconds);
    answer = string.Format("{0:D2}h:{1:D2}m:{2:D2}s:{3:D3}ms", t.Hours, t.Minutes, t.Seconds, t.Milliseconds);
    Console.WriteLine(answer);

    sw2.Restart();
    loadCount2 = 0;
    foreach (var line in File.ReadLines(json))
    {
        loadCount2++;
    }
    t = TimeSpan.FromMilliseconds(sw2.ElapsedMilliseconds);
    answer = string.Format("{0:D2}h:{1:D2}m:{2:D2}s:{3:D3}ms", t.Hours, t.Minutes, t.Seconds, t.Milliseconds);
    Console.WriteLine(answer);

    sw2.Restart();
    loadCount2 = 0;
    int query = (int)Convert.ToByte('\n');
    using (var stream = File.OpenRead(json))
    {
        int current;
        do
        {
            current = stream.ReadByte();
            if (current == query)
            {
                loadCount2++;
                continue;
            }
        } while (current != -1);
    }
    t = TimeSpan.FromMilliseconds(sw2.ElapsedMilliseconds);
    answer = string.Format("{0:D2}h:{1:D2}m:{2:D2}s:{3:D3}ms", t.Hours, t.Minutes, t.Seconds, t.Milliseconds);
    Console.WriteLine(answer);
    Console.ReadKey();

    private const char CR = '\r';
    private const char LF = '\n';
    private const char NULL = (char)0;

    public static long CountLinesMaybe(Stream stream)
    {
        //Ensure.NotNull(stream, nameof(stream));

        var lineCount = 0L;
        var byteBuffer = new byte[1024 * 1024];
        const int BytesAtTheTime = 4;
        var detectedEOL = NULL;
        var currentChar = NULL;

        int bytesRead;
        while ((bytesRead = stream.Read(byteBuffer, 0, byteBuffer.Length)) > 0)
        {
            var i = 0;
            for (; i <= bytesRead - BytesAtTheTime; i += BytesAtTheTime)
            {
                currentChar = (char)byteBuffer[i];

                if (detectedEOL != NULL)
                {
                    if (currentChar == detectedEOL) { lineCount++; }

                    currentChar = (char)byteBuffer[i + 1];
                    if (currentChar == detectedEOL) { lineCount++; }

                    currentChar = (char)byteBuffer[i + 2];
                    if (currentChar == detectedEOL) { lineCount++; }

                    currentChar = (char)byteBuffer[i + 3];
                    if (currentChar == detectedEOL) { lineCount++; }
                }
                else
                {
                    if (currentChar == LF || currentChar == CR)
                    {
                        detectedEOL = currentChar;
                        lineCount++;
                    }
                    i -= BytesAtTheTime - 1;
                }
            }

            for (; i < bytesRead; i++)
            {
                currentChar = (char)byteBuffer[i];

                if (detectedEOL != NULL)
                {
                    if (currentChar == detectedEOL) { lineCount++; }
                }
                else
                {
                    if (currentChar == LF || currentChar == CR)
                    {
                        detectedEOL = currentChar;
                        lineCount++;
                    }
                }
            }
        }

        if (currentChar != LF && currentChar != CR && currentChar != NULL)
        {
            lineCount++;
        }
        return lineCount;
    }

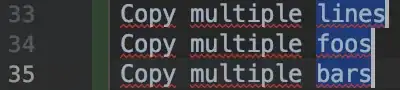

The results show great progress, but I had hoped to reach 20 minutes. I would like to run them on my stronger server to see the effect of having more CPUs.

The second run returned 23 min, 25 min, 22 min, and 29 min, meaning that the methods don't really make any difference. (I was not able to take a screenshot because I had removed the pause, and the program continued by clearing the screen.)