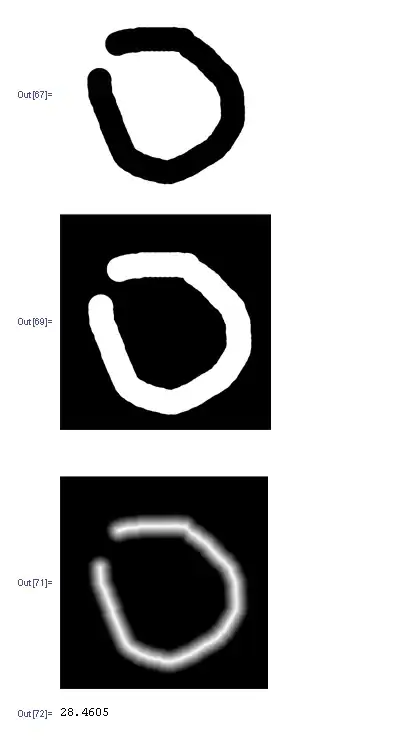

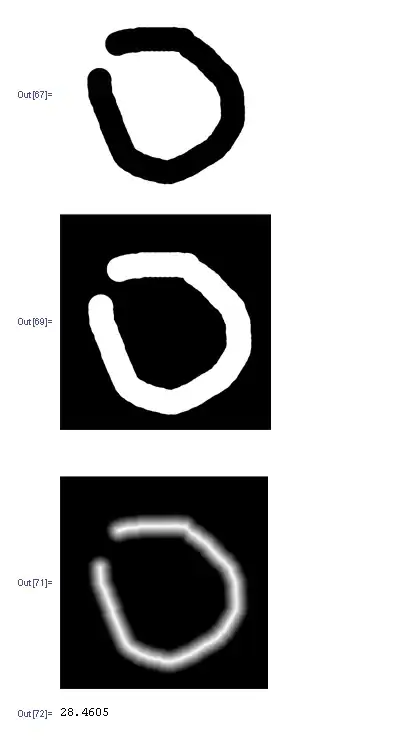

ARCore's new Augmented Faces API, which works with the front-facing camera and needs no depth sensor, offers a high-quality, 468-point 3D canonical mesh that lets users attach effects such as animated masks, glasses, and skin retouching to their faces. The mesh provides coordinates and region-specific anchors that make it possible to add these effects.
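In the Java API the mesh comes back from `AugmentedFace.getMeshVertices()` as a `FloatBuffer` of vertices packed as consecutive (x, y, z) triples, in meters, relative to the face's center pose. The plain-Java sketch below assumes that packing and computes an axis-aligned bounding box of the mesh, for example to size an effect. It runs without a device by wrapping a few made-up vertices; `FaceMeshBounds` and `boundingBox` are hypothetical helpers, not part of ARCore.

```java
import java.nio.FloatBuffer;

public class FaceMeshBounds {
    // Floats per vertex in the packed (x, y, z) layout assumed here.
    static final int FLOATS_PER_VERTEX = 3;

    /** Returns {minX, minY, minZ, maxX, maxY, maxZ} for a packed xyz vertex buffer. */
    static float[] boundingBox(FloatBuffer vertices) {
        // Work on a duplicate so the caller's buffer position is left untouched.
        FloatBuffer buf = vertices.duplicate();
        buf.rewind();
        if (buf.remaining() == 0 || buf.remaining() % FLOATS_PER_VERTEX != 0) {
            throw new IllegalArgumentException("buffer must hold whole (x, y, z) triples");
        }
        float[] box = {
            Float.POSITIVE_INFINITY, Float.POSITIVE_INFINITY, Float.POSITIVE_INFINITY,
            Float.NEGATIVE_INFINITY, Float.NEGATIVE_INFINITY, Float.NEGATIVE_INFINITY
        };
        while (buf.hasRemaining()) {
            // Read one vertex and widen the min/max range on each axis.
            for (int axis = 0; axis < FLOATS_PER_VERTEX; axis++) {
                float v = buf.get();
                box[axis] = Math.min(box[axis], v);
                box[axis + 3] = Math.max(box[axis + 3], v);
            }
        }
        return box;
    }

    public static void main(String[] args) {
        // Stand-in data: three vertices instead of the 468 a real face mesh provides.
        FloatBuffer vertices = FloatBuffer.wrap(new float[] {
            -0.05f, 0.02f, 0.01f,
             0.05f, -0.03f, 0.00f,
             0.00f, 0.07f, 0.04f
        });
        float[] box = boundingBox(vertices);
        System.out.printf("vertices: %d%n", vertices.capacity() / FLOATS_PER_VERTEX);
        System.out.printf("width: %.2f m, height: %.2f m%n", box[3] - box[0], box[4] - box[1]);
    }
}
```

On a device you would pass `face.getMeshVertices()` instead of the wrapped array.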

I believe facial landmark detection in ARCore 1.7 is done with computer vision algorithms under the hood. To get started in Unity or Sceneform, create an ARCore session with the front-facing camera and the Augmented Faces "mesh" mode enabled. Note that other AR features, such as plane detection, aren't currently available when using the front-facing camera. `AugmentedFace` extends `Trackable`, so faces are detected and updated just like planes, Augmented Images, and other trackables.

As you know, several years ago Google released the Face API, which performs face detection: it locates faces in pictures, along with their position (where they are in the picture) and orientation (which way they're facing, relative to the camera). The Face API lets you detect landmarks (points of interest on a face) and perform classifications to determine whether the eyes are open or closed and whether a face is smiling. It also detects and follows faces in moving images, which is known as face tracking.

So ARCore 1.7 borrowed some architectural elements from the Face API, and now it not only detects facial landmarks and generates 468 points for them, but also tracks them in real time at 60 fps and attaches 3D facial geometry to them.

See Google's Face Detection Concepts Overview.

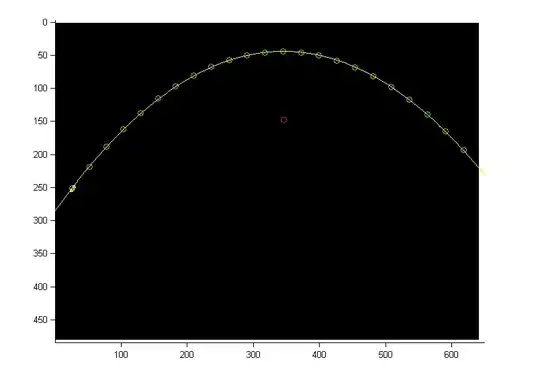

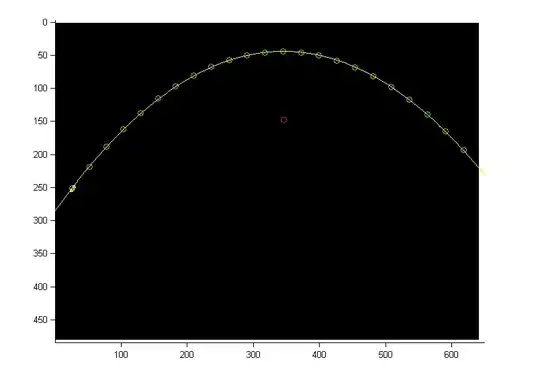

Calculating a depth channel from video shot by a moving RGB camera isn't rocket science. You just need to apply the parallax formula to tracked features: if a feature on a static object shifts a lot between frames, the object is closer to the camera; if it shifts only a little, the object is farther away. Compositing apps such as The Foundry NUKE and Blackmagic Fusion have used this approach to calculate depth channels for more than 10 years. Now the same principles are available in ARCore.
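A minimal sketch of that parallax relation, assuming a pinhole camera that moved sideways by a baseline B (meters) between two frames without rotating, a focal length f in pixels, and a static scene. A feature at depth Z then shifts by a disparity d = f·B/Z pixels, so Z = f·B/d. This is the textbook formula, not ARCore's internal implementation; the class, method names and numbers are hypothetical.

```java
public class ParallaxDepth {
    /** Horizontal shift (pixels) of one tracked feature between two frames. */
    static double disparity(double xFrame0, double xFrame1) {
        return Math.abs(xFrame1 - xFrame0);
    }

    /**
     * Depth (meters) of a static feature from its disparity, assuming pure sideways
     * camera translation of baselineMeters and a pinhole camera with focalLengthPx.
     */
    static double depthFromDisparity(double focalLengthPx, double baselineMeters, double disparityPx) {
        if (disparityPx <= 0) {
            throw new IllegalArgumentException("disparity must be positive");
        }
        // Z = f * B / d: a larger shift means a closer feature.
        return focalLengthPx * baselineMeters / disparityPx;
    }

    public static void main(String[] args) {
        double focalLengthPx = 500.0; // hypothetical camera intrinsics
        double baselineMeters = 0.10; // camera moved 10 cm between the two frames
        // The near feature moves from x=320 to x=370, the far one only to x=325.
        double near = depthFromDisparity(focalLengthPx, baselineMeters, disparity(320, 370));
        double far = depthFromDisparity(focalLengthPx, baselineMeters, disparity(320, 325));
        System.out.printf("near: %.1f m, far: %.1f m%n", near, far);
    }
}
```

Real footage also involves camera rotation and noisy feature tracks, which is why compositing tools solve the full camera motion first.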

You cannot apply the face detection/tracking algorithm to a custom object or to another part of the body, such as a hand. The Augmented Faces API was developed for faces only.

Here's what the Java code for activating the Augmented Faces feature looks like:

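```java
import android.app.Activity;
import com.google.ar.core.Config;
import com.google.ar.core.Session;
import com.google.ar.core.exceptions.UnavailableException;
import java.util.EnumSet;

// Create an ARCore session that supports Augmented Faces
public Session createAugmentedFacesSession(Activity activity) throws UnavailableException {
    // Use the selfie (front-facing) camera
    Session session = new Session(activity, EnumSet.of(Session.Feature.FRONT_CAMERA));

    // Enable Augmented Faces with the 3D face mesh
    Config config = session.getConfig();
    config.setAugmentedFaceMode(Config.AugmentedFaceMode.MESH3D);
    session.configure(config);

    return session;
}
```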

Then get a list of detected faces:

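```java
import com.google.ar.core.AugmentedFace;
import java.util.Collection;

// All faces ARCore currently knows about
Collection<AugmentedFace> fl = session.getAllTrackables(AugmentedFace.class);
```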

Finally, render the effect:

```java
// scene is the Sceneform Scene; faceRegionsRenderable (ModelRenderable) and
// faceMeshTexture (Texture) are assumed to be loaded beforehand
for (AugmentedFace face : fl) {
    // Create a face node and add it to the scene
    AugmentedFaceNode faceNode = new AugmentedFaceNode(face);
    faceNode.setParent(scene);

    // Overlay the 3D assets on the face
    faceNode.setFaceRegionsRenderable(faceRegionsRenderable);

    // Overlay a texture on the face
    faceNode.setFaceMeshTexture(faceMeshTexture);
}
```
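If that rendering loop runs once per frame (for example inside a Sceneform `Scene.OnUpdateListener`), it creates a fresh `AugmentedFaceNode` on every frame. Google's Sceneform Augmented Faces sample follows a pattern of keeping a map from each face to its node and detaching nodes whose face reports `TrackingState.STOPPED`. The sketch below shows only that bookkeeping, in plain Java so it runs without a device; `Face`, `FaceNode` and the local `TrackingState` enum are hypothetical stand-ins for ARCore's `AugmentedFace`, Sceneform's `AugmentedFaceNode` and ARCore's `TrackingState`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

public class FaceNodeBookkeeping {
    // Hypothetical stand-ins for the ARCore/Sceneform types.
    enum TrackingState { TRACKING, PAUSED, STOPPED }

    static final class Face {
        final int id;
        TrackingState state = TrackingState.TRACKING;
        Face(int id) { this.id = id; }
    }

    static final class FaceNode {
        final Face face;
        FaceNode(Face face) { this.face = face; }
    }

    // One node per face, kept across frames.
    final Map<Face, FaceNode> faceNodeMap = new HashMap<>();

    /** Called once per frame with the faces returned for that frame. */
    void onUpdate(List<Face> faces) {
        // Create a node only for tracked faces that do not have one yet.
        for (Face face : faces) {
            if (face.state == TrackingState.TRACKING && !faceNodeMap.containsKey(face)) {
                faceNodeMap.put(face, new FaceNode(face));
            }
        }
        // Drop nodes whose face stopped tracking (in Sceneform: node.setParent(null)).
        Iterator<Map.Entry<Face, FaceNode>> it = faceNodeMap.entrySet().iterator();
        while (it.hasNext()) {
            if (it.next().getKey().state == TrackingState.STOPPED) {
                it.remove();
            }
        }
    }

    public static void main(String[] args) {
        FaceNodeBookkeeping scene = new FaceNodeBookkeeping();
        Face face = new Face(1);
        List<Face> frame = new ArrayList<>();
        frame.add(face);
        scene.onUpdate(frame);
        scene.onUpdate(frame); // same face on the next frame: no duplicate node
        System.out.println("nodes while tracking: " + scene.faceNodeMap.size());
        face.state = TrackingState.STOPPED;
        scene.onUpdate(frame);
        System.out.println("nodes after stop: " + scene.faceNodeMap.size());
    }
}
```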