I am doing a BULK INSERT into SQL Server, and it is not inserting UTF-8 characters into the database properly. The data file contains these characters, but after the bulk insert runs, the database rows contain garbage characters in their place.
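To make the symptom concrete, here is a minimal Python sketch of the kind of garbage that appears when UTF-8 bytes are read as a single-byte code page. It assumes the file is being interpreted as Windows-1252; that is only a guess at the mechanism, not something confirmed for SQL Server, and the function name `misread_utf8_as_cp1252` is made up for this illustration.

```python
def misread_utf8_as_cp1252(text: str) -> str:
    """Encode text as UTF-8, then decode those bytes as Windows-1252.

    This mimics a reader that treats every byte as one character
    (an assumption about the cause, not confirmed SQL Server behavior).
    Bytes that cp1252 leaves undefined are replaced with U+FFFD.
    """
    return text.encode("utf-8").decode("cp1252", errors="replace")


if __name__ == "__main__":
    for sample in ["café", "naïve", "€"]:
        # Each non-ASCII character turns into two or three junk characters.
        print(sample, "->", misread_utf8_as_cp1252(sample))
```

Plain ASCII text passes through unchanged in this sketch, which would explain why only the non-ASCII characters look wrong in the table.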

My first suspect was the last line of the format file:

10.0

3

1 SQLCHAR 0 0 "{|}" 1 INSTANCEID ""

2 SQLCHAR 0 0 "{|}" 2 PROPERTYID ""

3 SQLCHAR 0 0 "[|]" 3 CONTENTTEXT "SQL_Latin1_General_CP1_CI_AS"

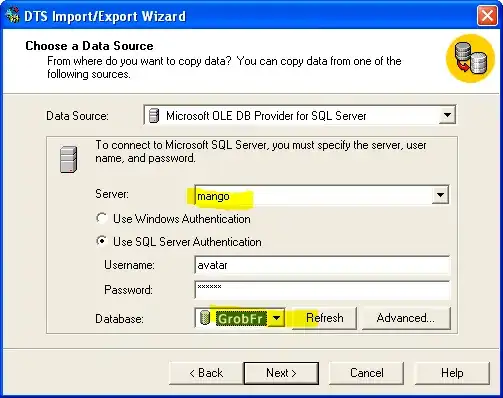

But after reading this official page, it seems to me that this is actually a bug in how the insert operation reads the data file in SQL Server 2008. We are using version 2008 R2.

What is the solution to this problem, or at least a workaround?