I have a Spark SQL job that used to finish in under 10 minutes but now takes 3 hours after a cluster migration, and I need to dig into what it's actually doing. I'm new to Spark, so please bear with me if I ask something unrelated.

I increased `spark.executor.memory`, but it made no difference.

Env: Azure HDInsight Spark 2.4 on Azure Storage

SQL: reads and joins some data, then writes the result to a Hive metastore table.

The `spark.sql` script ends with the following code:

```
.write.mode("overwrite").saveAsTable("default.mikemiketable")
```

Application Behavior:

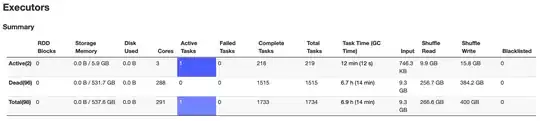

Within the first 15 minutes it loads the data and completes most tasks (199/200), leaving only one executor alive that keeps doing shuffle reads and writes. Because only that one executor is left, we have to wait 3 hours for the application to finish.
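One pattern that may be worth checking (a hypothesis, not something the screenshots confirm): 200 is Spark's default value for `spark.sql.shuffle.partitions`, so the 200 tasks are likely the shuffle partitions of the join. If one join key is far more frequent than the others, hash partitioning sends all of that key's rows to a single partition, which one task then processes alone while the rest finish quickly. The minimal pure-Python sketch below simulates this with hypothetical data (`hot-key` and the row counts are invented for illustration); it uses `zlib.crc32` as a stand-in for the Murmur3 hash Spark actually uses, so the partition numbers will not match a real job.

```python
import zlib
from collections import Counter

# Spark's default for spark.sql.shuffle.partitions.
NUM_SHUFFLE_PARTITIONS = 200


def partition_for(key: str, num_partitions: int = NUM_SHUFFLE_PARTITIONS) -> int:
    # Stand-in hash: Spark uses Murmur3; crc32 is used here only because it is
    # deterministic across Python processes (unlike the built-in hash()).
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def rows_per_partition(keys, num_partitions: int = NUM_SHUFFLE_PARTITIONS):
    # Count how many rows each shuffle partition (i.e. each task) would receive.
    counts = Counter(partition_for(k, num_partitions) for k in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]


# Hypothetical data: 10,000 distinct keys plus one "hot" key repeated 1,000,000 times.
keys = [f"key-{i}" for i in range(10_000)] + ["hot-key"] * 1_000_000
sizes = rows_per_partition(keys)
busiest = max(range(len(sizes)), key=sizes.__getitem__)

print(f"busiest partition {busiest}: {sizes[busiest]} rows")
print(f"median partition: {sorted(sizes)[len(sizes) // 2]} rows")
```

In this simulation one partition ends up with over a million rows while a typical partition holds only a few dozen, which is the same "199 fast tasks, 1 slow task" shape. If the real join inputs show a key distribution like this, adding executor memory alone would not spread that one partition's rows across more tasks.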

I'm not sure what that executor is doing:

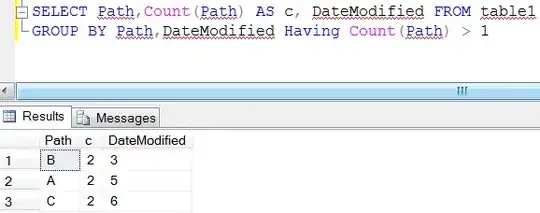

Checking periodically, I can see the shuffle read size increasing:

So I increased `spark.executor.memory` to 20g, but nothing changed. Ambari and YARN show that the cluster still has plenty of free resources.

Almost all executors have been released:

Any guidance is greatly appreciated.